Prevent Performance Review Surprises with a No‑Surprise System

The Cost of Surprise—and How to Prevent Performance Review Surprises

Once, a manager blindsided me in a performance review with an old issue we'd never discussed. The strangest part: we'd had weekly 1:1s for months. I can still picture the scanned notes sliding across the table, the sudden awkward shift in the room. The criticism landed with an uncomfortable finality. Temporal whiplash. I kept thinking, "How did I miss this? How did we both miss this?"

That moment broke something. My trust in the process dropped. My anxiety spiked. I started questioning what those weekly conversations were actually for. If this could blindside me now, what else was hiding? When trust in the leader is already shaky, negative feedback hits even harder and drags down the sense that the feedback is useful. It’s not just about the issue. It’s about whether the whole system can be counted on.

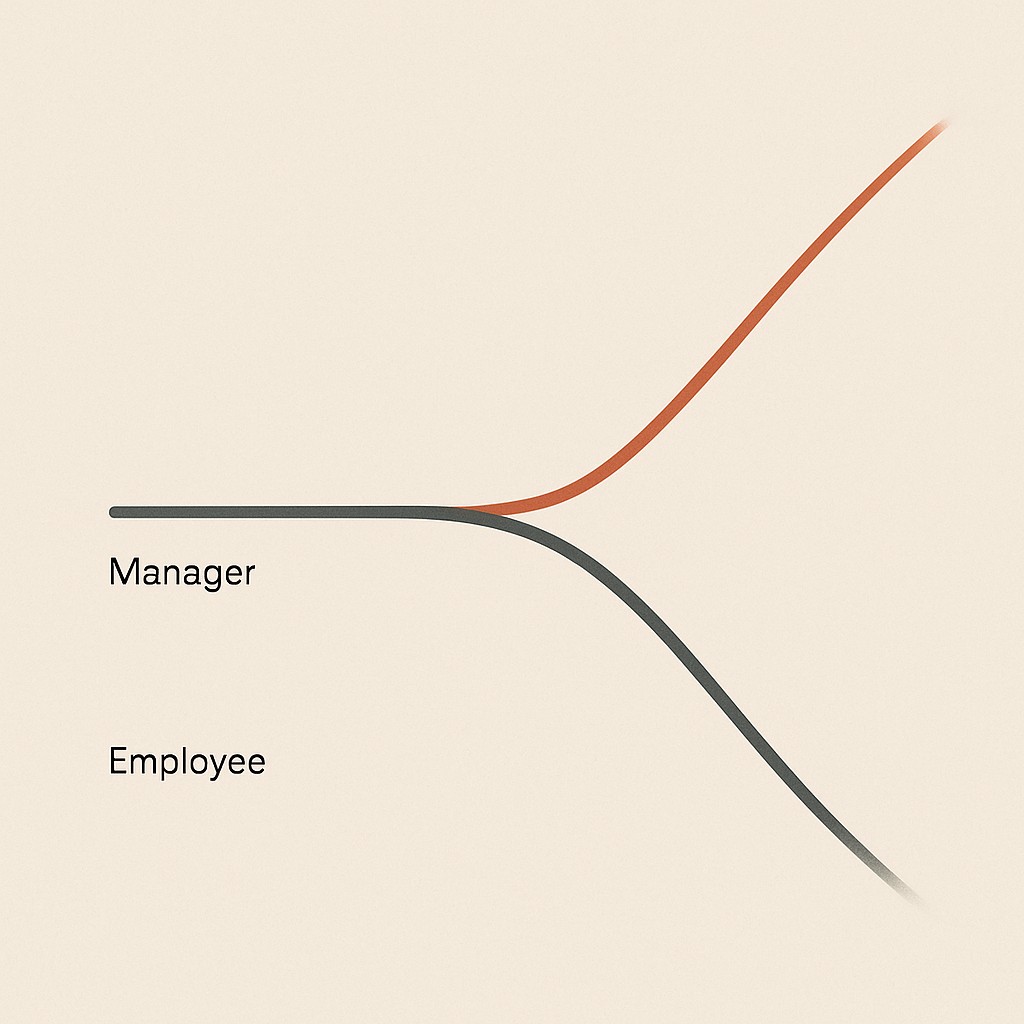

At its core, the problem is feedback that waits for the review. Deferred feedback lurks out of sight, leaving engineers misaligned and stalling growth just when it should be compounding. If you've ever felt lost during a review, or left wondering how the "official" feedback managed to skip every actual conversation, you know what I mean.

Here's what I think needs to change. Reviews should be predictable and fair. They only work when expectations and growth are handled continuously and in the open, not stored up for a dramatic reveal. The stakes are real: research on rating bias has found that more than 60% of the variance in performance ratings can reflect the individual manager's idiosyncrasies rather than the employee's actual performance. Predictability and transparency aren't just nice to have. They're a must. That's why, for years now, I've led with one principle: no surprises.

If a review contains surprises, the breakdown didn't happen at the review. It happened months earlier. In my experience, timely feedback does far more for growth and retention than feedback saved for later. Surprises land when we hold back too long. Let's build the loop that keeps us aligned, not just in theory, but in every conversation.

Why Misalignment Creeps In—and How to Catch It Early

Drift isn't dramatic. Expectations shift quietly. Priorities get nudged by last week's fire, and perceptions start to warp before anyone notices. Nothing changes all at once; small gaps snowball over months. A review doesn't create these gaps. It just puts a spotlight on all of them at once, and suddenly things that felt minor carry months of weight.

This is why I treat weekly 1:1s as the primary early-warning system. The rhythm matters. If we use these conversations to catch drift before it turns into distance, small priority misalignments and brewing frustrations get airtime long before they become landmines. Don't let the review be the first checkpoint. By then, it's too late.

After any inflection point—a shipped feature, a team conflict, a missed deadline—I like to insert a lightweight debrief. It’s rarely formal. Just “How did that go for you?” right after the dust clears. That’s when wins and tensions surface naturally, before they harden into “issues.” Growth conversations stay live, not boxed up until review time.

Feedback isn't a grenade. I see it as ongoing calibration: tiny course corrections so we always know the direction we're heading. When the review arrives, nobody's bracing for impact. It's a summary, not a verdict. That's what builds trust, lowers anxiety, and lets actual growth compound. No surprises, because the signals were clear all along.

Last spring, one of my direct reports showed up for our check-in and mentioned—completely offhand—that he hadn’t seen his own job description in almost two years. I paused. We both laughed (awkwardly), then realized most of the team was probably in the same boat. Two hours later, I was still digging through shared folders to find old PDFs. I kept thinking about drifting expectations and how, sometimes, people just quietly lose the thread. That goofy scramble made me double down on surfacing the basics in 1:1s, not just relying on memory.

The No-Surprise System: Making Reviews Uneventful (In a Good Way)

Nobody should walk into a review bracing for a twist ending. The simplest system I know for preventing that is built around dozens of small touchpoints, expectations tracked in the open, and deliberate moments of shared reflection. Mapping progress all year keeps everything visible, honest, and actionable. I'll admit, this only clicks when feedback isn't an event but a running conversation.

Six months ago, I thought a continuous feedback loop sounded like corporate noise: endless nudges, nothing landing. But seeing growth week by week made the difference. Expectations got clarified upfront, and wounds got aired quickly instead of being left to fester. When you see it play out over a quarter, alignment really does become the norm.

Every week in our 1:1s, we check actual work against the goals we set. Yep, I’m literally pulling up last month’s list and asking “Are these still the priorities?” If something’s off, we flag it then, not later.

Halfway through the cycle, I do a mid-quarter check-in anchored in active listening to spot blind spots. This isn’t a review, just a chance to recalibrate scope if the project’s turning out bigger or smaller than expected. After major launches, we do post-mortems. Not just technical, but also “What did you learn from leading that piece?” These debriefs aren’t homework. They’re quick and dirty. Sometimes it’s two bullet points. The point is, lessons get converted into next steps immediately. All of this means feedback gets baked in steadily, not held back. When the actual review rolls around, we’re just summarizing what’s already on the table.

Don’t wing someone’s year. It’s easy to think you’ll remember the highlights, the struggles, all the “little things.” You won’t. Preparation is a promise you make to your report and to yourself. Their career narrative and compensation moment should never hinge on improv.

Before any review, I ask reports to reflect—specifically. “What are you most proud of? Where did you grow? What do you want to approach differently?” That way the conversation starts with actual evidence and a shared perspective. I’m not guessing, and neither are they.

If I heard "I was hoping for more" during a review, I'd know I had already failed. Getting expectations explicit and repeatedly aligned makes comp conversations boring (which is good). No surprise missed targets. No ambiguous bars dragged out at the last minute.

Here's a nerdy confession. I treat growth almost like continuous integration for people. Small, frequent checks catch regressions far earlier than waiting for one big red build. The review should never be the first time the build fails. It's just the final pass over what we already fixed along the way.

When Preparation Makes or Breaks the Review

I've sat through reviews where, ten minutes in, both sides realize nobody brought receipts. You drift from vague impressions ("I think you did well on that project… was it March?") to arm's-length generalities. It feels awkward because it is. No single thread ties things together, and you both know it.

Contrast that with a review that starts from a tightly woven narrative, built by pulling in check-in notes, results from actual projects, and feedback from peers. The work shifts from guesswork to synthesis. Suddenly it's possible to be precise and fair, because you can point to real events, not just feelings. When you build from a bank of context, the conversation gets sharper and more trustworthy, even when there are hard truths to unpack.

Remember that scanned-notes moment? What still stands out is how flimsy it felt compared to reviews built from lived context. Surprises turn what could be simple into a mess, and the sting lingers, sometimes for years.

Here’s the core principle, as simple as I can put it. A performance review should be the summary, not the source, of your feedback, goals, and growth. If something lands in a review, it should already sound familiar. If it’s a surprise, it means the continuous loop broke down somewhere along the line. The process works when nothing in the review feels brand new to you or your report.

So when we meet for the actual review, I start with their self-assessment (what they're most proud of, where they think they've moved the needle) and, when relevant, their weekly burnout self-checks. Then we compare notes, check the evidence, and talk about any differences that crop up. From there, the focus shifts to which experiments or growth opportunities are next, not re-litigating the past year. Keeping things rooted in evidence and lived context turns what could be an anxious event into a reflective, actionable conversation.

The promise here is simple. Predictable, uneventful (in the best way) reviews, where the outcome feels earned—never argued or manufactured. That’s the bar I hold myself to now, and the one I’d want if our roles were reversed.

Anticipating Doubts: Making the No-Surprise Review Playbook Work

Let’s name the tradeoffs out loud. You might be wondering if all this adds up—weekly 1:1s, note-taking, alignment templates. Is it really worth the time? Is this sliding into micromanagement territory? What if some managers follow through better than others, or the documentation just piles up and becomes a new headache? These aren’t hypothetical worries. I’ve bumped into every single one myself, and watched teams slow down when they’re left unaddressed.

Time always feels tight, especially in engineering. But here's the payoff: a few minutes each week sidestep hours of tense rehearsal before reviews, awkward repair after escalations, and the long wait for promotions to get "unstuck." If you measure the cost in last-minute fixes, missed signals, and growth that never compounds, the ROI is obvious.

The real trick to avoiding micromanagement? Keep it humane and standardized without being suffocating. I use a simple template for weekly 1:1s: three prompts, ten minutes. Expectations are public, and notes are visible to both sides. This moves feedback into the open and cuts the back-and-forth, turning what could be surveillance into shared clarity.

Your Move: The No-Surprise Review Playbook

When you close the loop with a no-surprise review playbook, building predictability and fairness into how you track growth, trust starts to snowball. Over time, alignment isn't something your team hopes for; it's standard. No more last-minute bombs. By next review season, you're not crossing your fingers. You're setting the pace for compounding growth, not crisis control. The system does the work, and everyone gets to focus on moving forward.

One thing I still haven't figured out is whether this approach really scales in huge organizations, with teams I don't know personally. Sometimes the process outgrows what made it work in the first place. I keep tweaking, but the tension's still there. Maybe it's just part of leading at scale.