Choose the right AI approach: Start in context, not tools

I’ll say it straight. I love ops and automation—the rush of watching a pipeline run with one click is still the best part of my job. Building systems that do what you want, when you want, without surprises? That’s what keeps me in this field. There’s something honest about seeing every piece move because you put it there. If you’re reading this, I bet you know that feeling too.

That’s why my own entry point for AI was Python and the OpenAI APIs, wire by wire. Writing code gave me the visibility and control I crave. When something broke, I could actually see why. But when you choose the right AI approach, your ideal starting point might look nothing like mine—and that’s the way it should be. Who you are, what you need, how much you want or need to control—it all matters. Not everyone should start in the deep end.

The reality is, a flood of new AI tools has made it way too easy to choose the wrong path. Pick something too advanced when you just need a quick win and you waste days fighting config. Settle for a no-code tool when you actually need traceability and you’ll hit a wall fast. I needed a system I could control, not a black box. And the biggest impact from gen AI comes when you redesign the workflow itself, not when you bolt a new tool onto the old one.

Right now, I get “How do I get started with AI?” at least once a week. My answer always hangs on the same variables: your background, your time window, where you are on the learning curve, what you can spend, and whether you’re solo or part of a team.

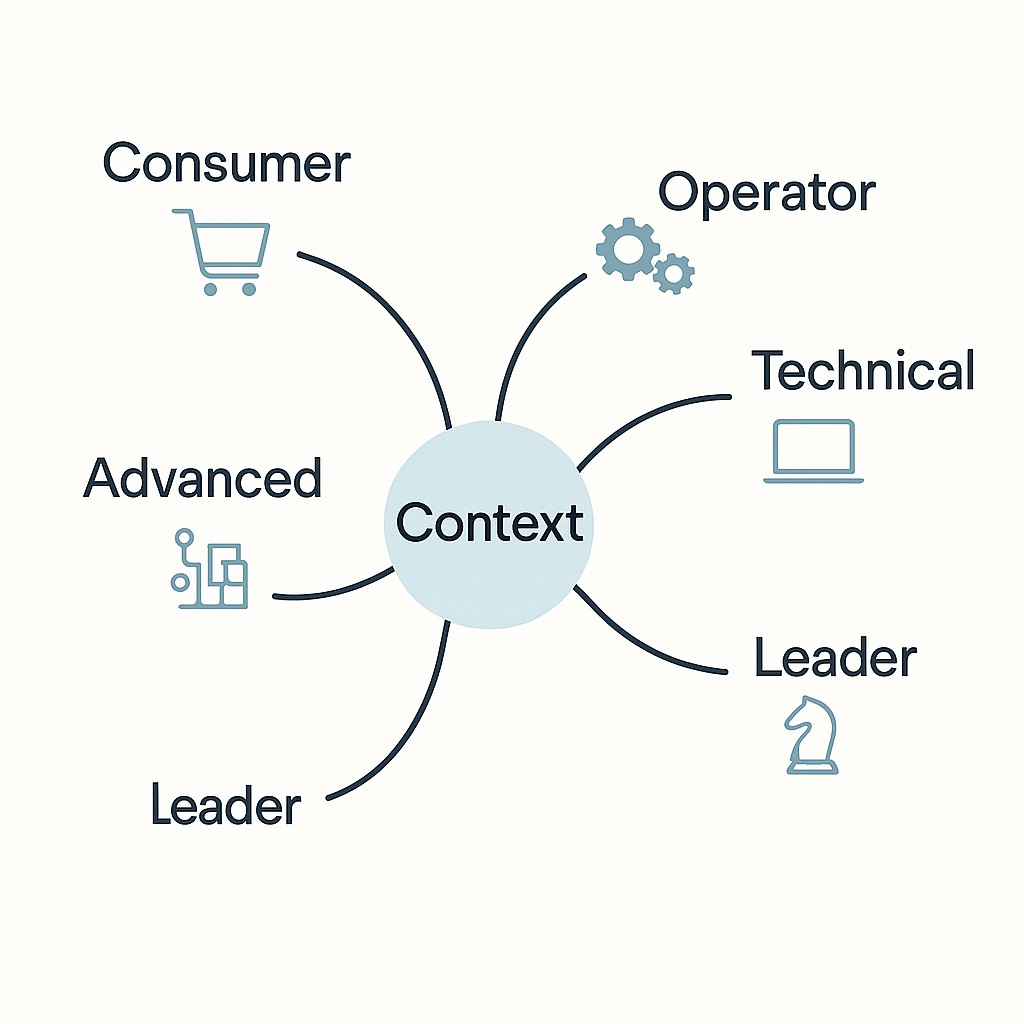

To make this easier, I’ve broken the chaos down into five lanes for right-sizing AI adoption: Consumers, Operators, Systems builders (the technical lane), Model builders (the advanced lane), and Leaders. This way, you can pick a starting track that fits your actual role and constraints. First clarify your core constraint, then move forward with way less friction.

Right-Sized Lanes for Getting Started

Let’s level with each other. Choosing your AI starting lane isn’t about technology first. It’s about knowing where you want speed, where you need control, and where your own strengths, weak spots, and constraints live. I see engineers and operators tripped up by mismatches all the time. Strategy starts from that self-assessment, not from picking trendy tools (MIT Sloan). You want practical impact, not wasted cycles on the wrong fit.

The consumer lane is for instant results with the least setup overhead. You jump in to plan trips, manage budgets, or draft emails. There’s almost zero friction, so if you’re just looking to shave time off your personal tasks, this is the way to get fast payoff and move on.

If your work revolves around repetitive team tasks (think updating docs, auto-summarizing meetings, or cleaning up copy in shared Slack threads), the operator lane probably fits. You stick to tools that plug into what your team already uses, like Notion AI, GrammarlyGO, Otter, or Microsoft Copilot, and let them do the heavy lifting for daily workflows. Here, speed wins out over customization.

Now, if you’ve got a builder’s mindset and want your hands on the controls, the technical lane is where you land. This is where AI starts to amplify developer leverage, and it isn’t just about sending an API call and hoping for the best. You start with simple scripts in Python, maybe something that updates tickets or checks data, and then step up to chaining multi-step automations once you’ve got a steady base. It’s about unlocking deeper layers, not just skimming the surface. This is where I spend most of my time: wiring together CrewAI agents, threading outputs, catching edge cases before they become problems.

Some readers are ready—or required—to go even further. The advanced lane isn’t for everyone. It kicks in when your edge case means off-the-shelf tools aren’t good enough. Here’s where you’re fine-tuning models or training your own to get exactly the control you need. Meanwhile, if you’re guiding a team or a department, you’re in the leader lane: focusing on ROI, designing guardrails, and making sure new systems really serve actual business needs. It’s about framing constraints and pilots so you get real results without spinning wheels or running unseen risks.

Every lane helps you choose the right AI approach by highlighting a different trade-off. Fast payoff, low risk. Deeper control, more effort. The point is to pick the lane that matches your goals and your reality right now. You’re not stuck—next steps are always available, and scaling up should feel natural, not forced. The trick is starting in context.

How to Pick the Right Lane (and Avoid Overengineering)

This AI adoption guide starts with four checks. What’s your role, what’s the single thing you want to accomplish, what gets in your way, and how will you know if it worked? If you’re an engineer, you might be tempted to overdefine “goal.” Think simple: automate one task, speed up one process, prove one point. Listing constraints isn’t just busywork. Maybe it’s timeline, maybe it’s IT signoff, maybe it’s your own unfamiliarity with the stack. And before you start, pick a metric you’ll actually track, whether that’s fewer manual steps, shorter turnaround time, or just getting to leave work earlier on Fridays, so you can judge both efficiency and effectiveness.

I’ll be honest. I’ve overbuilt before, chasing modularity and elegant abstractions when all I needed was a single-use script. Speed tanked, maintenance sucked, and the team ended up using an off-the-shelf tool after all.

This kind of thing reminds me of my coffee gear habit. I spent months optimizing pour-over recipes and collecting gadgets, only to realize my best cups came from paying attention to water and beans, not the fancy brewer. There’s this one grinder I bought that was supposed to change everything, and it mostly just made mornings louder. It’s easy to get lost in tooling when the core outcome is what really matters.

So here’s a shortcut: if what matters most is getting a result today, jump into the consumer or operator lanes and don’t look back. If having knobs to turn and outputs to trace is make-or-break, take the technical or advanced track. Leading a team? Your job isn’t just picking a tool, but setting the target metric and its boundaries. There’s precedent for this in risk management: the NIST AI RMF makes “Measure” one of its four core functions, alongside Govern, Map, and Manage. Align AI to goals by deciding what “good” means, then actually check it.
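The shortcut above can be written down as a tiny decision function. This is only a sketch: the function name, the flag names, and the order in which the questions are asked are my own assumptions for illustration, not a formal rubric.

```python
def pick_lane(
    leads_team: bool,
    needs_control: bool,
    off_the_shelf_falls_short: bool = False,
    team_workflow: bool = False,
) -> str:
    """Return a starting lane name (hypothetical rule of thumb, not a rubric)."""
    # Leaders own the target metric and its boundaries, so that check comes first.
    if leads_team:
        return "leader"
    # Knobs to turn and outputs to trace: technical, or advanced when
    # off-the-shelf tools are not good enough for the edge case.
    if needs_control:
        return "advanced" if off_the_shelf_falls_short else "technical"
    # Otherwise optimize for a result today.
    return "operator" if team_workflow else "consumer"


if __name__ == "__main__":
    print(pick_lane(leads_team=False, needs_control=True))
    print(pick_lane(leads_team=False, needs_control=False, team_workflow=True))
```

The point of writing it out is that each lane follows from one answer about constraints, not from a tool comparison.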

Go Small First: Pilot, Measure, Learn

Don’t try to overhaul everything at once. Pick one workflow as your first AI project, tie it to a clear metric, and commit to piloting just that before you even think about scaling. This sounds simple, but it’s the move that keeps everything grounded in reality instead of hype.

Let that pilot live with a human in the loop. If it starts moving the numbers you care about, then (and only then) scale it up. If it doesn’t, you’ve lost very little, and you’ve learned a ton.

A while back—I think it was early last year—I tried to roll out three small pilots at the same time, imagining I’d finally nail the “AI everywhere” vision. Instead, I ended up not really measuring any of them properly, and only realized weeks later that nobody on the team was using two of them. I keep reminding myself that one thing working is always better than three left in project limbo.

For consumers, this can be dead simple—spin up ChatGPT or Claude for something personal, like travel planning or drafting quick responses to email. Don’t overthink it. Just measure the time you save in a week. If you start getting 30 minutes or an hour back, you know it’s working. If it fizzles, you walk away without uninstalling any drivers or reworking systems.

If you’re running operator workflows—say, relentless meeting notes or turning calls into team reports—start by wiring in something like Otter or Microsoft Copilot. Don’t chase feature lists. Watch for two things: how long it takes from meeting to summary, and whether the error rate drops. If you see tangible change there, it’s a win.

From the technical side, I always start with a minimal Python script calling OpenAI’s APIs, then glue on another step if (and only if) the basics hold up. Don’t worry about orchestrators or exotic dependencies yet. Focus on three questions: how fast is the call, how accurate is the output, and does it run with one click or trigger? Most of my early scripts were just a few lines: a JSON call, a sanity check, then a printout to the terminal.
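Here is roughly what one of those first scripts can look like. Treat it as a minimal sketch under a few assumptions: it uses only the standard library against the Chat Completions endpoint, the model name is a placeholder for whatever you have access to, and `sanity_check` with its length limit is a hypothetical stand-in for whatever "accurate enough" means in your case.

```python
import json
import os
import time
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"
MODEL = "gpt-4o-mini"  # assumption: swap in whichever model you have access to


def build_payload(prompt: str) -> dict:
    """Build the JSON body for a single-turn chat completion request."""
    return {"model": MODEL, "messages": [{"role": "user", "content": prompt}]}


def call_model(prompt: str, api_key: str, timeout: float = 30.0) -> str:
    """POST the payload and return the text of the first choice."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(build_payload(prompt)).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


def sanity_check(text: str, max_chars: int = 2000) -> bool:
    """Hypothetical check: output is non-empty and not suspiciously long."""
    stripped = text.strip()
    return 0 < len(stripped) <= max_chars


if __name__ == "__main__":
    key = os.environ.get("OPENAI_API_KEY")
    if not key:
        print("Set OPENAI_API_KEY to run the live call.")
    else:
        start = time.perf_counter()
        answer = call_model("Summarize in one sentence: login page times out.", key)
        elapsed = time.perf_counter() - start
        print(f"ok={sanity_check(answer)} latency={elapsed:.2f}s")
        print(answer)
```

That covers the three questions in one run: the latency is printed, the sanity check gives a first read on output quality, and the whole thing starts from a single command.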

It’s tempting to leap into fancy patterns or wire up CrewAI agents, but “one thing working reliably” gets you way further. I keep a logging line at every step, so when something’s unclear I can see where the time went. More than once I’ve realized after the fact that my latency spikes weren’t the API at all. They were my own retry logic looping out.
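One way to keep retries from hiding in your latency numbers is to cap them and log every attempt. The helper below is a sketch, not the code I actually run: `run_with_retries`, its defaults, and the injectable `sleep` parameter are names I made up for illustration.

```python
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("pilot")


def run_with_retries(step, max_attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call step() up to max_attempts times, logging each attempt's duration.

    Returns (result, attempts_used). Re-raises the last error when every
    attempt fails, so a looping retry can never go unnoticed.
    """
    for attempt in range(1, max_attempts + 1):
        start = time.perf_counter()
        try:
            result = step()
        except Exception as exc:
            elapsed = time.perf_counter() - start
            log.warning("attempt %d failed after %.3fs: %s", attempt, elapsed, exc)
            if attempt == max_attempts:
                raise
            # Exponential backoff: base_delay, then 2x, then 4x, and so on.
            sleep(base_delay * 2 ** (attempt - 1))
        else:
            elapsed = time.perf_counter() - start
            log.info("attempt %d succeeded in %.3fs", attempt, elapsed)
            return result, attempt
```

Because each attempt logs its own duration and the backoff is explicit, it is easy to tell whether a slow run was the API or the retry loop. Passing a fake `sleep` also makes the helper testable without waiting.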

Every now and then, you’ll hit that barrier where out-of-the-box just isn’t enough. That’s when it makes sense to consider advanced moves: maybe fine-tuning a hosted model through a provider’s fine-tuning API such as OpenAI’s, or reaching into full-on custom training. If you get here, hold one line tight. Track genuine uplift versus increased complexity or cost. This is where the right metric matters even more, because it’s all too easy to pour resources into a solution that never actually beats your baseline.

Start small, measure for real impact, then—and only then—scale the thing that proves its value. It’s a discipline that pays off fast: you reduce risk, speed up learning, and avoid wasting cycles that could go into solving the next real problem.

Measure, Then Scale—Not the Other Way Around

The part most folks skip or rush (me included, until I got burned) is the measurement plan. Here’s how to sidestep both regret and wasted time. Choose a single metric that actually represents what matters to you or your team. It could be anything from “hours saved weekly” to “error rate dropped.” Then, time-box your pilot (two weeks, four sprints, whatever fits your reality) so you’re not stuck endlessly tuning something that’s going nowhere. Instrument the pilot so you get the data without manual busywork. Log outputs, track speed, and collect honest feedback on the AI’s output, whatever’s practical. Most importantly, when it’s done, compare results to your baseline, not just a gut feeling.
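The baseline comparison at the end can be a few lines of code. This is a sketch with made-up numbers: the 10% threshold, the `evaluate_pilot` name, and the sample timings are all assumptions you would replace with your own metric and data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class PilotResult:
    baseline_mean: float
    pilot_mean: float
    change_pct: float  # negative means the metric went down
    decision: str      # "scale" or "stop"


def evaluate_pilot(baseline, pilot, min_improvement_pct=10.0, lower_is_better=True):
    """Compare one metric before and during the pilot and return a yes/no call."""
    base, new = mean(baseline), mean(pilot)
    if base == 0:
        raise ValueError("baseline mean is zero; percent change is undefined")
    change = (new - base) / base * 100
    # For metrics like minutes per report, a drop is the improvement.
    improvement = -change if lower_is_better else change
    decision = "scale" if improvement >= min_improvement_pct else "stop"
    return PilotResult(base, new, round(change, 1), decision)


if __name__ == "__main__":
    # Illustrative data: minutes from meeting to summary, before and during the pilot.
    before = [40, 45, 50, 45]
    during = [30, 28, 35, 27]
    print(evaluate_pilot(before, during))
```

With the illustrative data, the mean drops from 45 to 30 minutes, a 33.3% reduction, which clears the assumed 10% bar. The threshold is the part worth agreeing on before the pilot starts, not after you have seen the numbers.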

Back in the “I love ops and automation” days, I used to assume if something felt faster, it must be working better. That assumption bit me more than once. Turns out, having one number to compare makes saying yes or no simple—even satisfying. If you’re worried about the time risk, this structure means your bet is de-risked: you commit only what you’re willing to lose, you get a clean answer, and you move on or scale up from there.

So, don’t overthink tools or future features. Start from your actual context, pick a pilot, and only scale when the numbers move. The best starting point with AI is the one that fits who you are and what you need. And honestly, I still haven’t figured out the perfect balance between optimizing for control and just letting lightweight tools do their thing. That tension keeps the work interesting.
