Use AI for First Drafts: Proven Workflow for Real Leverage

A New Intern with a PhD—and the Trap We Set for Ourselves

The first time I heard someone call AI “a new intern with a PhD in everything,” it got a laugh, but I couldn’t shake the dissonance in the room. Half the people were eyeing AI like it was only ever going to screw up coffee orders, while the other half looked almost relieved that nothing was about to change. That phrase stuck with me, mostly because it tried to smooth over a mess of anxieties with a punchline—and in my experience, adopting new tools is never quite that tidy.

The next time one of our builds hiccupped thanks to an AI-generated script, someone pointed at the screen and cracked, “See, this is why AI isn’t taking our jobs anytime soon.” When you hear it enough, it starts to sound less like a joke and more like an escape hatch for not trying.

I don’t buy the logic that mistakes equal uselessness, because I’ve seen messy drafts accelerate the real work. If we stop measuring success by spotless output, and start measuring it by how much leverage we get—how quickly we can move past the friction—then the game shifts. The good drafts always come after the first flawed ones.

If you want to break the blank-page paralysis, try this: supply the core inputs that actually matter and ask the AI, “Here are the key inputs I care about, now draft a version I can edit.” You’ll get something useful to react to faster, and if you no longer have to keep correcting the same hiccup on the next round, that’s progress.

Meanwhile, automation keeps chipping away at the boring stuff: compiling docs, stitching together rough plans, and quietly removing entry-level drudgery, often before a manager even writes up a job post. Most of it, you barely notice until it’s gone.

Why We Keep Underusing AI—And What Leverage Really Looks Like

Most teams trip over the same idea: that AI should “go from 0 -> 1 without human input,” as if it’s supposed to spit out the perfect answer with zero intervention. And the second it slips, people see failure instead of groundwork.

It’s a weird filter. You get an AI draft, spot a typo or a boneheaded suggestion, and boom—into the trash it goes. I’ve definitely thrown away usable drafts just because I couldn’t unsee a single wrong detail; that was on me, not the tool. The net result? Projects languish at the stare-at-the-blank-page stage while perfectly fixable drafts gather dust. Instead of saving time, we extend timelines and slow everything down.

Here’s the real comparison: you can now get five drafts in minutes, each of them with enough scaffolding that building on top takes a fraction of the original time. For engineers using Copilot, over 90% reported finishing tasks faster, often cutting work time by 20% or more with AI in the loop. Time saved on real tasks is the measure worth caring about.

This is why the job-safety jokes miss the point. This isn’t about swapping out people for flawless automation. It’s about acceleration—with us, not instead of us. You keep your agency, just shed half the wheel-spinning.

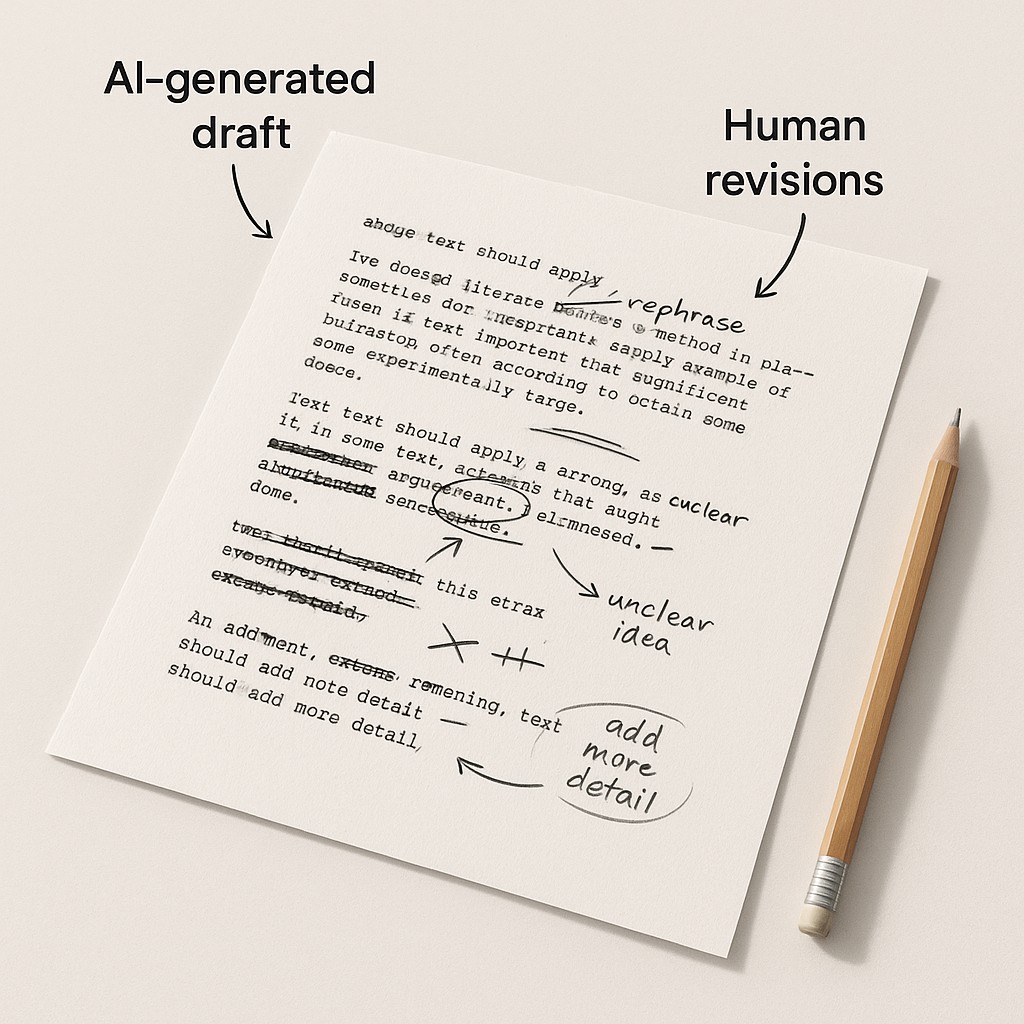

We needed to ship a product spec for a sticky backend migration. Instead of starting with a zeroed-out doc, we used AI for first drafts by feeding the main constraints and risks into it. In under five minutes, it lobbed back a draft. Sure, half of it got rewritten for clarity and edge cases, but the bones—the structure, sections, even flagged open questions—were right there. I had to admit that the slowest part—deciding the sections and sequencing the argument—was already done for me. My job shifted from architect to editor, sequencing and sharpening points, not chipping away at the outline from scratch. That’s not losing control. That’s real leverage, realized.

How to Get 0-1 Leverage from AI—Use AI for First Drafts: A Workflow That Works

Let’s cut to the chase. When you’re actually trying to get traction—not just admire AI from afar—the workflow that works looks nothing like the “one-click perfection” fantasy. You start with clear, targeted inputs (not rambling wishes), push those into the AI and get a rapid draft, but that’s just the opener. You, or your team, step in for a review check—first for structure, then for accuracy—then you refine, fix, and ship. This only works if you measure success by outcomes and time actually saved, not by error-free output.

I keep coming back to this. My definition of “done” needs review checkpoints, not just a pretty first draft. Orchestrated workflows like AWS’s, chaining toxicity checks, sentiment scans, generation, and human review, put practical guardrails on the whole process. When you flip the script this way, AI stops being a magic trick you keep secret and becomes a reliable accelerator you can measure.

Step one—inputs. Spend two minutes getting explicit within your AI drafting workflow: who’s the draft for, what constraints matter, what’s non-negotiable, and what’s the real goal? The tighter the brief, the less room for drift, and the faster you get a draft you can actually use.

Next is generation. Ask the AI for two or three structured variants, with clear headings and a quick rationale for each. That way, you’re not stuck with generic filler; in five minutes you’re choosing a track, not cycling through wild guesses.
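If it helps to see the inputs and generation steps as something concrete, here is a minimal sketch of a reusable brief. Everything in it is my own illustration: the `Brief` fields, the `build_prompt` helper, and the sample values are assumptions, not part of any particular AI tool's API. It only builds the text you would paste into whatever assistant you use.

```python
from dataclasses import dataclass, field


@dataclass
class Brief:
    """The explicit inputs from step one (field names are illustrative)."""
    audience: str
    goal: str
    constraints: list[str] = field(default_factory=list)
    non_negotiables: list[str] = field(default_factory=list)


def build_prompt(brief: Brief, variants: int = 3) -> str:
    """Turn a brief into a drafting prompt that asks for structured variants."""
    lines = [
        f"Audience: {brief.audience}",
        f"Goal: {brief.goal}",
        "Constraints:",
        *[f"- {c}" for c in brief.constraints],
        "Non-negotiables:",
        *[f"- {n}" for n in brief.non_negotiables],
        "",
        f"Draft {variants} structured variants I can edit.",
        "Give each variant clear headings and a one-line rationale.",
    ]
    return "\n".join(lines)


# Hypothetical example values, loosely based on the migration spec story.
brief = Brief(
    audience="backend engineers reviewing a migration spec",
    goal="agree on a cutover plan",
    constraints=["no downtime window longer than 5 minutes"],
    non_negotiables=["rollback path documented"],
)
print(build_prompt(brief))
```

The point of the structure is that a missing field is obvious before you hit enter, which is where most of the drift gets prevented.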

Review is the pivot. In a human-in-the-loop AI approach, do a speed pass for overall structure, then slow down to check accuracy and fit. Here’s the mindset shift: instead of staring at a blank page, you’re refining, editing, and making judgment calls. That’s agency—AI is your jumpstart, not your replacement.

If you want a mental shortcut, I keep coming back to the meal-kit analogy. You get the main ingredients prepped for you—chopped veg, measured spices—but the actual cooking still happens in your kitchen. Prepped ingredients don’t make you a chef, but they let you cook dinner with judgment and taste in far less time. (I still tweak the recipe; that’s the fun part.) And what honestly feels better after a long day—starting from scratch, or jumping to the part where real skill kicks in?

Actually, last week I misread the meal kit directions, added way too much chili, and nearly ruined dinner. The only reason it turned out edible at all was because the peppers came in a sealed bag with the correct label. Sometimes having the framework ready is what saves you from your own autopilot mistakes. Not my proudest moment, but it made me think—templates and drafts aren’t about removing errors; they’re about giving you a real chance to notice and course-correct.

Finally, you iterate where it matters and stop when ‘good enough’ is actually good enough. Tighten prompts only if the final outcome depends on it, accept useful imperfections, and track the minutes you save against the quality of what’s shipped. An imperfect draft isn’t failure. It’s a head start.

That’s the workflow I rely on, and—so far—the only route I’ve found where AI gives you meaningful leverage, rather than more busywork in disguise.

Practical Guardrails—How to Make Oversight, Error, and Quality Manageable

You might be worried that adding AI means endless oversight or more time spent double-checking than doing. I get it. I used to think the same. That changed once I started using low-friction checklists, role prompts, and straight-up acceptance criteria to bound the review phase. You want AI to hand you leverage, not eat into your day chasing elusive perfection. The value of AI isn’t perfection—it’s leverage. If your oversight can be contained to a predictable, repeatable pass—a quick cycle to check structure, sanity, and “does this meet the brief”—then you get the upside without the drag.
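To make "a predictable, repeatable pass" less abstract, here is a rough sketch of an acceptance check you could run on a draft before reading it closely. The function name `review_draft`, the required section names, and the placeholder patterns are all assumptions I picked for illustration; swap in whatever your own brief demands.

```python
import re


def review_draft(draft: str, required_sections: list[str]) -> list[str]:
    """Return a list of issues; an empty list means the draft passes this pass."""
    issues = []
    lowered = draft.lower()
    # Structure check: every required section name must appear somewhere.
    for section in required_sections:
        if section.lower() not in lowered:
            issues.append(f"missing section: {section}")
    # Sanity check: flag leftover TODO, TBD, or [bracketed fill-in] text.
    for match in re.findall(r"\bTODO\b|\bTBD\b|\[[^\]]+\]", draft):
        issues.append(f"unresolved placeholder: {match}")
    return issues


# Hypothetical draft text, made up for this example.
draft = """Overview
We migrate the orders table in two phases.

Risks
TBD

Open Questions
Who owns the rollback?"""

issues = review_draft(
    draft, ["Overview", "Risks", "Rollback Plan", "Open Questions"]
)
for issue in issues:
    print(issue)
```

A check like this will not catch a wrong claim, and the bracket pattern would also flag things like markdown links. What it does is keep the structural part of the review to seconds, so your attention goes to accuracy and fit.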

Error rates still spark worry, but there are straightforward ways to keep them from snowballing. Constrain the domain, force the AI to stay inside what you actually know well. Toss in linters and tests for sanity checks, and require the AI to justify its choices. Basic “why did you do this?” prompts are surprisingly effective. Most error scans take seconds once you know your patterns; that used to take me an hour.

Quality is the stickiest question. If you’re skeptical, run A/B tests: compare AI-assisted drafts to your baseline, track how much editing you do, and ask your reviewers how drained they feel at the end. Keep what clearly outperforms and ditch what doesn’t; no ideology, just results. Think back to that first product spec draft: even though half of it was rewritten, the slowest part, structuring from zero, was skipped, and that saved major time. The real measure is how much faster you ship good work, not how “algorithmic” it feels. If the draft saves meaningful energy, that’s the win.
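One rough way to "track how much editing you do" is to compare the AI draft with what you finally shipped. The sketch below uses Python's standard `difflib.SequenceMatcher` on word lists; the `retained_ratio` name is mine, and a word-level similarity score is only a proxy I am assuming is good enough, not a validated quality metric.

```python
import difflib


def retained_ratio(draft: str, final: str) -> float:
    """Word-level similarity between draft and final text.

    Returns a value from 0.0 (nothing in common) to 1.0 (identical).
    """
    draft_words = draft.split()
    final_words = final.split()
    return difflib.SequenceMatcher(None, draft_words, final_words).ratio()


# Hypothetical sentences, made up for this example.
ai_draft = "Phase one copies the orders table to the new cluster"
shipped = "Phase one copies the orders table to the replica cluster"
print(round(retained_ratio(ai_draft, shipped), 2))
```

A low score does not mean the draft was useless. As with the product spec, the structure can survive even when most sentences get rewritten, so read this number alongside the time you saved rather than instead of it.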

I’ll admit, I haven’t totally settled on my own minimum bar for “good enough.” Sometimes, the urge to keep tinkering wins out, and other days I let things ship a bit rough. It probably balances out over time.

Make It Work—Apply the Leverage

Let’s get concrete. Whether you’re staring down sprint planning, triaging bugs, or drafting up release notes, the leverage-first workflow boils down to simple moves: capture the real inputs (not just “make this better”), hand them to the AI to spin up some viable options, check the results for truth, and ship what passes muster. You’re measuring minutes saved and the tangible uptick in quality against hours you used to burn noodling through versions.

The real win with AI isn’t perfection—it’s leverage that turns small inputs into big outcomes. And here’s what grounds the whole shift: Many enterprise-wide AI rollouts have spent 10% of capital for just 5.9% ROI—proof that tracking actual outcomes, not activity, is non-negotiable. Fancy demos don’t cut it; returns you can measure do.

Here’s your starter kit. Map out what goes in (the inputs that really shape the task), ask the AI for clear options (extra points for variety), review using a tight checklist, iterate twice—don’t let it sprawl—and ship. Put a simple dashboard up for time saved and what actually got better; you’ll know if you’re getting leverage almost immediately.
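The "simple dashboard" can start as a few lines of arithmetic. This sketch assumes you log how many minutes comparable tasks took with and without an AI first draft; the `minutes_saved` helper and the numbers below are invented for illustration, not measurements.

```python
from statistics import mean


def minutes_saved(baseline_minutes: list[float], assisted_minutes: list[float]) -> dict:
    """Compare average task time without and with an AI first draft."""
    base = mean(baseline_minutes)
    assisted = mean(assisted_minutes)
    saved = base - assisted
    return {
        "baseline_avg": base,
        "assisted_avg": assisted,
        "saved_avg": saved,
        "saved_pct": round(100 * saved / base, 1),
    }


# Made-up log: minutes spent on three comparable specs, each way.
summary = minutes_saved([90, 120, 60], [60, 75, 45])
print(summary)
```

Three data points will not settle anything, so treat early numbers as a direction rather than a verdict, and pair them with what reviewers say about quality.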

Skip the blank page—give your goals, constraints, and audience, get structured drafts you can edit fast, and measure minutes saved instead of polishing errors; start generating AI-powered content you actually ship.

So let’s stop chasing spotless drafts and start shipping faster—judge AI by outcomes, not error counts. Shift to leverage now, and let your calendar show the difference.
