Build Reliable LLM Systems: From Prompts to Production Reliability

Build Reliable LLM Systems: From Prompts to Production Reliability

When “Good Enough” Falls Apart

The first time I dropped my carefully tuned support-bot prompt into an actual workflow, it broke in about five different directions at once. Suddenly every edge case, weird input, and latency spike was staring back at me. The demo was slick. Backstage, chaos.

When I started coding, my job was to make things work, write code until it ran, then move on. That mindset used to carry me through. Now, things are messier. It’s not enough to just make it run and call it a day.

At first, I was tweaking phrasing to improve responses—like writing clean, readable functions—but the real shift was learning to build reliable LLM systems. That kind of optimization looks good on a sample request but doesn’t get you far when real users start hammering the system.

Everything changed when my prompts became part of systems—driving tools, agents, or entire support flows. Suddenly we were talking about cost per request, latency SLAs, reliability under pressure, and attacks that turned clever prompts into liabilities. That’s where most “good demos” collapse. What works once stumbles fast under real load.

That’s no longer prompt writing. That’s prompt engineering. And it takes a system, not just a sentence, to make it work at scale.

From Clever Prompt to Reliable System

Let’s put a fine point on it. Prompt design and prompt engineering aren’t the same skill, but you can’t separate them. Building strong AI features takes both. When we start talking about measurable objectives—things like reliability, cost, and latency—we get a shared language, and suddenly everyone rows together.

LLM system reliability isn’t about how good your prompt looks on a slide. It’s how predictably it performs when inputs get weird, usage ramps up, or people try to break it on purpose. I think of it as “consistency under stress.” Cost isn’t just OpenAI billing at the end of the month. It’s the token budget you’re burning per request. And latency? That’s the user’s wait time. The numbers are real. For direct control, the limit’s 0.1 seconds; for seamless navigation, aim for 1 second. Miss those marks and users notice. The work here isn’t abstract. It’s connecting prompts to objectives you can actually measure.

Six months ago, I would have told you better wording sometimes helps, and like a lot of folks, I used to think writing code made me a software engineer. But shipping something robust without clear system objectives? That’s how you end up with a fragile demo, one that folds the moment it leaves your laptop.

Do this. Write a one-pager with the accuracy targets, token ceilings, and 95th-percentile latency you’re willing to stand behind. Track p95 or p99, not just average cases—tail behaviors are what break user trust, and that’s what real reliability means. Cost, speed, reliability at scale. That’s the work.

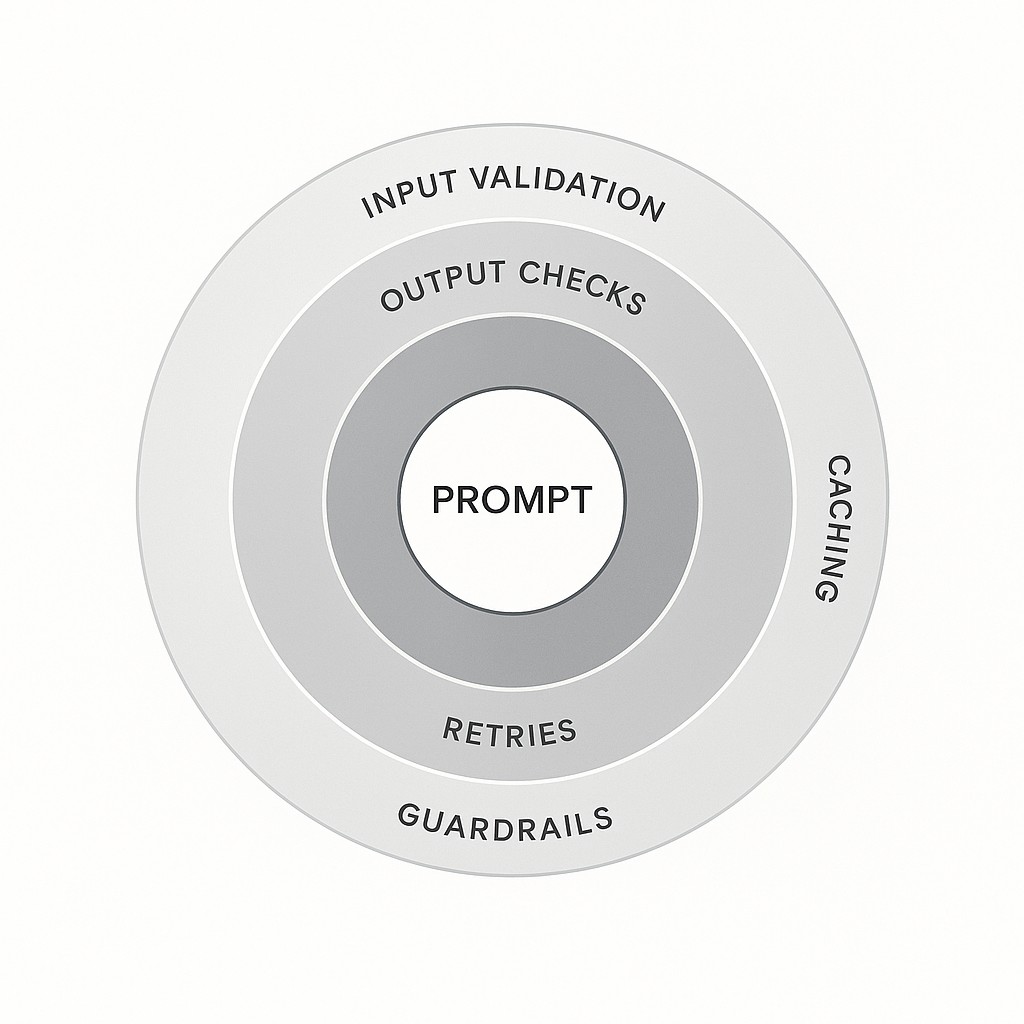

System Patterns to Build Reliable LLM Systems

Here’s the real shift. A well-written prompt gets you an answer, but prompt engineering means treating your prompt as just one component inside a system. The prompt takes in structured input, returns candidate answers, and then your system has work to do. You need to verify, route, and refine those answers before anyone sees them. It’s not about the cleverness of one prompt. It’s about the scaffolding around it that carries real load and catches errors when things get strange.

You don’t put unfiltered outputs in front of users. Start with input and output validation. Run schema checks, use type guards, and enforce content filters so that what goes in and comes out is exactly what you expect. That’s your first defense against things like injection attacks and accidental nonsense. Make your boundaries airtight before you think about sophistication.

Next up, how to build reliable LLM systems with caching and smart retries. When you’ve got a known-good response—a call that’s been verified and works for a repeated input—cache it and save yourself tokens, time, and frustration. But when the model sputters (timeout, weird output, or a subtle failure), don’t hammer it blindly. Retry with modified parameters, or swap to a fallback model. It’s a bit like dealing with flaky hardware. Sometimes a nudge makes it behave, sometimes you need to pick a new part. Now, you’ve got two knobs for stability. One for latency (hit the cache, skip compute), and one for cost (don’t burn tokens on repeated work). Once you start layering these patterns, your system gets steadier and your budget is under control.

Chaining is your friend when the task is complicated. Break the work into clear steps and checkpoint progress, and if something partially succeeds, pick up from where it left off instead of resetting the entire workflow. It’s less dramatic than restarting everything and gives you a way to isolate failure points. Clean, simple, and reliable.

There’s this weird thing I noticed the other day. I was waiting for an automated espresso machine at a café near my place—the sort with a little screen and a robot arm grinding beans. It looked futuristic, like something out of an ad.

But it got stuck halfway through a cappuccino and just froze. Everyone stared, someone muttered about software updates, and eventually the barista had to reach behind the fancy panel and unplug the whole thing. The robot arm jerked back to life, half a cup in hand, foam everywhere. Watching that mess, it hit me how easy it is to trust a demo until it’s stopped by an edge case you never modeled. Systems break in public, not in your cozy test setup.

And don’t ignore adversarial inputs or outright abuse. You’ve got to layer on LLM guardrails—rate limits, anomaly detection, system instructions that spell out exactly what the model should and shouldn’t do. Spend real effort here, not just on hopes. Mitigate prompt injection by spelling out the model’s purpose, limits, and capabilities in your system instructions—real guardrails, not just hopes. That’s the backbone protecting your users at scale.

These patterns aren’t over-engineering. They’re what make your clever prompt work every time, not just once. Treat prompts as components, build the guardrails, check the edges, and pressure-test the system to achieve production LLM reliability. That’s how you cross the line from “demo” to “production.” And it’s worth every hour you spend.

Operationalizing Reliability: Budgets, Policies, and Handling Failure

Reliability targets are only as good as the actual system limits you put in place. I started seeing progress when we stopped treating every LLM request like a blank check. Put hard caps. Budget tokens per request, not just per month. Route requests to different models depending on the job.

Use the cheap, fast one for boilerplate; reserve the spendier, smarter model for complex questions. Tuning temperature down isn’t glamorous, but it trades some creativity for stable, repeatable outputs—especially important when you’re chasing predictable behavior. These aren’t big changes. Just small knobs you set early that keep costs and latency in line before they balloon out of control. If you’re still adjusting everything for “best possible answer,” try aiming for “good answer that’s always on time and within budget.” You might be surprised how much stress you save.

Now here’s where things get practical. Failure is inevitable, so your system needs to handle it smoothly. If an LLM output looks off—wrong format, missing keywords, or just plain nonsense—don’t just mash retry. Instead, retry with a tighter prompt (think stricter instructions or simpler asks). If you hit a bottleneck, automatically switch to a fallback model that’s more deterministic or cheaper. For critical tasks (like compliance checks or sending money), escalate to a non-LLM tool if possible. Sometimes rules-based is best. I’ll be honest, I used to think smart retries were overkill, but they’ve saved us countless headaches and dollars. ‘Retry smartly’ is almost boring advice, but in my experience, boring is what keeps systems alive.

LLM monitoring and validation matter as much as coding. Build an evaluation harness that runs golden datasets—real requests with known good answers—against new updates before launch. Throw in synthetic edge cases and worst-case “red team” scenarios. That’s where most software breaks, AI included. Track results. Pass rates, cost per successful resolution, and especially tail latency (how slow is your slowest 1%?). Compare these stats against your actual SLOs. Don’t eyeball it, measure it. Once we started doing this, I found a lot fewer surprises in production, and when the numbers drifted, we could tune before users complained.

Don’t let the team get stuck guessing. Agree on reliability “gates”—the specific numbers you’ll hit—before launch. Ship in phases, harden the system based on what actually fails once real data flows in. That’s how you move fast and still end up durable.

Use our AI writer to turn rough notes into clear drafts, summaries, and outlines, fast, so you can focus on building while it handles tone, structure, and formatting.

Making Prompts Durable: Pragmatism Over Perfection

Start small—really. If you’re worried about overengineering, just add the basics. Validation so you catch nonsense early, minimal filters for obvious bad inputs, and a cache for repeat requests. That’s usually enough to keep things sane for your first few users. You’ll see where it actually cracks once telemetry shows you real failures; then layer in retries and routing only when patterns start falling apart. No one says “ship the whole kitchen sink.” Just cover the gaps that show up.

Here’s the thing. All those tweaks I once obsessed over? They never made outputs actually reliable. What did was recentering on engineering fundamentals. Reliable AI system design only shows up when you name constraints clearly and design for resilience, not when you chase perfect wording. It was about tradeoffs, constraints, clarity, and long-term resilience. Back then, I thought the cleverest prompt would be enough. Eventually, I learned it’s just one lever among many; most stability comes from how you handle faults and uncertainty, not how poetic your prompt sounds.

Some days, I still catch myself tinkering with a prompt, convinced the next edit will fix that last unpredictable edge case. Maybe it will, maybe not—I’m not entirely sure yet. There’s always another compromise to weigh, another failure mode to hunt for. You solve one thing, two new stumbles show up. I’m learning to live with that.

So let me leave you with this. Prompts matter, but systems make them trustworthy. If you design for objectives from day one, you turn a neat demo into a durable feature—one that protects your users and reins in spend. That’s the path forward.

Enjoyed this post? For more insights on engineering leadership, mindful productivity, and navigating the modern workday, follow me on LinkedIn to stay inspired and join the conversation.