Build production-ready AI foundations that last

The Week I Spent Fighting Azure (Instead of Building AI)

Last week should have been about building on our AI foundations and refining model logic. Most of it went down the drain of deprecated Azure services. I kept wanting to ship agentic features, iterate, and actually push the product forward; instead, I was heads-down in plumbing that had quietly rotted out underneath us. Nothing like searching for half-baked migration docs at 10pm to make you question your priorities. None of this was on my calendar. I kept thinking: this is time I’ll never get back on the actual product.

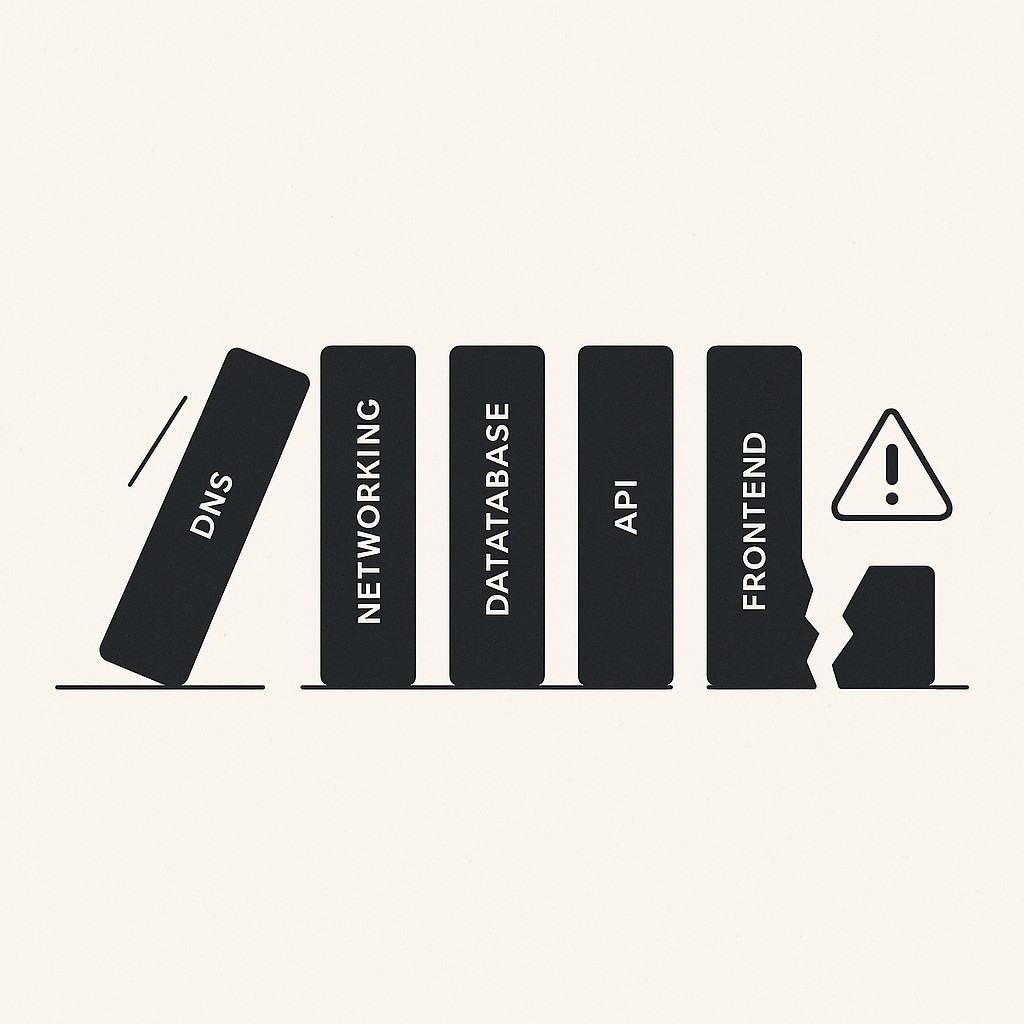

That detour meant rebuilding our entire domain routing from scratch just to restore production traffic. DNS changes aren’t glamorous. Break those and your “intelligent system” doesn’t even exist to the world. Make one shaky infrastructure choice, and the ripple echoes through every layer. Sometimes it’s not even a ripple—more like a domino line straight to whatever’s most visible.

The little stuff tripped me up too. At one point the whole workflow bottlenecked on a stubborn bit of CSS padding. One pixel off, in the wrong spot, and nobody gets their hands on the new feature. That’s the kind of thing I used to ignore, back when I assumed patches were quick and easy. You’ve probably hit the same wall: suddenly a “tiny” detail is the main thing blocking launch.

Honestly, we rushed the first demos. Everything looked fine when it was just us: piecemeal integrations with just enough “AI” gloss to show off. The moment live users started hammering those LLM endpoints, everything started fracturing. Connections dropped, inputs got misrouted, and output quality degraded as weird edge cases multiplied. That shortcut seemed harmless until it wrecked the rollout. When you skip building real foundations, it always boomerangs.

Here’s my actual ratio: I spend 90% of my time on classic, boring engineering. The magic only works when you sweat the craft. It’s not just me: engineers spend 39% of their time on data preparation and cleansing, more than all their model work combined. If you want durable impact and systems that don’t fall over, you grind on the fundamentals even when nobody’s cheering.

AI Strengthens—or Exposes—Your Engineering Rigor

Sophisticated models don’t replace discipline. They spotlight its absence.

Shipping an AI feature is like dropping a supercharged race car onto the road you’ve paved inside your product: your engineering foundations. If you’ve laid down real infrastructure (smooth lanes, guardrails, even traffic lights), it’ll fly. Skip the hard work and hold it together with duct tape, and the first burst of speed pulls the whole thing apart. Infrastructure scales, duct tape snaps. I still flinch when I see a clever hack in production, knowing the reckoning isn’t far off.

In AI product architecture, data is the gravitational center that pulls everything together. Schemas, tables, backups, lineage: none of it is just paperwork. These things decide whether your AI’s answers make sense on day one, and whether they keep making sense a thousand requests later. I’ve watched teammates invent clever model tricks, only for a messy data pipeline or an unexplained grain mismatch in a SQL query to torpedo everything downstream. Tables and backups define reliability. Ignore lineage, and you’ll be hunting phantom bugs weeks later, sometimes with no clue where the trail even started.
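
A grain problem usually means a table no longer has exactly one row per key, often because a join fanned rows out. Here is a minimal sketch of a grain check, assuming rows arrive as Python dicts and the intended grain is declared as a tuple of key columns. The `check_grain` name and the sample columns are hypothetical, not taken from our stack.

```python
from collections import Counter
from typing import Iterable, Mapping, Sequence


def check_grain(rows: Iterable[Mapping[str, object]], grain: Sequence[str]) -> dict:
    """Return grain keys that appear more than once, with their counts.

    An empty result means the rows honor the declared grain
    (exactly one row per combination of `grain` columns).
    """
    counts = Counter(tuple(row[col] for col in grain) for row in rows)
    return {key: n for key, n in counts.items() if n > 1}


# Hypothetical data: the intended grain is one row per (customer_id, order_date),
# but a join against a multi-row address table duplicated customer 2's order.
orders = [
    {"customer_id": 1, "order_date": "2024-05-01", "total": 40},
    {"customer_id": 2, "order_date": "2024-05-01", "total": 15},
    {"customer_id": 2, "order_date": "2024-05-01", "total": 15},
]

duplicates = check_grain(orders, grain=("customer_id", "order_date"))
if duplicates:
    print(f"Grain violated: {duplicates}")
```

Running a check like this right after each join is cheap, and it catches the fan-out before a downstream sum quietly double-counts.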

Operational readiness keeps latent fires from becoming outages. Observability and health checks are not luxuries; they’re how you catch failures before users do. Infrastructure-as-code makes your environments reproducible: fix a bug once, and it’s fixed everywhere, not just on your laptop. Serverless feels like magic, but it never excuses sloppy ops. Your platform is alive, and the only way it keeps working is if you keep patching it and watching under the hood.
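
Baseline observability can start very small. The sketch below wraps a function so every call emits one structured JSON log line with latency and outcome, and bumps call and error counters. It assumes an in-memory `Counter` as a stand-in for a real metrics backend; the `observed` decorator and the `summarize` function are hypothetical.

```python
import functools
import json
import logging
import time
from collections import Counter

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("app")

# In-memory counters stand in for a real metrics backend (an assumption for this sketch).
metrics: Counter = Counter()


def observed(name: str):
    """Log one structured JSON line per call with latency and outcome; count calls and errors."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            outcome = "ok"
            try:
                return func(*args, **kwargs)
            except Exception:
                outcome = "error"
                metrics[f"{name}.errors"] += 1
                raise  # never swallow the failure; observability only records it
            finally:
                elapsed_ms = (time.perf_counter() - start) * 1000
                metrics[f"{name}.calls"] += 1
                log.info(json.dumps({"op": name, "outcome": outcome,
                                     "latency_ms": round(elapsed_ms, 2)}))
        return wrapper
    return decorator


@observed("summarize")
def summarize(text: str) -> str:
    # Hypothetical stand-in for an LLM call.
    if not text:
        raise ValueError("empty input")
    return text[:20]


summarize("Observability is not a luxury.")
try:
    summarize("")
except ValueError:
    pass
```

JSON lines are easy to ship to whatever log pipeline you already run, and the error counter gives you something to alert on before users notice.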

Don’t downplay UX craft. Micro-interactions matter: a loading spinner in the wrong spot or a misaligned grid shapes trust right alongside model outputs. Last week, chasing down that annoying UI spacing issue took longer than the whole Azure adventure (somehow it always does). The numbers point the same way: just 6% actually use AI for proto-personas, and only 0.5% for journey creation, which suggests the everyday UX basics still depend on careful human craft rather than shiny model features. Cutting-edge AI only shines when the foundation is solid. If the interface is messy, all that intelligence gets lost before anyone notices it.

This is the unsentimental part of agentic product building. Not hype, just the checklist. Ship on a solid base, or your AI ends up as a neat little demo that can’t survive the day-to-day grind. Discipline now means impact later.

Ship AI on Discipline: The Practical Checklist

If you want your agentic platform to last, start with a foundations checklist and treat it as a sprint gate, not an optional step. Sprint one isn’t just model architecture or slick demos. The basics go in from day one, before anyone’s wowed by outputs.

A production-grade AI checklist starts with data integrity. Define schemas at the start, and enforce their constraints like uptime depends on it, because it does. Set up backups and run restore drills so you know your recovery plan isn’t fantasy. Track lineage: know where every bit of data comes from and how it flows. Quality checks belong in every pipeline. Data Gravity Is Real: your whole stack gets pulled wherever the messiest data lands.
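
To make "schemas plus quality checks in every pipeline" concrete, here is a minimal stdlib-only sketch: each field declares a type, whether it is required, and an optional constraint, and a quality gate rejects bad rows and fails the batch if too many are rejected. The `Field`, `validate`, and `quality_gate` names, the prompt-log schema, and the error-rate threshold are all hypothetical; in practice a schema library or database constraints would do much of this.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass(frozen=True)
class Field:
    name: str
    type_: type
    required: bool = True
    check: Optional[Callable[[Any], bool]] = None  # extra constraint beyond the type


def validate(record: dict, schema: list) -> list:
    """Return human-readable violations; an empty list means the record is valid."""
    errors = []
    for f in schema:
        if f.name not in record or record[f.name] is None:
            if f.required:
                errors.append(f"{f.name}: missing")
            continue
        value = record[f.name]
        if not isinstance(value, f.type_):
            errors.append(f"{f.name}: expected {f.type_.__name__}, got {type(value).__name__}")
        elif f.check is not None and not f.check(value):
            errors.append(f"{f.name}: failed constraint")
    unknown = set(record) - {f.name for f in schema}
    if unknown:
        errors.append(f"unexpected fields: {sorted(unknown)}")
    return errors


def quality_gate(records: list, schema: list, max_error_rate: float = 0.0):
    """Split records into valid and rejected; raise if the rejection rate exceeds the threshold."""
    valid, rejected = [], []
    for record in records:
        problems = validate(record, schema)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    if records and len(rejected) / len(records) > max_error_rate:
        raise ValueError(f"{len(rejected)}/{len(records)} records failed validation")
    return valid, rejected


# Hypothetical schema for a prompt-log table.
PROMPT_LOG_SCHEMA = [
    Field("request_id", str),
    Field("tokens", int, check=lambda n: n >= 0),
    Field("model", str),
    Field("user_id", str, required=False),
]

rows = [
    {"request_id": "a1", "tokens": 512, "model": "m-1"},
    {"request_id": "a2", "tokens": -3, "model": "m-1"},
]
valid, rejected = quality_gate(rows, PROMPT_LOG_SCHEMA, max_error_rate=0.5)
```

Keeping the rejected rows together with their reasons, instead of silently dropping them, is what lets you trace a bad answer back to the bad input later.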

For operational readiness, require dev containers for every service so you aren’t hunting dependencies at 2am. Baseline observability is a must—logs, metrics, whatever lets you see what’s really happening in production. Health checks go in first, not later. Bake in a reproducible deployment path from the start. Serverless or traditional, doesn’t matter. Consistency wins over improvisation every time you scale.
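
A health check that goes in first can be as small as this sketch: every dependency check runs, any crash counts as failing, and the endpoint returns 200 only when everything is green, otherwise 503. It uses only Python’s standard `http.server`; the `/healthz` path, the `CHECKS` placeholders, and the port are assumptions, and real checks would ping your database or model endpoint.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Callable, Dict

# Hypothetical placeholder checks; each returns True when its dependency is usable.
CHECKS: Dict[str, Callable[[], bool]] = {
    "database": lambda: True,
    "model_endpoint": lambda: True,
}


def run_health_checks(checks: Dict[str, Callable[[], bool]]):
    """Run every check and return (HTTP status, JSON-serializable body)."""
    results = {}
    for name, check in checks.items():
        try:
            results[name] = "ok" if check() else "failing"
        except Exception as exc:  # a crashing check counts as failing, not as healthy
            results[name] = f"error: {exc}"
    healthy = all(v == "ok" for v in results.values())
    return (200 if healthy else 503), {"status": "ok" if healthy else "degraded", "checks": results}


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/healthz":
            self.send_error(404)
            return
        status, body = run_health_checks(CHECKS)
        payload = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8080) -> None:
    """Block forever serving /healthz on localhost (call this from your entry point)."""
    HTTPServer(("127.0.0.1", port), HealthHandler).serve_forever()
```

Returning 503 rather than 200 with an error body matters: load balancers and orchestrators typically key off the status code, not the payload.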

Secure AI product design starts with security hygiene. Not glamorous, but non-negotiable. Apply least privilege: nobody gets more access than their role strictly requires. Rotate secrets, and actually automate it. Audit logs everywhere. Run threat modeling before launch. Breaches aren’t swayed by your roadmap, and the cost of waiting is always bigger than it looks in sprint planning. I’ll admit I still try to skip steps when the pressure is on, and I keep paying for it. I haven’t cracked how to balance that perfectly. Sometimes speed wins the argument in my head, only for regret to show up later.
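
Two of those habits fit in a few lines. The sketch below shows deny-by-default authorization that writes every decision to an audit log, plus a check that lists secrets older than a rotation window so a scheduled job can rotate them. The role map, permission strings, 30-day policy, and secret names are hypothetical; a real system would lean on your cloud provider’s IAM and secret store rather than a dict.

```python
import logging
from datetime import datetime, timedelta, timezone

logging.basicConfig(level=logging.INFO, format="%(name)s %(message)s")
audit_log = logging.getLogger("audit")

# Hypothetical role-to-permission map. Anything not listed is denied by default.
ROLE_PERMISSIONS = {
    "analyst": {"read:reports"},
    "ml_engineer": {"read:reports", "deploy:model"},
    "admin": {"read:reports", "deploy:model", "rotate:secrets"},
}


def authorize(user: str, role: str, permission: str) -> bool:
    """Deny by default; record every decision, allowed or not, in the audit log."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    audit_log.info("user=%s role=%s permission=%s allowed=%s", user, role, permission, allowed)
    return allowed


MAX_SECRET_AGE = timedelta(days=30)  # assumed rotation policy


def secrets_due_for_rotation(last_rotated: dict, now: datetime) -> list:
    """Return names of secrets older than MAX_SECRET_AGE, sorted for stable output."""
    return sorted(name for name, ts in last_rotated.items() if now - ts > MAX_SECRET_AGE)


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
last_rotated = {
    "llm_api_key": now - timedelta(days=45),
    "db_password": now - timedelta(days=5),
}
due = secrets_due_for_rotation(last_rotated, now)
```

Logging denials as well as grants is deliberate: repeated denials are often the first sign that a role is too narrow or that someone is probing.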

Don’t overlook the UX polish gates. Define micro-interactions, set layout standards, fix spacing. Six months ago I shrugged off CSS padding, thinking “no one’s going to care.” I ended up reworking a key feature after launch because that off-by-one gap blocked half our users. The smallest details are the ones that force MacGyver-style debugging every time.
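
One way to turn “set layout standards, fix spacing” into a gate is a tiny lint that flags padding and margin pixel values that aren’t on your spacing scale. This is a rough regex-based sketch, not a CSS parser, and the 4px-based `SPACING_SCALE_PX` is a hypothetical scale; swap in your design system’s tokens, or use a proper stylelint rule if you already run one.

```python
import re

# Hypothetical spacing scale; replace with your design system's tokens.
SPACING_SCALE_PX = {0, 4, 8, 12, 16, 24, 32, 48, 64}

# Matches declarations such as "padding: 8px 13px" or "margin-top: 7px", but not
# "scroll-padding" (the lookbehind rejects a preceding word character or hyphen).
DECL = re.compile(r"(?<![\w-])((?:padding|margin)(?:-(?:top|right|bottom|left))?)\s*:\s*([^;}]+)")
PX = re.compile(r"(-?\d+(?:\.\d+)?)px")


def off_scale_spacing(css: str) -> list:
    """Return (property, value_px) pairs whose pixel values are not on the spacing scale."""
    problems = []
    for match in DECL.finditer(css):
        prop, values = match.group(1), match.group(2)
        for value in PX.findall(values):
            px = float(value)
            if abs(px) not in SPACING_SCALE_PX:  # negative margins are checked by magnitude
                problems.append((prop, px))
    return problems


css = ".card { padding: 8px 13px; margin-top: 16px; } .hero { margin: -7px 0; }"
issues = off_scale_spacing(css)
```

Wired into CI, a check like this catches the one-pixel drift before review instead of after launch.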

So don’t leave foundational discipline for later. Ship on the checklist from sprint one. It’s slower at first, but it’s the only way the AI you build becomes a product instead of another brittle demo waiting to crack.

How to Use the Checklist Without Slowing You Down

I know what you’re worried about. “If we stop for every foundation check, won’t we lose velocity?” But last week’s lost sprint fighting Azure breakage is the tax you pay for deferring engineering discipline. Skipping the basics feels fast until you’re sweating through midnight fixes.

Here’s the pragmatic fix. Checklist items become sprint acceptance criteria, not nice-to-have chores stuffed low in the backlog. Every story passes through the foundation gate—data integrity, operational readiness, secure access, clean UX—before it’s allowed up the pipeline. That’s how you avoid demo drift. The code that ships matches what users saw in the demo. No hidden cracks. You keep velocity because the basics aren’t afterthoughts, they’re baked into every move.
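
Treating the checklist as acceptance criteria can be as literal as a function your CI or board automation calls before a story moves forward. This sketch encodes the four gates named above; the individual item names under each gate, the `Story` type, and `gate_failures` are hypothetical examples, not a real tool’s API.

```python
from dataclasses import dataclass, field

# The four gates from the checklist; the item names under each are hypothetical.
FOUNDATION_GATE = {
    "data_integrity": ["schema defined", "quality checks in pipeline"],
    "operational_readiness": ["health check", "dashboards and alerts"],
    "secure_access": ["least-privilege roles", "secrets in vault"],
    "clean_ux": ["spacing on scale", "loading states defined"],
}


@dataclass
class Story:
    title: str
    done: set = field(default_factory=set)  # checklist items this story has satisfied


def gate_failures(story: Story) -> dict:
    """Return unmet checklist items per gate; an empty dict means the story may move up the pipeline."""
    missing = {}
    for gate, items in FOUNDATION_GATE.items():
        unmet = [item for item in items if item not in story.done]
        if unmet:
            missing[gate] = unmet
    return missing


ALL_ITEMS = {item for items in FOUNDATION_GATE.values() for item in items}
story = Story("Agentic summary panel", done=ALL_ITEMS - {"loading states defined"})
blocked_on = gate_failures(story)
```

Reporting exactly which items are missing keeps the gate from feeling like bureaucracy: the story isn’t “rejected,” it has two specific things left.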

Here’s how it actually looks. First day of the sprint, we rebuilt DNS with infrastructure-as-code. Env setup got repeatable and safe. After that: live health checks, monitoring endpoints. Only then did we start plugging in models, and only because the plumbing was green. No skipping steps.
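
The post doesn’t name the infrastructure-as-code tool we used, so here is a toy sketch of the idea that makes it repeatable and safe: declare the desired DNS records, diff them against what exists, and apply only the difference, the same plan-then-apply model tools like Terraform use. Records keyed by `(name, type)`, the `plan`/`apply` helpers, and the `example.com` hostnames are all hypothetical; `apply` just edits a dict where a real tool would call your DNS provider.

```python
from typing import Dict, List, Tuple

# A DNS record is keyed by (name, record_type); the value is its target.
Record = Tuple[str, str]


def plan(desired: Dict[Record, str], current: Dict[Record, str]) -> List[tuple]:
    """Compute the changes that would make `current` match `desired`."""
    changes = []
    for key in sorted(desired.keys() | current.keys()):
        want, have = desired.get(key), current.get(key)
        if have is None:
            changes.append(("create", key, want))
        elif want is None:
            changes.append(("delete", key, have))
        elif want != have:
            changes.append(("update", key, want))
    return changes


def apply(changes: List[tuple], current: Dict[Record, str]) -> Dict[Record, str]:
    """Apply a plan to a copy of `current` (stand-in for a DNS provider API)."""
    result = dict(current)
    for action, key, value in changes:
        if action == "delete":
            result.pop(key, None)
        else:
            result[key] = value
    return result


desired = {
    ("app.example.com", "CNAME"): "app-prod.example.net",
    ("api.example.com", "CNAME"): "api-prod.example.net",
}
current = {
    ("app.example.com", "CNAME"): "old-frontdoor.example.net",
    ("legacy.example.com", "CNAME"): "old.example.net",
}
changes = plan(desired, current)
after = apply(changes, current)
```

The property that matters is idempotence: once applied, planning again yields no changes, so rerunning the same definition in another environment converges instead of drifting.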

That’s what building an AI product really looks like behind the scenes. This discipline isn’t extra. It’s how you actually build things that hang together.

Build on What Lasts (Not Just What Wows)

Bake the pre-launch checklist right into sprint planning. Remember—cutting-edge AI only shines when production-ready AI foundations are solid.

The headline feature might be AI, but the headline risk—and most of the effort—is disciplined engineering, iteration after iteration. That’s where rigor wins, every time.

Write the checklist. Name the gates. Post them. Data Gravity Is Real. Infrastructure scales, duct tape snaps. Commit to a base that won’t break. Start today.

And somewhere in the middle of sprint three, when I’m chasing down another misaligned grid or wrestling with a half-documented Azure API, I’ll probably still grumble about the pace. But I know this is how products get built—by living the checklist, not just admiring the demo.

Sometimes I still blow past a step, thinking I’ll come back. Usually, I don’t. I haven’t figured out how to prevent that urge entirely. Maybe it’s just part of the grind.

Enjoyed this post? For more insights on engineering leadership, mindful productivity, and navigating the modern workday, follow me on LinkedIn to stay inspired and join the conversation.