How to Structure LLM Workflows for Verifiable, Inspectable Synthesis

Flying Blind: Six Months Inside the Black Box

I spent the last half year working inside a project UI. On paper, it should have made my research effortless: every conversation, every AI output, every document was “right there.” In practice, I was flying blind. Clicking through dialogs and chat threads felt like rummaging for tools in a pitch-black basement. Staring at yet another popup, trying to recall whether the right file was even loaded, is almost funny until you realize the joke’s on you. If you’d asked me to map my own context at any given moment, I couldn’t have done it.

Giving the model access to every relevant file felt like the responsible move, but it was never enough. Sometimes it skipped obvious connections; other times it hallucinated links that didn’t exist. All I could do was scold it to “do better.”

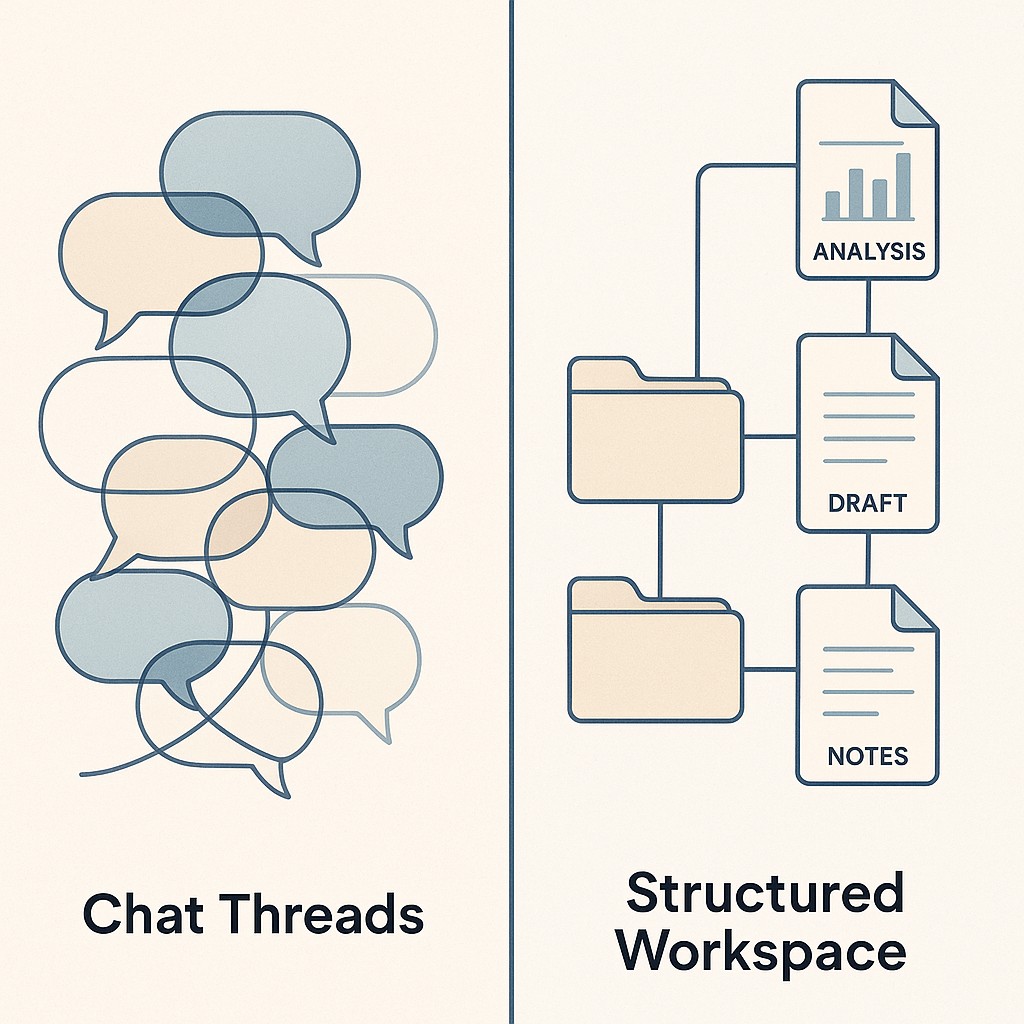

That’s when it hit me. The interface itself was the real culprit. We could iterate all day, but neither of us had a true map of the workflow. The UI let me run the system, but kept the structure hidden.

Every week, the mess compounded. The files grew harder to track, and the prompts chained into brittle loops. I kept trying to organize chaos with more chaos.

The turning point came the day I exported the entire corpus and treated it as a dataset, not a stack of random chats. Six months ago, access felt empowering. But access is not synthesis. A pile of files might make you feel equipped, but what actually matters is structure and search. With a unified, inspectable workspace, you can see what’s real, direct how synthesis unfolds, verify every step, and finally trust your outcomes. It sounds slow, and the tooling overhead is real. But this is the shift that turns fleeting LLM experiments into dependable work you can audit and control. Access ≠ Synthesis.

Why Chat UIs Break Down for Multi-Document Reasoning

Chat and project interfaces aren’t built for complexity. They flatten everything into a rolling timeline. You drop in files and paste chunks, and the structure gets wiped out: the model can’t see boundaries or relationships, only one long, undifferentiated string. Here’s the kicker: add too many documents and performance actually drops. Multi-document QA accuracy can sink by over 20% when the context grows flat and unwieldy. Instead of clarity, you’re left guessing how (or if) the AI is connecting the dots.

After months in the trenches, my research threads sprawled across channels, tabs, and uploads. Weeks’ worth of context was scattered in fragments the model had no hope of reassembling. Eventually you realize you’ve lost track of what’s actually in scope, and sometimes that realization comes weeks too late.

Things get weirder when the corpus is mostly text. I caught outputs quoting sources that didn’t exist, merging terms from different documents, and mixing up internal references. With far more prose than code, the links between ideas get loose. Pinning generation to retrieved, verifiable content from a curated corpus, as RAG systems do, reduces hallucinations and anchors outputs with trackable citations. Without that, you’re just hoping the model doesn’t get creative in the wrong places.
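Retrieval itself is out of scope here, but the verification half is easy to sketch. The example below assumes a hypothetical citation convention, a quoted span followed by `[source: <filename>]`, and a corpus held as a dict of filename to text. It flags citations that point at files you don’t have, or quotes that don’t appear verbatim in the cited file.

```python
import re

# Hypothetical convention: "quoted span" [source: <filename>]
CITATION = re.compile(r'"([^"]+)"\s*\[source:\s*([^\]]+)\]')


def check_citations(output: str, corpus: dict[str, str]) -> list[str]:
    """Return a list of problems; an empty list means every citation checked out."""
    problems = []
    for quote, source in CITATION.findall(output):
        source = source.strip()
        if source not in corpus:
            # The model cited a file that is not in the corpus at all.
            problems.append(f"unknown source: {source}")
        elif quote not in corpus[source]:
            # The file exists, but the quoted text does not appear in it verbatim.
            problems.append(f"quote not found in {source}: {quote!r}")
    return problems


corpus = {"notes_a.txt": "Retention dropped after the pricing change."}
output = ('"Retention dropped after the pricing change" [source: notes_a.txt] '
          'and "churn doubled" [source: notes_b.txt]')
print(check_citations(output, corpus))
```

Exact substring matching is deliberately strict; it will reject paraphrases, which is usually what you want when the goal is catching invented quotes.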

But here’s the real pain. There’s no way to prove, steer, or validate what the system just did. No artifact graph mapping connections. No searchable trail of what led to what, or why. If the output runs off course, you can’t correct a step. You have to start from scratch, dropping fresh inputs and hoping for a better run. The tool hides the “why,” and you feel locked out of your own work.

So I want to reframe things. The bottleneck might not be your model. It might just be your interface. If you can’t see structure, you can’t steer or trust outcomes.

Export, Inspect, Operate: From Black Box to Workspace Control

The real shift came after months of wrestling with tangled threads and guesswork: I moved beyond the chat UI and exported everything. It wasn’t a grand plan. Frankly, it felt like admitting defeat. I dumped all the conversations and documents into folders, fired up VSCode, and for the first time I could actually see what the model saw. The relief was immediate. Both of us, me and the assistant, finally had the same window into the structure. From that moment, the project stopped feeling like a black box.

The workspace itself was simple. Raw source files in one folder, every derived artifact in another, all normalized into text formats. Then I built a full-text index on top. VSCode’s search wasn’t glamorous, but it let me sweep through the corpus, surface links, and track changes in a way no chat history ever could.
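I relied on VSCode’s built-in search, but the idea behind a full-text index is small enough to sketch. This is a minimal inverted index, assuming plain-text files under one folder (the `raw/` and `derived/` names in the usage are hypothetical). It maps each lowercase token to every (file, line) where it appears.

```python
import re
from collections import defaultdict
from pathlib import Path

TOKEN = re.compile(r"[a-z0-9_]+")


def load_folder(folder: str, pattern: str = "*.txt") -> dict[str, str]:
    """Read every matching file under `folder` (recursively) as UTF-8 text."""
    root = Path(folder)
    return {p.relative_to(root).as_posix(): p.read_text(encoding="utf-8")
            for p in root.rglob(pattern)}


def build_index(docs: dict[str, str]) -> dict[str, set[tuple[str, int]]]:
    """Map each lowercase token to the (filename, line number) pairs where it occurs."""
    index: dict[str, set[tuple[str, int]]] = defaultdict(set)
    for name, text in docs.items():
        for lineno, line in enumerate(text.splitlines(), start=1):
            for token in TOKEN.findall(line.lower()):
                index[token].add((name, lineno))
    return index


def search(index: dict[str, set[tuple[str, int]]], term: str) -> list[tuple[str, int]]:
    """Return every location of a single-word term, sorted by file then line."""
    return sorted(index.get(term.lower(), set()))


docs = {"raw/a.txt": "Pricing changed in March.\nChurn rose.",
        "derived/b.txt": "churn analysis"}
print(search(build_index(docs), "Churn"))
```

In a real workspace you would call `build_index(load_folder("workspace"))`; single-token lookup is the simplest case, and phrase search would need positions stored as well.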

With a structured workflow in place, actual operations became tractable. Need to extract entities? I’d run a targeted pull over every document, drop the results into entity_list.json, and sweep for overlaps or contradictions. Consolidating insights stopped being guesswork: I could diff versions (analysis_v3.txt, analysis_v4.txt) and see exactly what shifted. Synthesis, too: when you merge conversations into a stable summary, it now lives in summary_final.md, not in ephemeral scrollback. The file names alone (analysis_v3.txt, entities_merged.json) became anchors for collaboration, with the model or with teammates. You could hand someone a concrete artifact and say, “Here’s what changed.”
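Both operations in that paragraph fit in a few lines of standard-library Python. This sketch assumes each entity list is a flat JSON object mapping entity name to type (a hypothetical shape for something like entity_list.json). A “contradiction” here just means the same entity tagged with different types in two lists.

```python
import difflib


def diff_versions(old_text: str, new_text: str,
                  old_name: str = "analysis_v3.txt",
                  new_name: str = "analysis_v4.txt") -> str:
    """Unified diff between two versions of an artifact, like `diff -u`."""
    return "".join(difflib.unified_diff(
        old_text.splitlines(keepends=True),
        new_text.splitlines(keepends=True),
        fromfile=old_name, tofile=new_name))


def sweep_entities(a: dict[str, str],
                   b: dict[str, str]) -> tuple[set[str], dict[str, tuple[str, str]]]:
    """Return entities present in both lists, plus those whose types disagree."""
    shared = set(a) & set(b)
    conflicts = {e: (a[e], b[e]) for e in shared if a[e] != b[e]}
    return shared, conflicts


print(diff_versions("churn is flat\nprice up\n", "churn is rising\nprice up\n"))
# In practice each dict would come from json.load() on an entity list file.
print(sweep_entities({"Acme": "company", "Q3": "period"},
                     {"Acme": "person", "Berlin": "place"}))
```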

The validation loop finally became workable. Rule tiers and infraction budgets, tuned with care, cut down on retries. I could launch targeted queries across files, cross-check references, and roll back edits when something went off. The whole process is reversible and transparent. As the document count climbed, model quality tanked unless I kept contexts short and loops tight. Short, focused contexts with validation loops produced more reliable results, even when the same information was available in one big dump. That’s the leverage: working in slices rather than sprawling dumps keeps outputs anchored and testable.
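Here is one way to read “rule tiers and infraction budgets,” sketched under assumptions of my own: each rule is either `hard` (any failure rejects the output) or `soft` (failures count against a budget), and `generate` is a hypothetical stand-in for a model call. Raising the soft budget trades strictness for fewer retries.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Rule:
    name: str
    tier: str                     # "hard": any failure rejects; "soft": counts against budget
    check: Callable[[str], bool]  # True when the output passes this rule


def validate(output: str, rules: list[Rule], soft_budget: int) -> tuple[bool, list[str]]:
    """Accept unless a hard rule fails or soft failures exceed the budget."""
    failed = [r for r in rules if not r.check(output)]
    infractions = [r.name for r in failed]
    if any(r.tier == "hard" for r in failed):
        return False, infractions
    return len(failed) <= soft_budget, infractions


def run_with_retries(generate: Callable[[int], str], rules: list[Rule], soft_budget: int,
                     max_attempts: int = 3) -> tuple[Optional[str], int, list[str]]:
    """Call generate(attempt) until an output validates or attempts run out."""
    infractions: list[str] = []
    for attempt in range(max_attempts):
        output = generate(attempt)
        ok, infractions = validate(output, rules, soft_budget)
        if ok:
            return output, attempt + 1, infractions
    return None, max_attempts, infractions


rules = [
    Rule("non_empty", "hard", lambda s: bool(s.strip())),
    Rule("cites_source", "soft", lambda s: "[source:" in s),
    Rule("under_200_words", "soft", lambda s: len(s.split()) <= 200),
]
drafts = ["", "Summary without citations.", "Summary [source: a.txt]"]
print(run_with_retries(lambda i: drafts[i], rules, soft_budget=0))
print(run_with_retries(lambda i: drafts[i], rules, soft_budget=1))
```

With a budget of 0 the loop needs all three drafts; with a budget of 1 it accepts the uncited second draft and records the infraction, so the trade-off stays visible.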

This may sound odd, but the shift reminded me of making chili last winter—when I realized halfway through that I’d diced both onions and apples, and couldn’t tell them apart in the bowl. I spent ten minutes picking out apple bits before finally giving up and hoping the spices would mask it. The lesson stuck: clear labeling and prep save time, not just in the kitchen.

You might worry that the setup is heavy, or that jumping out of the chat slows things down. Maybe. But control over structure beats speed in the long run. And the first time you search a term, land every mention, and trace outputs back to inputs? You won’t want to go back.

Treating Your Corpus Like a Dataset: Building Verifiable AI Workflows

Let’s start with the basics: data hygiene. Before you can do anything intelligent, your corpus needs to be tidy. Define what’s in scope, normalize formats, index everything, write context briefs so the model’s recommendations stay aligned, and get obsessive about naming conventions. Raw artifacts live in one place, intermediates in another, and finals get their own spot.
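Conventions only help if something enforces them. This is a minimal linter for a hypothetical layout with three stage folders (`raw/`, `intermediate/`, `final/`) and lowercase snake_case names with a plain-text extension. The folder names, the allowed extensions, and the “`_final` files belong in `final/`” rule are all assumptions to adapt.

```python
import re
from pathlib import PurePosixPath

STAGES = {"raw", "intermediate", "final"}  # hypothetical stage folders
# Lowercase snake_case stem (digits allowed, e.g. analysis_v2) plus a plain-text extension.
NAME = re.compile(r"^[a-z0-9]+(?:_[a-z0-9]+)*\.(?:txt|md|json)$")


def check_layout(paths: list[str]) -> list[str]:
    """Return one message per convention violation, in input order."""
    problems = []
    for p in paths:
        path = PurePosixPath(p)
        stage = path.parts[0] if len(path.parts) > 1 else None
        if stage not in STAGES:
            problems.append(f"{p}: not under a stage folder")
        if not NAME.match(path.name):
            problems.append(f"{p}: name breaks convention")
        if path.stem.endswith("_final") and stage != "final":
            problems.append(f"{p}: final artifact outside final/")
    return problems


print(check_layout([
    "raw/interview_03.txt",
    "intermediate/analysis_v2.txt",
    "final/synthesis_final.txt",
    "notes.txt",
    "raw/Final Draft (2).docx",
    "intermediate/summary_final.md",
]))
```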

A filename like analysis_v2.txt or synthesis_final.txt isn’t just a label—it’s how you track evolution without losing your mind. If you don’t nail this discipline up front, every downstream task gets fuzzy and unreliable. You want to be able to hand off any artifact and have its lineage crystal clear, both to you and to whoever picks up the thread. Trust me: the more documents you wrangle, the more grateful you’ll be for clarity that scales.
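One practical detail when tracking evolution through names like analysis_v2.txt: plain string sorting puts `analysis_v10.txt` before `analysis_v2.txt`. This sketch, assuming the `<stem>_v<N>.<ext>` pattern, parses the version number so ordering and “what’s the next name?” stay correct. Both helper names are hypothetical.

```python
import re


def versions(filenames: list[str], stem: str) -> list[tuple[int, str]]:
    """Return (version, filename) pairs matching <stem>_v<N>.<ext>, sorted numerically."""
    pattern = re.compile(rf"^{re.escape(stem)}_v(\d+)\.[A-Za-z0-9]+$")
    found = []
    for name in filenames:
        m = pattern.match(name)
        if m:
            found.append((int(m.group(1)), name))
    return sorted(found)


def next_version_name(filenames: list[str], stem: str, ext: str = "txt") -> str:
    """Name for the next version; starts at v1 if none exist yet."""
    found = versions(filenames, stem)
    n = found[-1][0] + 1 if found else 1
    return f"{stem}_v{n}.{ext}"


names = ["analysis_v10.txt", "analysis_v2.txt", "analysis_v9.txt", "synthesis_final.txt"]
print(versions(names, "analysis"))
print(next_version_name(names, "analysis"))
```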

Now, think bigger. Every time you prompt the model or do a manual operation, treat it like a typed function. Don’t just “ask for a summary.” Run explicit extraction, normalization, join, and synthesis steps, each one producing a new, stand-alone artifact. Extraction grabs entities, normalization aligns spelling and formatting, joins merge sources, and synthesis pins the output to traceable summaries. You can search, diff, and test these steps just as you’d check modular code. Here’s where the shift happens: stop thinking of prompts as chats and start treating them as versioned operations over a structured dataset. Friction drops fast once you adopt this mindset. You trade amorphous “conversations” for focused artifacts, each ready for reuse or deep analysis.
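To make “prompts as typed functions” concrete, here is a toy pipeline where each step takes and returns plain typed data. The extractor is a deliberately crude stand-in (it treats capitalized words as entities) marking where a model call would go; the `Mention` type, the alias map, and the `min_sources` threshold are illustrative assumptions, not a prescribed schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mention:
    entity: str
    source: str
    line: int


def extract(source: str, text: str) -> list[Mention]:
    """Stand-in extractor: capitalized words count as entities (a model call would go here)."""
    mentions = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for word in line.split():
            word = word.strip(".,;:()\"'")
            if word[:1].isupper():
                mentions.append(Mention(word, source, lineno))
    return mentions


def normalize(mentions: list[Mention], aliases: dict[str, str]) -> list[Mention]:
    """Map known aliases (e.g. 'ACME') to one canonical spelling."""
    return [Mention(aliases.get(m.entity, m.entity), m.source, m.line) for m in mentions]


def join(*batches: list[Mention]) -> dict[str, list[Mention]]:
    """Group mentions from every source by entity."""
    grouped: dict[str, list[Mention]] = {}
    for batch in batches:
        for m in batch:
            grouped.setdefault(m.entity, []).append(m)
    return grouped


def synthesize(grouped: dict[str, list[Mention]], min_sources: int = 2) -> list[str]:
    """One line per entity seen in at least `min_sources` files, with file:line citations."""
    lines = []
    for entity in sorted(grouped):
        ms = grouped[entity]
        if len({m.source for m in ms}) >= min_sources:
            cites = sorted({f"{m.source}:{m.line}" for m in ms})
            lines.append(f"{entity} -- {', '.join(cites)}")
    return lines


aliases = {"ACME": "Acme"}
a = normalize(extract("raw/a.txt", "ACME raised prices.\nChurn followed."), aliases)
b = normalize(extract("raw/b.txt", "Acme lost customers."), aliases)
print(synthesize(join(a, b)))
```

Because every step returns data rather than chat text, each intermediate (`a`, `b`, the joined dict) can be written to its own file, diffed, and tested independently.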

Recording provenance is non-negotiable if you want traceable research. For every artifact, store the source span, the rationale, and the links to its inputs; it’s the only way to prove what led where. Got a synthesis_final.txt? It needs a record of not just what was said, but which files and statements fed it, and why. If anyone promotes an output to “final,” checks need to happen first, or you’re just rubber-stamping guesses. Some days I still skip this, promising myself I’ll do it later. Honestly, I haven’t broken that habit for good. The urge to move fast is hard to shake, even when you know better.
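A provenance record can be as small as a JSON sidecar next to each artifact. This sketch assumes source spans are stored as character offsets plus the exact text they covered, and that the corpus is a dict of path to current file contents. The promotion gate refuses to call anything “final” if the rationale is empty, a source is missing, or a span no longer matches its file. All field names are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class SourceSpan:
    file: str
    start: int  # character offset, inclusive
    end: int    # character offset, exclusive
    text: str   # exact text the span covered when recorded


@dataclass
class Provenance:
    artifact: str
    rationale: str
    spans: list[SourceSpan] = field(default_factory=list)
    links: list[str] = field(default_factory=list)  # upstream artifacts this one depends on


def promotion_problems(prov: Provenance, corpus: dict[str, str]) -> list[str]:
    """Checks to run before promoting an artifact to final; empty list means OK."""
    problems = []
    if not prov.rationale.strip():
        problems.append("missing rationale")
    if not prov.spans:
        problems.append("no source spans recorded")
    for s in prov.spans:
        current = corpus.get(s.file)
        if current is None:
            problems.append(f"missing source file: {s.file}")
        elif current[s.start:s.end] != s.text:
            problems.append(f"span drifted: {s.file}[{s.start}:{s.end}]")
    for link in prov.links:
        if link not in corpus:
            problems.append(f"missing upstream artifact: {link}")
    return problems


def to_sidecar(prov: Provenance) -> str:
    """Serialize the record, e.g. for synthesis_final.txt.provenance.json."""
    return json.dumps(asdict(prov), indent=2)


corpus = {"raw/interview_03.txt": "We churned because of pricing.",
          "intermediate/analysis_v4.txt": "Pricing dominates churn reasons."}
prov = Provenance("final/synthesis_final.txt", "Pricing is the most cited churn reason.",
                  [SourceSpan("raw/interview_03.txt", 22, 29, "pricing")],
                  ["intermediate/analysis_v4.txt"])
print(promotion_problems(prov, corpus))
print(to_sidecar(prov))
```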

Steer the whole operation with targeted scope. Use full-text search to zero in on specific slices of the corpus, iterate on small, atomic batches, and let your code assistant automate the repeatable steps. When you design this way, especially with a coding assistant like Claude Code at your side, every operation becomes precise and controlled, and you’re not stuck rerunning big, ambiguous tasks. This is where real leverage comes from: sharp focus, quick cycles, fewer rabbit holes.
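The “search, then batch” pattern looks like this in miniature. It assumes a case-insensitive substring match is good enough to define the slice; each resulting batch is small enough to hand to the model (or a reviewer) as one atomic task. `batches` is written by hand because `itertools.batched` only exists from Python 3.12.

```python
from itertools import islice
from typing import Iterable, Iterator


def select_slice(corpus: dict[str, str], term: str) -> list[str]:
    """Names of files whose text mentions `term` (case-insensitive), sorted."""
    t = term.lower()
    return sorted(name for name, text in corpus.items() if t in text.lower())


def batches(items: Iterable[str], size: int) -> Iterator[list[str]]:
    """Yield consecutive chunks of at most `size` items."""
    it = iter(items)
    while chunk := list(islice(it, size)):
        yield chunk


corpus = {"a.txt": "Pricing notes", "b.txt": "onboarding", "c.txt": "new pricing tier",
          "d.txt": "PRICING faq", "e.txt": "misc"}
for batch in batches(select_slice(corpus, "pricing"), size=2):
    print(batch)  # each batch would become one focused, reviewable run
```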

Finally, don’t go it alone. Treat these artifacts like source code. Set up reviews, keep change logs, and make reproducible runs the default. You’ll save yourself and your team future headaches, and shared knowledge becomes dependable—not someone’s personal stash. Think of builds, not drafts. If you do this, work stops being guesswork and starts being a living, auditable asset that actually survives turnover. It’s more overhead up front, but it’s how you make AI fit for real production, not just demos.
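For reproducible runs and change logs, one lightweight option is a manifest per run that hashes the inputs, prompt, and output, appended to a JSON Lines change log. This is a sketch under that assumption; the field names and the `changelog.jsonl` path are hypothetical. Two runs with identical input hashes but different output hashes are a cheap signal that the model step is nondeterministic and needs review.

```python
import hashlib
import json
from datetime import datetime, timezone


def sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def run_manifest(step: str, inputs: dict[str, str], prompt: str, output: str) -> dict:
    """Record what went into a run and what came out, by content hash."""
    return {
        "step": step,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "inputs": {name: sha256(text) for name, text in sorted(inputs.items())},
        "prompt_sha256": sha256(prompt),
        "output_sha256": sha256(output),
    }


def same_inputs(a: dict, b: dict) -> bool:
    """True when two runs saw byte-identical inputs and prompt."""
    return a["inputs"] == b["inputs"] and a["prompt_sha256"] == b["prompt_sha256"]


def append_changelog(path: str, manifest: dict) -> None:
    """Append one manifest per line (JSON Lines), so the log is easy to grep and diff."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(manifest, sort_keys=True) + "\n")


m1 = run_manifest("synthesize", {"intermediate/analysis_v4.txt": "x"}, "Summarize", "out 1")
m2 = run_manifest("synthesize", {"intermediate/analysis_v4.txt": "x"}, "Summarize", "out 2")
print(same_inputs(m1, m2), m1["output_sha256"] == m2["output_sha256"])
# append_changelog("changelog.jsonl", m1)
```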

Fast Once You’re Structured: Why Corpus Discipline Pays Off

I get the hesitation. Exporting everything, building templates, setting up your workspace. It feels like you’re slowing to a crawl just as you want to move fast. But let’s be honest—that “speed” when you’re jumping between tabs and guessing what the model’s seen? That’s flailing. Once you learn how to structure LLM workflows in your workspace, you swap guesswork for targeted moves you can verify on the spot. Setup is an investment, but it pays back tenfold the first time you have to prove (not just trust) where an output came from.

It doesn’t have to be a production-grade pipeline out of the gate, either. I’ve used basic templates for file naming, simple scripts to reformat documents, and lightweight tools that let me keep everything in plain text—but crucially, out of the chat silo. Even ChatGPT Projects gives you a way to escape rolling scrollback and work closer to your actual files. That VSCode search I mentioned earlier? It’s not fancy, but it changed how I navigate and synthesize.
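As an example of the “simple scripts to reformat documents” category, here is a small normalizer for exported text, assuming UTF-8 input. It applies Unicode NFC, converts Windows and old Mac line endings to `\n`, straightens curly quotes, strips trailing whitespace, and collapses runs of blank lines, so that search and diffs stop tripping over invisible differences.

```python
import re
import unicodedata

# Curly quotes -> straight quotes, so searches match regardless of the source editor.
QUOTES = str.maketrans({"\u2018": "'", "\u2019": "'", "\u201c": '"', "\u201d": '"'})


def normalize_text(text: str) -> str:
    text = unicodedata.normalize("NFC", text)                # compose accents: e + ´ -> é
    text = text.replace("\r\n", "\n").replace("\r", "\n")   # unify line endings
    text = text.translate(QUOTES)
    text = "\n".join(line.rstrip() for line in text.split("\n"))  # drop trailing spaces
    text = re.sub(r"\n{3,}", "\n\n", text)                   # at most one blank line in a row
    return text.strip() + "\n"                               # exactly one final newline


print(repr(normalize_text("\u201cHi\u201d  \r\n\r\n\r\n\r\nit\u2019s here\r\n")))
```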

The after-state isn’t theoretical. Now, my outputs are traceable, hallucinations drop off, and handoffs—either to teammates or the next model iteration—just work. There’s a relief when you can actually see, search, and track the entirety of your corpus, instead of relying on vibes or memory.

Treating your AI workspace as a searchable dataset is what restores real control. The point isn’t the overhead. It’s making your synthesis targeted, verifiable, and correctable, which is exactly what you need wherever decisions require evidence. When you can map and audit every move, you operate from a position of strength, not hope.

So export, index, and operate. Don’t settle for what you can’t see—visibility beats vibes, every time.

Enjoyed this post? For more insights on engineering leadership, mindful productivity, and navigating the modern workday, follow me on LinkedIn to stay inspired and join the conversation.