Accelerate project setup with AI: A proven workflow to start faster

Accelerate Project Setup with AI: Upleveling the Boring Setup

Today I needed to build a small tool to upload blog posts to my site. Not for the first time, and probably not the last. It was one of those Tuesday-afternoon tasks: simple, familiar, but still—if I let it—more distracting than it was interesting.

AI doesn’t have to be magic or mayhem. Sometimes, it’s just practical. If you’re skeptical, that’s smart. The wild claims are everywhere, but I’m not here to sell anyone on fairy dust. I use what works, when it actually helps clear the path.

The real friction isn’t deep engineering. It’s recreating steps, hunting for the right syntax (is this endpoint still POST or did I switch it?), and assembling the same REST API boilerplate again. I keep finding myself redoing these tiny rituals. None of it is hard; there are just enough loose ends and cognitive load to make me wonder if I should have grabbed yesterday’s version instead of starting fresh. It saps momentum before I even get to the part I care about.

So here’s the shift. Start faster with AI the next time you’re setting up something you’ve done a hundred times—use it as your starter engine. One click, one command, and you get a clean outline and crisp boilerplate, so you’re already rolling. It’s funny—six months ago, I would have spent twice as long sifting through Stack Overflow posts for “just right” examples. Now, the first draft lands in seconds.

The point is simple. AI delivers the biggest win when you use it to clear setup friction and free your attention, not when you hand over control. Review what it gives you. Decide what fits. You stay in the driver’s seat, but it lets you skip the traffic.

Outlines and Boilerplate: Lifting the Setup Burden

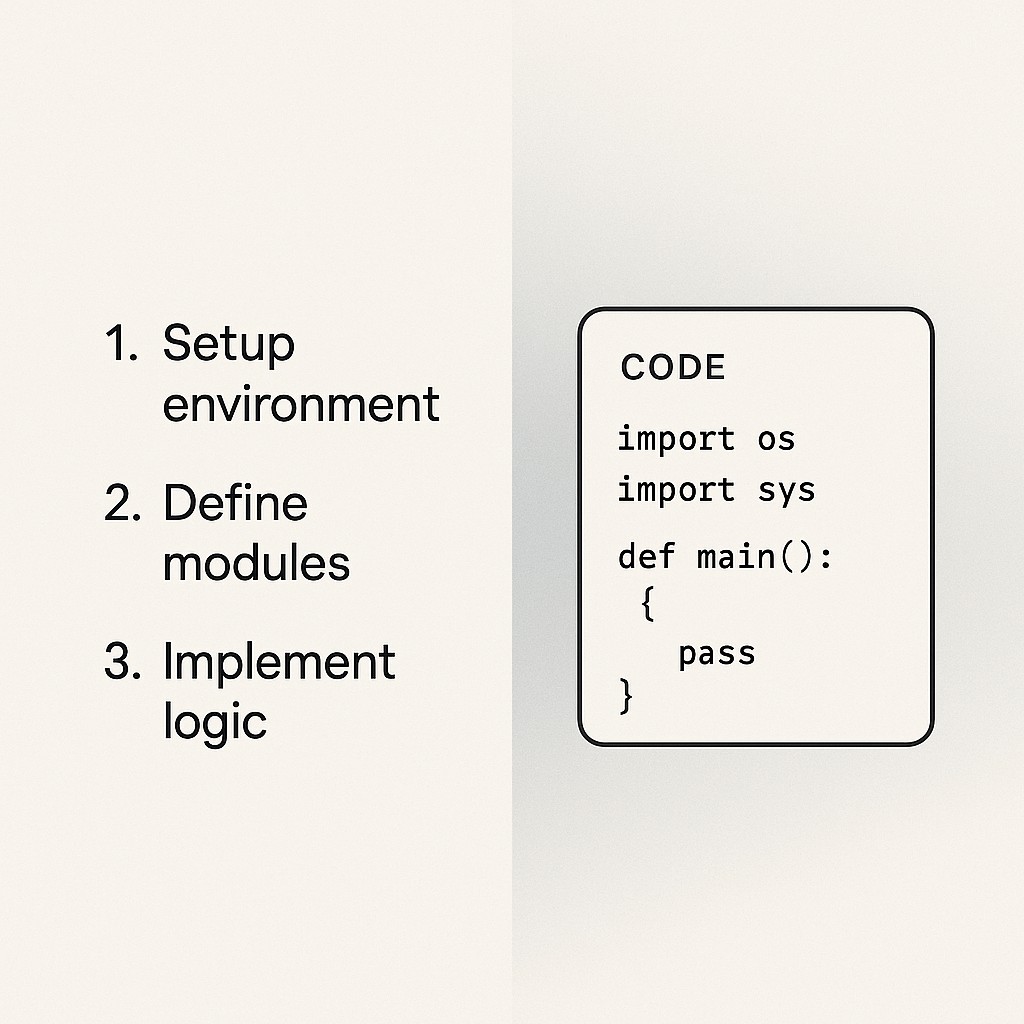

When I want to move fast, my starting point is always the same. I ask, “Give me the steps to do X. Assume I’m technical.” Models are surprisingly good at breaking things down when you frame the prompt to “think step by step.” That framing splits the work into logical chunks, which makes it far easier to debug or adapt. You get crisp, actionable pieces instead of a wall of generic advice. (freeCodeCamp)

The magic of these outputs isn’t in their complexity. They land as short, skimmable action lists. Clean outlines with the right sequence and just enough detail. When the outline skips the fluff and sticks to the steps, I actually feel like I’m gaining momentum instead of wading through noise.

To reduce boilerplate with AI, it’s pretty much the same flow. Let’s say I want to set up a REST API endpoint to take in a new post. I’ll give the model a quick prompt: “Generate the Python boilerplate for a POST endpoint that saves a blog entry—keep it minimal, but production-grade.” What comes back? Usually, a lean Flask app, the endpoint, params, error handling, sometimes even a ready-to-run test stub. I read through the output line by line, tweak paths, check the error cases, strip anything I know my framework handles. I never copy it blind. But there’s a clear difference—I’m editing working code, not inventing it from scratch, and I don’t lose energy on repetitive syntax or configuration.
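
To make that concrete, here is a minimal sketch of the kind of boilerplate such a prompt might return. It is not actual model output, and it swaps Flask for Python’s standard-library `http.server` so it runs without installing anything. The `/posts` path, the `title` and `content` fields, and the in-memory `POSTS` list are assumptions for illustration only.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical in-memory store; a real tool would write to a database or CMS.
POSTS = []


def create_post(raw_body):
    """Validate a JSON body and store the post. Returns (status, payload)."""
    try:
        data = json.loads(raw_body or b"{}")
    except ValueError:  # covers malformed JSON and undecodable bytes
        return 400, {"error": "body must be valid JSON"}
    if not isinstance(data, dict):
        return 400, {"error": "body must be a JSON object"}
    title = data.get("title")
    content = data.get("content")
    if not isinstance(title, str) or not title.strip():
        return 422, {"error": "title is required"}
    if not isinstance(content, str) or not content.strip():
        return 422, {"error": "content is required"}
    entry = {"id": len(POSTS) + 1, "title": title.strip(), "content": content}
    POSTS.append(entry)
    return 201, entry


class PostHandler(BaseHTTPRequestHandler):
    """Thin HTTP wrapper; all validation lives in create_post."""

    def do_POST(self):
        if self.path != "/posts":
            status, payload = 404, {"error": "not found"}
        else:
            try:
                length = max(int(self.headers.get("Content-Length", 0)), 0)
            except ValueError:
                length = 0
            status, payload = create_post(self.rfile.read(length))
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port=8000):
    """Build the server; call .serve_forever() on the result to run it."""
    return HTTPServer(("127.0.0.1", port), PostHandler)
```

To try it locally, call `make_server().serve_forever()` and POST JSON to `http://127.0.0.1:8000/posts`. Keeping validation in a plain function is one possible design choice; it makes the error cases easy to check without starting a server.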

Skimmable, well-structured outlines cut down extraneous cognitive load caused by messy presentation, which directly sharpens momentum. When you’re not hunting through documentation or stacking tabs to cross-reference trivial dependencies, it gets easier to stay in flow. Even a rough first draft from an AI kicks off that process, so you’re deciding, not dithering.

It’s not about getting “the answer” right away. It’s about fewer tabs open, fewer micro-decisions on setup, and a much quicker first diff in your repo. Usefulness as a starting point drives real productivity—not just correctness—so even a rough outline speeds up development (CACM).

The punchline is not that AI automates you out of the equation. The acceleration is real, and you’re still steering. The initial lift is the win; you shape what comes next.

A Repeatable AI Workflow—Momentum Without Surrendering Control

I don’t use AI to write all my code. But I almost always use it to get started. The playbook is simple—prompt for a clear outline, generate boilerplate, adapt it to fit, then verify that what you’ve got actually works. This isn’t about outsourcing thinking or being lazy; it’s about removing the slow, tedious friction that always shows up at the beginning of familiar projects. I know that initial drag: flipping through old repos, trying to remember if you went with FastAPI or Flask last time, scavenging for an example that isn’t two years out of date. Those are the bits that slow real work to a crawl.

So the point of this workflow is control—using AI to get all your pieces on the board quickly, but making every move yours from there.

First step: ask for a step-by-step plan tailored to your stack. Literally: “List out the steps to set up a simple REST post uploader in [your language or framework], assume I want basic auth and error handling.” Keep the specs and constraints tight, and make your assumptions explicit. Framing the request this way cuts down the back-and-forth, and iteration stabilizes fast.
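
If you reuse this kind of prompt often, it can help to template it. The sketch below is one way to do that; `build_outline_prompt` and its parameters are hypothetical names, not part of any library, and the wording simply mirrors the prompt quoted above.

```python
def build_outline_prompt(task, stack, constraints=(), assumptions=()):
    """Assemble an outline prompt with explicit constraints and assumptions.

    Hypothetical helper: the phrasing is a starting point, not a required format.
    """
    lines = [
        f"List out the steps to {task} in {stack}.",
        "Assume I'm technical. Think step by step and keep each step short.",
    ]
    if constraints:
        lines.append("Constraints:")
        lines.extend(f"- {item}" for item in constraints)
    if assumptions:
        lines.append("Assumptions:")
        lines.extend(f"- {item}" for item in assumptions)
    return "\n".join(lines)


prompt = build_outline_prompt(
    task="set up a simple REST post uploader",
    stack="Python",
    constraints=["basic auth", "error handling"],
    assumptions=["posts are Markdown files on local disk"],
)
print(prompt)
```

Spelling out constraints and assumptions as separate lists keeps them visible, which makes it easier to spot the one you forgot before the model guesses it for you.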

It’s a lot like mise en place when you’re cooking. Before you do anything interesting, you want your ingredients prepped and tools ready. Once everything’s in reach, building goes way faster. No frantic searches for a missing spice or (in our case) a missing import line.

Next, I ask for boilerplate covering just the edge I need—say, the POST handler, or the schema validator. I’m blunt about requirements. “Give me the minimal example, modern syntax, Pydantic for validation, use snake_case for names.” What comes back is rarely perfect, but that’s fine. This isn’t copy-paste territory. I read for gaps or assumptions that don’t fit my context—maybe it’s missing a custom exception, maybe it nailed the input checks but misnames params. Editing working code feels efficient; I’m swapping out pieces instead of building the whole rig from memory, and that’s much less mentally taxing.
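
As an illustration of the “schema validator” piece, here is a minimal sketch. It uses standard-library dataclasses as a stand-in for Pydantic so it runs with no dependencies; the `NewPost` model and its snake_case field names (`title`, `body_markdown`, `tags`) are assumptions, not a real schema.

```python
from dataclasses import dataclass


class ValidationError(ValueError):
    """Raised when an incoming post payload fails validation."""


@dataclass(frozen=True)
class NewPost:
    title: str
    body_markdown: str
    tags: tuple = ()

    def __post_init__(self):
        # Check each field as soon as the object is constructed.
        if not isinstance(self.title, str) or not self.title.strip():
            raise ValidationError("title must be a non-empty string")
        if not isinstance(self.body_markdown, str) or not self.body_markdown.strip():
            raise ValidationError("body_markdown must be a non-empty string")
        if not all(isinstance(tag, str) for tag in self.tags):
            raise ValidationError("tags must all be strings")


def parse_new_post(payload):
    """Turn a decoded JSON object into a NewPost, rejecting surprises."""
    if not isinstance(payload, dict):
        raise ValidationError("payload must be a JSON object")
    allowed = {"title", "body_markdown", "tags"}
    unknown = set(payload) - allowed
    if unknown:
        raise ValidationError(f"unknown fields: {sorted(unknown)}")
    missing = {"title", "body_markdown"} - set(payload)
    if missing:
        raise ValidationError(f"missing fields: {sorted(missing)}")
    tags = payload.get("tags", [])
    if not isinstance(tags, (list, tuple)):
        raise ValidationError("tags must be a list")
    return NewPost(payload["title"], payload["body_markdown"], tuple(tags))
```

Rejecting unknown fields is a deliberate choice in this sketch: it surfaces misnamed params (the kind of slip generated code tends to make) instead of silently ignoring them.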

Here’s an odd aside: last week, AI tossed in a random “hello world” route when I asked for just a POST handler. No clue why, but for a second I thought maybe I’d accidentally triggered some default template. Not helpful, but a reminder that even smart systems muddle the edges sometimes. Dead simple to delete, but it wasted a minute and made me double-check the output.

Once things look plausible, I run a quick test. Usually, it’s a lightweight dry run or curl request. Skim the logs, scale the scrutiny to the risk of the change, and once it’s clean, commit a small, focused diff. The important thing is to keep that momentum: catch obvious mistakes while you’re moving, not after you’ve built a tower of accidental complexity.
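
For the quick test itself, a small script can stand in for a hand-typed curl call. This is a minimal sketch using only the standard library; the function names, the expected `201` status, and the `id` key in the response are assumptions about the endpoint under test.

```python
import json
import urllib.error
import urllib.request


def build_request(url, payload):
    """Build a JSON POST request without sending it (the dry-run half)."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def check_response(status, body, expected_status=201, required_keys=("id",)):
    """Return a list of problems; an empty list means the response looks sane."""
    problems = []
    if status != expected_status:
        problems.append(f"expected status {expected_status}, got {status}")
    try:
        data = json.loads(body)
    except ValueError:
        problems.append("response body is not valid JSON")
        return problems
    if not isinstance(data, dict):
        problems.append("response body is not a JSON object")
        return problems
    for key in required_keys:
        if key not in data:
            problems.append(f"missing key in response: {key}")
    return problems


def smoke_test(url, payload, timeout=5):
    """Send the request and report problems instead of raising on HTTP errors."""
    request = build_request(url, payload)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return check_response(response.status, response.read())
    except urllib.error.HTTPError as error:
        return check_response(error.code, error.read())
    except urllib.error.URLError as error:
        return [f"could not reach {url}: {error.reason}"]
```

Against a locally running service, `smoke_test("http://127.0.0.1:8000/posts", {"title": "t", "content": "c"})` returns an empty list when everything checks out, and a short list of complaints when it doesn’t.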

You don’t have to buy into a hype cycle to see the value here. Just get the outline. Use the generated boilerplate. Tweak, test, and keep steering. That’s the pattern I rely on because it keeps attention on the parts that still need me—and lets the AI handle the stuff I’m always tempted to put off.

One thing I still wrestle with: sometimes I’ll compare the AI output to my older “handmade” snippets and wonder if I’m losing the little optimizations I’d built up over months. Half the time, I think I am. The other half? I honestly don’t miss them.

Guardrails for Using AI—How to Stay Productive Without Getting Burned

First off, I’ve seen enough rushed outputs to know the risks: off-by-one bugs in scaffolds, odd naming conventions, subtle logic errors. So I keep a simple checklist. Read the diff line by line, not just the highlights. Actually run the snippet in a safe sandbox before anything touches prod. Compare what you get against your usual standards: style, error handling, edge cases. And if it feels off or too generic, trust that instinct and tighten it up now, not later. It’s always faster to catch a weird default before it’s stacked into the real build.

You might be thinking, “Doesn’t crafting the perfect prompt just eat up all the minutes you’re trying to save?” Fair question. Here’s what I do. Decide on a cap—five, maybe ten minutes max to get a clean draft. If I’m over the limit, it’s a sign I’d be faster typing it from scratch. A quick ROI check keeps momentum real.

Let’s be clear on boundaries. I’m not asking AI to be a silver bullet. I know what to do—just don’t feel like hunting down that one bit of syntax, or re-explaining my standards for the sixth time. The AI is starter fluid. I’m the one driving, reviewing, and shipping.

Momentum matters. This setup gives you quicker starts and sharper progress, and it lets you keep shipping on pace. It’s exactly the kind of workflow that keeps you focused on the parts that actually move the needle.

Extending the Kickstart—More Tasks, More Momentum

It’s not limited to blog tools. This method fits anywhere setup friction creeps in. CLI scaffolds, basic migration scripts, cloud config files, deploy pipelines: all the routine, repetitive setup you know you could build blindfolded but would rather not. Or take schema upgrades: adding a column without breaking prod, rolling a quick CLI to clean up old data, generating a first-draft Dockerfile. Every time I’m about to wade through setup, I reach for the same “give me the outline, then boilerplate” loop and get moving. It’s the Tuesday-morning, mid-sprint booster, whether the stack is Python or shell.
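
Take the “add a column without breaking prod” case. A first draft might look like the sketch below, which assumes SQLite (via Python’s built-in `sqlite3`) and a hypothetical `posts` table; other databases need their own syntax and locking considerations. The migration is additive and safe to run twice.

```python
import sqlite3


def column_exists(conn, table, column):
    """Check the table's schema for a column name."""
    # PRAGMA table_info returns one row per column; index 1 is the name.
    rows = conn.execute(f"PRAGMA table_info({table})").fetchall()
    return any(row[1] == column for row in rows)


def add_column_if_missing(conn, table, column, column_type):
    """Add a nullable column only if it is not already there.

    Table and column names are interpolated into SQL, so pass trusted
    constants only, never user input. Returns True if the column was added.
    """
    if column_exists(conn, table, column):
        return False
    conn.execute(f"ALTER TABLE {table} ADD COLUMN {column} {column_type}")
    conn.commit()
    return True


# Demo against an in-memory database with a hypothetical posts table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT NOT NULL)")
conn.execute("INSERT INTO posts (title) VALUES ('Hello')")
conn.commit()

first_run = add_column_if_missing(conn, "posts", "summary", "TEXT")
second_run = add_column_if_missing(conn, "posts", "summary", "TEXT")
existing_row = conn.execute("SELECT title, summary FROM posts").fetchone()
```

Existing rows simply get `NULL` in the new column, so code that reads the old columns keeps working while the new one is rolled out.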

If you’re working with a team, build norms around this. Agree when to ask for outlines (before kicking off a new service, or prepping a migration), how to review AI-generated boilerplate (a quick line-by-line, same as you’d do for a PR), and where to capture proven prompts (a shared doc, GitHub gist, or just a Notion page with your go-to phrases). Here’s the callback: Framing cuts down the back-and-forth loop mentioned earlier, which means less wasted time getting from blank slate to “first thing worth improving.” Treat the AI output as a starting block, not gospel—speed up kickoff, then use your normal team process to shape it.

Apply this workflow to docs and posts with our app, generating clean outlines and starter drafts so you start faster, keep control, and ship without the usual setup drag.

Try it right now. Next time you spin up a migration, stub a new endpoint, or need a quick cloud config, prompt for an outline and starter code. Feel the shift—then make it a default habit.
