Align AI productivity with outcomes to build trusted value


The Conversation That Won’t Let Me Go

A few weekends ago, over coffee that went cold while we talked, a friend of mine blurted out: “With AI, it’s like one person can do the work of ten now, right?” I laughed, but it stayed with me, because it’s not just a line anymore. That leverage is real: generative AI can push performance for skilled workers up by almost 40%, which is no rounding error. I used to think the hype was a bit much. Now it’s right there in every project and every team meeting, changing what’s even possible. You feel the ground shifting under your feet.

But then, as soon as the excitement faded, the real question landed: If one person’s doing the work of ten, what happens to the other nine who might have had those jobs? That part is harder to talk about, but it’s what actually matters.

The truth? Teams can’t just absorb that much change overnight. Markets don’t expand to meet this new capacity instantly, and skill gaps don’t close themselves just because there’s a shiny new tool in the stack.

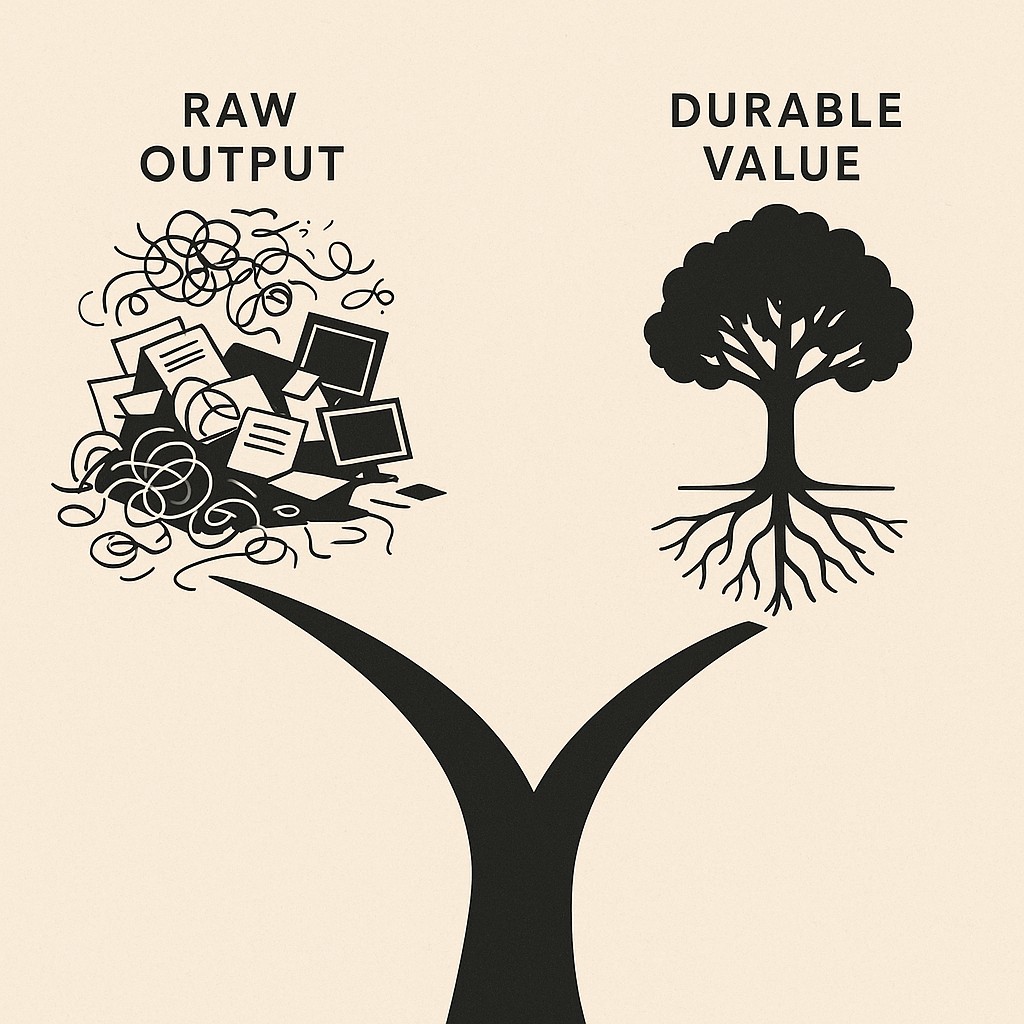

Here’s where things get tricky, and this part makes me wince sometimes—we need to align AI productivity with outcomes, yet our systems reward more output, not more value. It’s way easier to show you’ve generated a lot of stuff than to prove you moved the needle in a way anyone actually cares about.

That’s why we’re flooded with AI slop. The work piles up, but the trust starts to melt away.

AI Leverage Is Neutral—It’s What We Target That Matters

Here’s the thing that keeps getting lost in all this: leverage is neutral. Just having more of it doesn’t guarantee progress. If you point AI at the right metrics, the outcomes you actually care about, it compounds into real value. Aim it the other way, and it just creates more noise, faster. The tool’s power amplifies your choices: whatever you measure, reward, or let slide is what you’ll see ten times over.

How that plays out depends on your seat. For builders, there’s a fork in the road. You can flood the zone with quick wins and auto-generated content, or slow down just enough to craft something that holds up when the excitement fades. Leaders get a similar choice: replace people to pump up output on paper, or redeploy bandwidth toward work the old way never made space for. And consumers, the ones clicking, buying, reading, or building their own stacks, end up amplifying whatever spreads. If they reward surface-level quantity, we spiral into noise; if they reward actual depth, durable value starts to return. The speed and ease of AI magnify the incentive structure, so whatever was already rewarded is now everywhere.

But there’s that pressure—internal, external, sometimes just in your own calendar—to keep up. The worry creeps in. What if slowing down to get it right means missing output targets? How do you even measure something as slippery as trust when everyone’s watching dashboards, not relationships? I get it. The unease isn’t imaginary.

And honestly, the lure is real. Every time I launch a new workflow and watch the numbers spike, I feel it too. AI feels like a superpower, as if I can do the work of ten people.

Designing Workflows That Build Trust, Not Noise

When I talk about “trusted outcomes” in engineering, I mean results that hold up under pressure—work that’s not just done, but done reliably. Here’s what matters: consistency (is it solid every time?), correctness (does it do what it’s supposed to?), and reversibility (can you back out if something goes sideways?). You don’t need to get fancy to measure trust. Even a simple tally—how many builds passed on first try, how often data matches what you expect, how cleanly you can roll changes back—is way more useful than gut feel.
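A simple tally like that fits in a few lines of code. The sketch below is one way to do it, not a prescribed tool: the counter names (`builds_passed_first_try`, `records_matching_expectation`, `rollbacks_clean`, and so on) are hypothetical, and you would fill them from whatever your CI and data checks already record.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class TrustTally:
    """Hypothetical counters for one reporting period; rename to match what you track."""
    builds_total: int
    builds_passed_first_try: int
    records_checked: int
    records_matching_expectation: int
    rollbacks_attempted: int
    rollbacks_clean: int


def rate(numerator: int, denominator: int) -> Optional[float]:
    """Return numerator / denominator, or None when there is nothing to measure yet."""
    return numerator / denominator if denominator else None


def trust_report(t: TrustTally) -> Dict[str, Optional[float]]:
    return {
        # Consistency: share of builds that passed without a retry.
        "consistency": rate(t.builds_passed_first_try, t.builds_total),
        # Correctness: share of checked records that matched expectations.
        "correctness": rate(t.records_matching_expectation, t.records_checked),
        # Reversibility: share of rollbacks that completed cleanly.
        "reversibility": rate(t.rollbacks_clean, t.rollbacks_attempted),
    }


if __name__ == "__main__":
    # Illustrative counts only, not real data.
    week = TrustTally(
        builds_total=40, builds_passed_first_try=34,
        records_checked=500, records_matching_expectation=488,
        rollbacks_attempted=4, rollbacks_clean=3,
    )
    for name, value in trust_report(week).items():
        print(f"{name}: {value:.1%}" if value is not None else f"{name}: n/a")
```

Returning `None` instead of zero for an empty denominator keeps “we haven’t rolled anything back yet” distinct from “every rollback failed.”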

Getting a good read on reliability takes actual numbers, not guesses. Most workflow pilots produce much tighter estimates if you test with at least 20 users per design. It’s not perfect, and honestly, I rarely have every metric dialed in from day one. But operationalizing trust in plain terms gets the team moving in the same direction, and you start seeing where friction shows up before it becomes a fire.
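Why 20 users? More people per design shrinks the uncertainty around any rate you measure. As an illustration only, the sketch below uses the Wilson score interval (a standard way to bound a proportion, chosen here for the example rather than taken from any specific pilot) to compare 4 of 5 users succeeding with 16 of 20. Both are 80%, but the intervals differ a lot.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a success proportion (z=1.96 gives roughly 95% coverage)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n))
    return centre - half_width, centre + half_width


if __name__ == "__main__":
    # Same 80% success rate, different sample sizes (illustrative numbers).
    for successes, n in [(4, 5), (16, 20)]:
        lo, hi = wilson_interval(successes, n)
        print(f"{successes}/{n} users: {lo:.2f} to {hi:.2f} (width {hi - lo:.2f})")
```

With these inputs the 5-user interval spans roughly 0.38 to 0.96, while the 20-user one spans roughly 0.58 to 0.92: the same headline rate, but far less room for the true value to hide.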

Let me go off course for a second—last month, I spent half a day tearing apart our deployment pipeline looking for a bug that turned out to be a timezone mismatch in a script we’d copied over from a project I barely even remembered. I was already tired, I skipped lunch, and I only found it because the newest engineer on the team glanced at their logs and noticed something odd about Tuesday rollouts—literally the sort of glitch that slips through because the stack moves faster than the people adjusting to it. I wish I could say that was rare.

The workflow I lean on is pretty repeatable. In a trust-centered AI workflow, you scope the task tightly, pick your model and tools, plug it into an evaluation harness, keep a human reviewing at the key points, set hard guardrails, and version every output. You get fewer surprises and more signal.
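Here is a minimal sketch of one step in that workflow, assuming a plain Python setup. `TrustedStep`, `StepResult`, `generate`, `evals`, and `guardrails` are hypothetical names; `generate` stands in for whichever model or tool you picked, and the lambdas in the usage example are fakes so the code runs without any external API.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class StepResult:
    output: str
    passed_evals: bool
    blocked_by_guardrail: bool
    needs_human_review: bool
    version: str


@dataclass
class TrustedStep:
    """One tightly scoped task wired to evals, guardrails, review, and versioning."""
    task: str
    generate: Callable[[str], str]           # stand-in for the model or tool you picked
    evals: List[Callable[[str], bool]]       # evaluation harness: each check returns pass/fail
    guardrails: List[Callable[[str], bool]]  # hard rules: any False blocks the output
    review_required: bool = True             # human checkpoint at this step
    history: Dict[str, str] = field(default_factory=dict)

    def run(self) -> StepResult:
        output = self.generate(self.task)
        blocked = not all(rule(output) for rule in self.guardrails)
        passed = all(check(output) for check in self.evals)
        # Version every output by hashing task + output, and keep it so it can be traced or restored.
        payload = json.dumps({"task": self.task, "output": output}, sort_keys=True)
        version = hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]
        self.history[version] = output
        return StepResult(
            output=output,
            passed_evals=passed,
            blocked_by_guardrail=blocked,
            # Send to a human if review is mandatory here or any automated check failed.
            needs_human_review=self.review_required or not passed or blocked,
            version=version,
        )


if __name__ == "__main__":
    step = TrustedStep(
        task="Summarise the release notes in under 50 words",
        generate=lambda task: "Fixes a timezone bug in Tuesday rollouts.",  # fake model output
        evals=[lambda out: len(out.split()) <= 50],
        guardrails=[lambda out: "password" not in out.lower()],
        review_required=False,
    )
    result = step.run()
    print(result.version, result.passed_evals, result.blocked_by_guardrail, result.needs_human_review)
```

Keeping guardrails separate from evals is a deliberate choice in this sketch: an eval failure is a signal to look closer, while a guardrail failure means the output is blocked outright.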

Now, I’ll admit—rolling out even a tiny pilot used to sound like a drag. Like, wouldn’t carving out two weeks to baseline defects and kill off noisy steps slow us down? The first time I actually did it, the opposite happened. That short runway makes it obvious where we’re stepping on rakes, and it lets you measure exactly what lifts with AI and what needs to get tossed.

To put numbers behind “trust,” you can’t just wave your hand and say it feels better. Track defect rates before and after AI-assisted changes, log the hallucination rate, watch whether review times drop, check whether user retention lifts, and keep an eye on incident trends. Hallucination rates of around 1.47% have been reported for clinical AI output, and almost half of those hallucinations were serious enough to affect patient care if left unchecked. That stat is wild, but it’s a good reminder: in high-leverage environments, small error rates turn little bugs into real headaches fast.
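A sketch of that before/after comparison, with made-up counts standing in for a two-week baseline and an AI pilot. The `Window` fields and helper names are hypothetical; `expected_errors` just shows the arithmetic of a small rate at high volume.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class Window:
    """Counts for one measurement window (baseline or AI pilot); field names are hypothetical."""
    changes: int            # changes shipped in the window
    defective_changes: int  # changes that later needed a fix
    review_minutes: float   # total reviewer time spent
    incidents: int          # production incidents attributed to these changes


def defect_rate(w: Window) -> float:
    return w.defective_changes / w.changes


def review_minutes_per_change(w: Window) -> float:
    return w.review_minutes / w.changes


def before_after(baseline: Window, pilot: Window) -> Dict[str, Tuple[float, float]]:
    """Pair each metric as (baseline, pilot) so the comparison is explicit."""
    return {
        "defect_rate": (defect_rate(baseline), defect_rate(pilot)),
        "review_minutes_per_change": (review_minutes_per_change(baseline), review_minutes_per_change(pilot)),
        "incidents": (float(baseline.incidents), float(pilot.incidents)),
    }


def hallucination_rate(flagged: int, reviewed: int) -> float:
    """Share of reviewed AI outputs that contained a hallucination."""
    return flagged / reviewed


def expected_errors(rate: float, volume: int) -> float:
    """Small rates still add up: the expected error count scales linearly with volume."""
    return rate * volume


if __name__ == "__main__":
    # Made-up counts for illustration only.
    baseline = Window(changes=120, defective_changes=18, review_minutes=2400, incidents=5)
    pilot = Window(changes=150, defective_changes=15, review_minutes=2250, incidents=3)
    for metric, (before, after) in before_after(baseline, pilot).items():
        print(f"{metric}: {before:.3f} -> {after:.3f}")
    print(f"hallucination rate: {hallucination_rate(7, 500):.2%}")
    # At the 1.47% rate cited above, 10,000 outputs would carry roughly 147 hallucinations.
    print(round(expected_errors(0.0147, 10_000)))
```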

I sometimes think of this step as calibrating a coffee grinder: dial it in too coarse or too fine, and you drown out the intended flavor. Or it’s like fiddling with a smoke alarm that’s hypersensitive for no reason. Cutting false positives there is basically the same job as tuning model thresholds and eval loops. Tedious, maybe, but worth every minute when the signal finally comes through.
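Tuning a threshold is mostly a trade between false alarms and missed problems. A minimal sketch, assuming you have eval scores paired with human-review labels (`Scored`, `confusion_at`, and `pick_threshold` are hypothetical names, and the scores are invented): pick the strictest threshold that still catches a target share of real problems.

```python
from typing import NamedTuple, Sequence, Tuple


class Scored(NamedTuple):
    score: float      # hypothetical eval score: higher means "more likely a real problem"
    is_problem: bool  # ground-truth label from human review


def confusion_at(examples: Sequence[Scored], threshold: float) -> Tuple[int, int, int]:
    """Return (true_positives, false_positives, false_negatives) when flagging score >= threshold."""
    tp = sum(1 for e in examples if e.score >= threshold and e.is_problem)
    fp = sum(1 for e in examples if e.score >= threshold and not e.is_problem)
    fn = sum(1 for e in examples if e.score < threshold and e.is_problem)
    return tp, fp, fn


def pick_threshold(examples: Sequence[Scored], min_recall: float = 0.9) -> float:
    """Highest threshold (so the fewest false alarms) that still catches min_recall of real problems."""
    if not 0 < min_recall <= 1:
        raise ValueError("min_recall must be in (0, 1]")
    if not any(e.is_problem for e in examples):
        raise ValueError("need at least one labelled real problem to tune against")
    # Recall can only rise as the threshold falls, so scan from strictest to loosest.
    for t in sorted({e.score for e in examples}, reverse=True):
        tp, _, fn = confusion_at(examples, t)
        if tp / (tp + fn) >= min_recall:
            return t
    return min(e.score for e in examples)  # fallback: flag everything


if __name__ == "__main__":
    labelled = [
        Scored(0.95, True), Scored(0.90, True), Scored(0.80, False), Scored(0.75, True),
        Scored(0.60, False), Scored(0.40, False), Scored(0.30, True), Scored(0.20, False),
    ]
    t = pick_threshold(labelled, min_recall=0.75)
    print(t, confusion_at(labelled, t))  # 0.75 (3, 1, 1)
```

On this toy data, loosening the threshold to 0.30 would catch the fourth real problem but triple the false alarms, from one to three. Where you set `min_recall` is a judgment call about how costly a miss is compared with a false alarm.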

From Cutting Noise to Compounding Value

At this point, as a leader, you’re standing at a real fork in the road. You can use AI’s leverage to quietly replace roles and call it “efficiency,” or you can redeploy AI capacity—locking down risks, bolstering quality, and getting under the skin of customer problems where the upside compounds year after year. I won’t pretend there’s a single answer here. I do know the pattern that preserved trust for my teams wasn’t replacement, but careful redeployment—pointing extra hands at the stubborn stuff that never made the sprint cut before.

There’s no magic to it, but here’s how it can look: run focused reliability sprints, attack your tech debt while you have breathing space, actually sit down for customer interviews, refresh your documentation, shape internal tools to cut future drag, and make onboarding less like a minefield. When teams turn their bandwidth toward this, you notice the difference fast.

And when it comes to building up your team, this new capacity is the perfect opening to map skill pathways: think engineers stretching from “prompt engineer” to “pipeline builder,” or taking ownership of eval design, data curation, and regular safety reviews. These “AI toolsmith” roles will outlast the novelty phase, and they pay back as change compounds.

So really, whether you’re building, leading, or consuming the end result, the choice is here every week: will you use the freed-up cycles to dig deeper and set a new bar, or just fill the air with more noise? The opportunity is right in front of us: align AI productivity with outcomes by choosing depth, not just output. That’s when the leverage turns into lasting value. If you remember that “ground shifting” feeling I mentioned up top, this is where it shows up. Some weeks it feels like the ground is still moving.

Align AI Productivity With Outcomes: Changing What Counts

Here’s where things start to shift for real. If you want AI leverage to compound into actual value, put outcomes over output: drop the obsession with “how much did we ship?” and start measuring what holds up after launch. I’m talking outcome metrics: task success rates, user satisfaction, retention curves, incident counts, review quality, and, my favorite, time-to-trust. The difference is simple. Volume goals just ask, “Did you do a lot?” Outcome goals ask, “Did what you did work, stick, help, or get adopted?” This feels uncomfortable at first, since it’s way easier to rack up counts than to prove impact, but when teams refocus here, signal starts to cut through the noise fast. Anyone stuck in looped rework or endless “generate more” sprints knows exactly what I mean.
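Here is what the contrast between volume and outcome metrics can look like in code. This is a sketch under loud assumptions: the `Launch` fields are hypothetical, and “time-to-trust” has no standard definition, so `time_to_trust` assumes one purely for illustration (days from launch until the end of the first 14-day stretch without an incident).

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class Launch:
    """One shipped change. Every field is a hypothetical example of outcome data."""
    shipped_on: date
    tasks_attempted: int
    tasks_succeeded: int
    users_at_launch: int
    users_after_30_days: int
    incident_dates: List[date] = field(default_factory=list)


def volume_score(launches: List[Launch]) -> int:
    """The volume question: did you do a lot?"""
    return len(launches)


def task_success_rate(launch: Launch) -> float:
    return launch.tasks_succeeded / launch.tasks_attempted


def retention_30d(launch: Launch) -> float:
    return launch.users_after_30_days / launch.users_at_launch


def time_to_trust(launch: Launch, as_of: date, quiet_days: int = 14) -> Optional[int]:
    """Assumed definition: days from launch to the end of the first incident-free
    window of `quiet_days` days. Returns None if no such window has completed by `as_of`."""
    incidents = sorted(d for d in launch.incident_dates if d >= launch.shipped_on)
    # The earliest quiet window always starts on launch day or the day after an incident.
    starts = [launch.shipped_on] + [d + timedelta(days=1) for d in incidents]
    for start in starts:
        end = start + timedelta(days=quiet_days)
        if any(start <= d < end for d in incidents):
            continue  # an incident interrupts this window
        if end > as_of:
            return None  # the earliest clean window has not finished yet
        return (end - launch.shipped_on).days
    return None


if __name__ == "__main__":
    # Illustrative values only.
    launch = Launch(
        shipped_on=date(2024, 3, 1), tasks_attempted=200, tasks_succeeded=174,
        users_at_launch=80, users_after_30_days=62,
        incident_dates=[date(2024, 3, 3), date(2024, 3, 10)],
    )
    print("volume:", volume_score([launch]))
    print(f"task success: {task_success_rate(launch):.0%}")
    print(f"30-day retention: {retention_30d(launch):.0%}")
    print("time-to-trust (days):", time_to_trust(launch, as_of=date(2024, 4, 15)))  # 24
```

The volume score counts this launch once no matter how it performed; the outcome functions are the ones that can tell a sticky launch from a noisy one.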

So if you’re wondering how to motivate the right moves, redesign the incentives to match those outcomes. Reward the work that actually builds and tests trust: broad eval coverage, real red-team findings, crisp documentation, and postmortems on every AI-assisted workflow. Leave vanity metrics behind, like sheer ticket counts or “number of prompts used”; they only create shallow cycles. If you’re leading a team, this means praising the bug report that catches a subtle risk, not just the script that spits out 500 lines in a minute.

When it’s time to actually roll this out, start small but visible. Pick a lighthouse team, one crew known for shipping solid work, to pilot outcome-focused AI adoption. Give them the trust-building playbook, and publish a dashboard showing how their outputs perform on quality, reversibility, and real-user trust. Set guardrails: decide what has to be human-reviewed and which changes get checked before deployment. Then hook those checks into CI for every workflow, so hitting your near-term commitments isn’t a gamble but a repeatable process. In a month, you’ll know what really moved.
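A minimal sketch of such a CI gate, assuming your pipeline can already tell which changed files were AI-assisted, human-approved, and passed their evals. The `Change` record, the `REVIEW_REQUIRED` path patterns, and the function names are all hypothetical; only `fnmatch` and `sys` come from the standard library.

```python
import fnmatch
import sys
from dataclasses import dataclass
from typing import List, Sequence

# Hypothetical policy: AI-assisted changes under these paths always need a human sign-off.
REVIEW_REQUIRED = ("migrations/*", "billing/*", "*.sql")


@dataclass
class Change:
    path: str
    ai_assisted: bool
    human_approved: bool
    evals_passed: bool


def violations(changes: Sequence[Change], review_required: Sequence[str] = REVIEW_REQUIRED) -> List[str]:
    """List every reason this set of changes should not deploy yet."""
    problems = []
    for c in changes:
        if not c.evals_passed:
            problems.append(f"{c.path}: evaluation checks failed")
        # fnmatchcase keeps matching case-sensitive on every OS; '*' also matches '/'.
        needs_review = c.ai_assisted and any(fnmatch.fnmatchcase(c.path, p) for p in review_required)
        if needs_review and not c.human_approved:
            problems.append(f"{c.path}: AI-assisted change needs human review")
    return problems


def main(changes: Sequence[Change]) -> int:
    problems = violations(changes)
    for p in problems:
        print(f"BLOCKED {p}", file=sys.stderr)
    return 1 if problems else 0  # a non-zero exit code fails the CI job
```

In a CI job you would typically end with `sys.exit(main(changes))`, so any violation fails the build; how you collect `changes` depends on your CI system and is left out here.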


To close, back to that weekend table: this is a decision you make in the open. With 10× leverage from AI staring you down, you’re faced with a clear fork. Will you use it to ship more, or to create more value?

I’ll be honest—I still second-guess what the right tradeoff looks like week to week. It keeps circling back to that same question, and sometimes I’m not sure my answer is any clearer than the first time my friend said it out loud. But maybe being willing to sit with that tension is part of the work now.

Enjoyed this post? For more insights on engineering leadership, mindful productivity, and navigating the modern workday, follow me on LinkedIn to stay inspired and join the conversation.