Balance AI and Manual Research: Orchestrating Reliable Debugging

From Tracing Env Vars to Rediscovering Real Debugging Skills

Just this week, I found myself deep in a Microsoft-owned GitHub repo, trying to trace the actual flow of an environment variable that, on paper, should have been straightforward. Here’s the setup: a deployment pipeline was breaking for reasons buried three layers down, in one of those ancient, undocumented env vars that only surfaces every few months. If you’ve ever had to explain why a particular config value shows up in only some environments, you know how quickly your mental model breaks down.

I started by searching for every mention across the repo, checking Dockerfiles, YAMLs, even scavenging through half-abandoned setup scripts dated before the last reorg. This kind of hunt feels familiar—a mix of relief when a clue surfaces and mild dread as dead ends pile up. In moments like this, you want progress, not just plausible theories.

I cut my teeth on manual research long before the AI-at-every-step era. The edge wasn’t clever prompting; it was knowing which rocks to turn over, which scraps of documentation to trust, and when to just read the code yourself. That muscle, I’ll admit, got built the hard way.

This time, I tried throwing a few pointed questions at an AI assistant. Nothing landed. I got back summaries, generic troubleshooting advice, and a few code snippets obviously cribbed from old docs. But an answer that esoteric, where a shadow config changed deployment behavior depending on a rarely set variable, just doesn’t surface in a chat log. Maybe you’ve hit this wall too: the moment you realize the model isn’t going to bail you out.

As soon as AI fell short, something kicked in. Old habits—the ones that got me through the scrappiest problems before all this automation. I was back to googling exact error strings, piecing together half-solutions from five-year-old Stack Overflow posts, parsing cryptic API docs written for someone, somewhere, with more context than me. And yeah, it was 2 a.m. when the answer finally snapped into place—a subtle cascade in the repo initialization, set years ago by someone now long gone. The thing is, none of these steps were fun, but every one was familiar. And honestly, it’s a reminder: those skills aren’t obsolete, no matter how slick our tools get.

Actually, I nearly derailed the whole search this week because a random Dockerfile line reminded me of a side project from last year. I spent fifteen minutes retracing a completely different bug just to check whether the pattern matched. It didn’t. All that wandering only looped me back to where I’d started, staring at the same env var that kicked off the hunt. Funny how your brain reaches for shortcuts that rarely land, yet sometimes a wrong turn shakes something loose.

This is the real lesson. Velocity isn’t about a single prompt anymore. The actual leverage comes from orchestration, mixing the speed of AI with those deeper manual digs. That’s how you move from stuck to progress reliably.

Over-Reliance on AI: Where the Cracks Start to Show

If prompting is your only skill, you’re fragile. The models are fast, no question, but they turn brittle the moment context hides in a maze of half-documented repos or references scattered across outdated docs. You know the feeling—everything looks reasonable in the output, yet you’re still guessing at what’s really happening under the hood. Relying blindly on those answers is like climbing a ladder with missing rungs. Momentum’s great until you hit that gap.

Here’s how things routinely break down when AI answers fail, and why over-reliance is risky. You’ll get hallucinated APIs that don’t exist, answers grounded in the wrong version, or flat-out ignorance of the domain-specific edge cases nobody thought to put in a training set. When training data is thin or the model misreads your intent, it fills the gaps with plausible-sounding guesses. That’s not a quirk; it’s a fundamental limitation.

Six months ago, I was convinced most troubleshooting would be automated by now. But there was a moment, right in the middle of that environment variable hunt, when each prompt just spun its wheels, and I lost hours before admitting nothing useful was coming back. It’s discouraging, staring at “best guesses” when you know you’re chasing edge behavior hidden in legacy code. The dip from “maybe I’ll find it” to “I’m stuck” can happen fast.

What pulls you out of that rut is old-fashioned craft. When AI stumbles, developer research skills—digging, filtering, and piecing together from multiple sources—are what separate good engineers from great ones. It’s not nostalgia. It’s being able to synthesize incomplete info into something usable.

Speed matters, a lot. But real resilience comes when you orchestrate the right mix, tapping AI for the quick wins while relying on manual checks for the deep cuts.

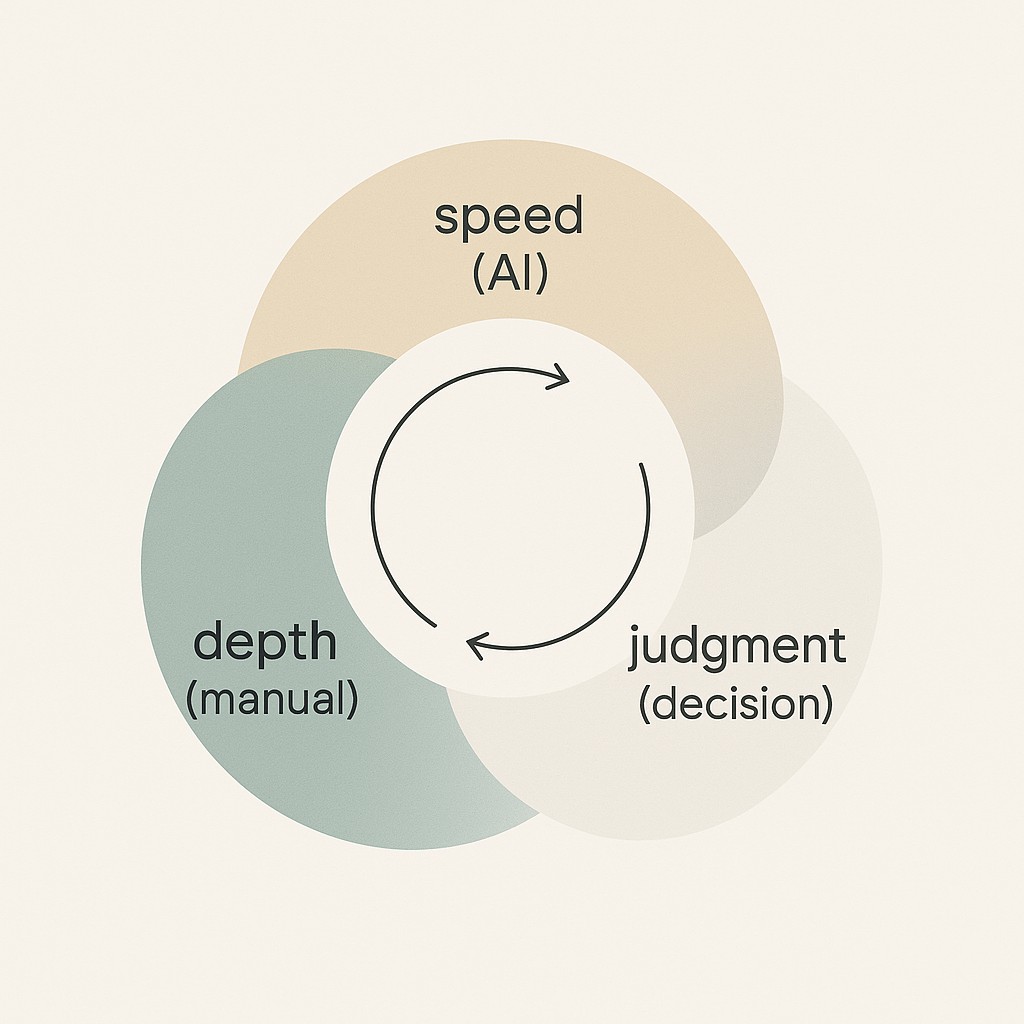

The Orchestration Loop: Speed, Depth, and Judgment in Sync

Orchestration, in practice, is a cycle, not a single move or tool. You start by using AI to map the space quickly, like sending out scouts to get the lay of the land before you commit. Next, you run manual probes—actually poking at the system, grepping through code, searching configs, testing assumptions. This is sampling, not blindly accepting the first answer.

Then comes judgment—the part that too often gets skipped. You take what AI surfaced and what you learned hands-on, weigh them side by side, and decide what truly holds up for this context. I’ve started thinking of it like scouting a trail, sampling water, and choosing the safest crossing. AI gets you a map, but only experience and a bit of guts get you across. Here’s the reframe. Don’t outsource the loop. Run it yourself, and use each tool for what it’s best at. That’s where autonomy and momentum really come from, especially when the answer isn’t obvious.

Back when I was learning to fix my bike, I’d watch a quick video for the basics, then spend an hour wrestling with rusty bolts, figuring out which steps actually applied. The video set the direction, but getting my hands dirty was the difference between “looks easy” and “actually fixed.” Debugging is no different: whatever you’re chasing, pairing the quick overview with deep, hands-on tinkering is what actually nails the solution.

Here’s the maxim I use, and maybe it’s worth writing on every sticky note in the office: use AI for speed, human knowledge for depth, and human-in-the-loop engineering so your own perspective decides what holds up. Blend those, and speed covers the easy wins, depth guards against blind spots, and judgment builds trust. Working this way also fits the spirit of the NIST AI Risk Management Framework, which emphasizes trustworthy, responsible use of AI rather than results alone. If you want sustainable autonomy under uncertainty, make this workflow your own.

Scale this up, and teams start relying on lightweight notes, decision logs, and repeatable checklists instead of one person’s memory. Orchestration holds whether you’re solo or coordinating a dozen people. That’s how the practice grows beyond surviving today’s bug.

From Stuck to Progress: The Workflow I Use When Models Miss

Step one, always: frame the problem so you know what you’re actually chasing. Jot down the question in terms you’d explain to a teammate—“Why does ENV_VAR override config in prod but not in staging?” Next, take stock of constraints: versions, environments, deployment style, anything that might shift the answer. And don’t trust the surface. Reproduce the issue yourself.
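To make “reproduce it yourself” concrete for a question like this one, a quick first check is diffing what each environment actually sees. The sketch below is a minimal, hypothetical helper: it assumes you can capture the output of `env` from a staging and a prod container, and the `APP_` variable names are made up for illustration.

```python
# Hypothetical helper: diff two `env` dumps to see which variables differ.
# Assumes plain KEY=VALUE lines; multi-line values are not handled.


def parse_env_dump(text: str) -> dict[str, str]:
    """Parse `env`-style output into a dict, skipping lines without '='."""
    result: dict[str, str] = {}
    for line in text.splitlines():
        if "=" not in line:
            continue
        key, _, value = line.partition("=")
        result[key] = value
    return result


def diff_env(a: dict[str, str], b: dict[str, str], prefix: str = "") -> dict:
    """Return {key: (value_in_a, value_in_b)} for keys whose values differ.

    A key absent from one side shows up as None there, so "unset" stays
    distinguishable from "set to an empty string".
    """
    keys = sorted(k for k in a.keys() | b.keys() if k.startswith(prefix))
    return {k: (a.get(k), b.get(k)) for k in keys if a.get(k) != b.get(k)}


# Illustrative snapshots; the APP_* names are made up.
staging = parse_env_dump("APP_MODE=staging\nAPP_TIMEOUT=30\n")
prod = parse_env_dump("APP_MODE=prod\nAPP_TIMEOUT=30\nAPP_LEGACY_OVERRIDE=1\n")
print(diff_env(staging, prod, prefix="APP_"))
# {'APP_LEGACY_OVERRIDE': (None, '1'), 'APP_MODE': ('staging', 'prod')}
```

A variable that exists in only one environment, like the made-up `APP_LEGACY_OVERRIDE` here, is exactly the kind of clue worth writing into the problem statement before searching further.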

This first pass is about mapping where the clues might hide: scan the repo top to bottom, sweep the docs for anything that actually explains the behavior, and check the issue tracker for MacGyver-style debugging threads. Getting precise early doesn’t just make things easier on yourself; it sets up every later step to go smoother. Good framing cuts down the back-and-forth.

The second step is to fan out, but with intent. I start with the codebase: literal repo-wide string searches for “ENV_VAR” and any aliases or camelCase variants. Then I look at open and closed issues, commit histories for hints about why the variable exists at all, and docs and release notes for sneaky changes. Don’t neglect the wider web, either. Google the exact error message, check Stack Overflow, and pay attention to old forum discussions. The key is not to take everything in at once.
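Here is a rough sketch of that repo-wide search in Python, for when you want the naming variants generated for you. The helper names (`name_variants`, `search_repo`) are hypothetical, and the variant list is an assumption about common naming conventions, not an exhaustive one; `git grep` or `rg` will be faster on a large repo.

```python
import os
import re
from pathlib import Path


def name_variants(env_name: str) -> set[str]:
    """Common spellings of a SCREAMING_SNAKE_CASE name (an assumption, not exhaustive)."""
    parts = env_name.lower().split("_")
    camel = parts[0] + "".join(p.capitalize() for p in parts[1:])
    return {
        env_name,                            # ENV_VAR
        env_name.lower(),                    # env_var
        camel,                               # envVar
        camel[:1].upper() + camel[1:],       # EnvVar
        env_name.lower().replace("_", "-"),  # env-var (CLI flags, some YAML keys)
    }


def search_repo(root, env_name, skip_dirs=(".git", "node_modules")):
    """Yield (path, line_number, line) for each line containing any variant.

    Variants are escaped and matched as plain substrings, so this is
    deliberately broad: MY_ENV_VAR_X will match ENV_VAR too.
    """
    pattern = re.compile("|".join(re.escape(v) for v in sorted(name_variants(env_name))))
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune VCS and vendored directories in place so os.walk skips them.
        dirnames[:] = [d for d in dirnames if d not in skip_dirs]
        for name in filenames:
            path = Path(dirpath) / name
            try:
                text = path.read_text(encoding="utf-8")
            except (UnicodeDecodeError, OSError):
                continue  # binary or unreadable file
            for lineno, line in enumerate(text.splitlines(), start=1):
                if pattern.search(line):
                    yield str(path), lineno, line.strip()
```

Run from the repo root, a loop like `for path, lineno, text in search_repo(".", "ENV_VAR"): print(path, lineno, text)` lists every hit with its location. Expect a few false positives to skim past; that is the price of not missing an alias.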

Skim, bookmark, and refine what you’re actually searching for as new info pops up. I’m always a little amused by how much of this comes down to finding the right question, not the perfect answer. Sometimes, late at night, I catch myself deep in the details—chasing code snippets posted by a long-gone contributor—and realize this is half the fun. You watch your search trails narrow as you build a mental map, and eventually something shifts. Patterns emerge, and you know which sources can be trusted and which are just more noise.

Step three is focused AI-assisted troubleshooting. I bring AI back in, but now it’s surgical. Ask targeted sub-questions instead of open-ended ones (“Which modules set ENV_VAR in the Docker build?” or “List every .env file that could override it.”). Sometimes I’ll ask it to propose search regexes or outline possible troubleshooting trees, but I always cross-check the output against primary docs or the actual code. If Stack Overflow or the docs contradict what the model gave you, trust the sources, not the summary.
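One cheap cross-check, sketched under the assumption that the assistant answered with a list of file paths: confirm each claimed file exists and actually mentions the variable before trusting the summary. `check_claimed_files` and the demo file names are hypothetical.

```python
import tempfile
from pathlib import Path


def check_claimed_files(root: str, claimed: list[str], needle: str) -> dict[str, str]:
    """Classify each file an AI assistant said references `needle`.

    Status values:
      "confirmed" - the file exists and contains the string
      "no-match"  - the file exists but never mentions it
      "missing"   - the file does not exist at all (a red flag for a hallucinated answer)
    """
    base = Path(root)
    results: dict[str, str] = {}
    for rel in claimed:
        path = base / rel
        if not path.is_file():
            results[rel] = "missing"
            continue
        text = path.read_text(encoding="utf-8", errors="replace")
        results[rel] = "confirmed" if needle in text else "no-match"
    return results


# Demo with a throwaway directory; file names are illustrative.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "Dockerfile").write_text("ENV ENV_VAR=1\n")
    (Path(tmp) / "deploy.yaml").write_text("replicas: 2\n")
    claims = ["Dockerfile", "deploy.yaml", "config/overrides.env"]
    print(check_claimed_files(tmp, claims, "ENV_VAR"))
    # {'Dockerfile': 'confirmed', 'deploy.yaml': 'no-match', 'config/overrides.env': 'missing'}
```

Anything marked “no-match” or “missing” goes back on the manual list; only “confirmed” entries earn a place in your notes.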

Once you’ve gathered enough pieces, it’s time to synthesize and test. Collect the relevant code snippets and config samples and turn them into a minimal repro you can actually run. Validate what you’ve found against interface contracts, API docs, and, crucially, the version notes or migration guides you dug up earlier. I’ll admit this is where I sometimes get tripped up by a subtle version mismatch or a long-forgotten release change. Document what worked and what failed, so the next time you or your team hit this, you’re not starting from zero. API docs are sneaky; always skim them twice.
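Here is what a minimal repro might look like for the prod-versus-staging question from step one. The loader is hypothetical: it assumes environment variables take precedence over a config file, which takes precedence over defaults, with an `APP_` prefix. Swap in whatever your real config code does; the point is a small, runnable check you can rerun after every change.

```python
# Minimal repro for an env-var precedence question (hypothetical loader).
# Assumed precedence: environment variable > config file > built-in default.


def resolve_setting(key: str, defaults: dict, file_config: dict,
                    environ: dict, env_prefix: str = "APP_") -> tuple[str, str]:
    """Return (value, source) so the repro shows where a value came from."""
    env_key = env_prefix + key.upper()
    if env_key in environ:
        return environ[env_key], "env"
    if key in file_config:
        return file_config[key], "file"
    return defaults[key], "default"


defaults = {"timeout": "30"}
file_config = {"timeout": "60"}        # same file shipped to both environments

staging_env: dict = {}                 # override not set
prod_env = {"APP_TIMEOUT": "5"}        # illustrative override present only in prod

assert resolve_setting("timeout", defaults, file_config, staging_env) == ("60", "file")
assert resolve_setting("timeout", defaults, file_config, prod_env) == ("5", "env")
print("repro confirmed: prod reads the environment, staging reads the file")
```

Returning the source alongside the value is the useful design choice here: it answers why a setting has its value, not just what the value is. Against a live process you would pass `dict(os.environ)` instead of the hand-built dicts.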

Last step: decide and ship. Use your judgment, ideally backed by an explicit technical decision framework, to choose what’s safe to automate or refactor. Add guardrails: env validation, sanity checks, a comment on the gnarly line. Note any lingering open questions, maybe in the next sprint-planning thread. Turn your findings into a cheat sheet or a short wiki entry so nobody has to rediscover the edge case. If a forum post led you here, pay it forward by posting your cleaned-up answer. This closes the loop and unblocks the next engineer.
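For the env-validation guardrail, a minimal fail-fast check at startup might look like this. `validate_env`, `ConfigError`, and the example variable names are hypothetical; if your framework already ships settings validation, that is usually the better choice.

```python
from __future__ import annotations

from collections.abc import Mapping


class ConfigError(RuntimeError):
    """Raised at startup when required environment variables are wrong."""


def validate_env(environ: Mapping[str, str], rules: Mapping[str, set[str] | None]) -> None:
    """Fail fast if any variable is missing, empty, or outside its allowed values.

    `rules` maps a variable name to a set of allowed values, or to None when
    any non-empty value is acceptable. Every problem is reported in one error.
    """
    problems: list[str] = []
    for name, allowed in rules.items():
        value = environ.get(name)
        if not value:
            problems.append(f"{name} is unset or empty")
        elif allowed is not None and value not in allowed:
            problems.append(f"{name}={value!r} not in {sorted(allowed)}")
    if problems:
        raise ConfigError("; ".join(problems))
```

Called once at startup, for example `validate_env(os.environ, {"APP_MODE": {"staging", "prod"}, "DATABASE_URL": None})`, it turns a silent misconfiguration into a loud, early failure that names every problem at once.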


That’s the workflow: problem framing, intelligent search, strategic AI, synthesis and testing, then a decision the team can reuse. Every time I do this, the real leverage comes not from the quick hit but from the full orchestration, blending speed, depth, and judgment so the process holds up under real-world uncertainty. Stick with it, and your autonomy and reliability won’t be bottlenecked by the next model update or an obscure config.

Scaling Orchestration: Redefining Time, Velocity, and Team Resilience

First, let’s talk about time. The biggest objection I hear is, “Manual search eats hours—AI could solve this in one step.” But that isn’t how it plays out. What actually burned time in my recent env var chase was spinning my wheels waiting for model outputs that didn’t land.

The moment I started orchestrating (AI for mapping, manual probes for answers, a running log throughout), the search resolved faster and left notes I could reuse next time. You’re not just insuring against dead ends; you’re building speed into every future encounter with this problem. Leaders can reinforce this by coaching teams on when and how to use AI well. If you’ve ever retraced someone else’s messy trail, you know durable notes are worth ten good prompts.

Next comes the worry about velocity: will all this double-checking slow us down? In practice, the opposite happens. When you blend targeted AI with manual verification, rework drops, and as templates and notes accumulate across tasks, velocity compounds instead of stalling. Even with Copilot handling the boilerplate, I’ve seen teams reach 90% agreement on what ships: speed without the usual fallout.

Scaling this is easier than it looks. Lightweight practices—research logs for decisions, repo-wide code search conventions, peer review checklists—turn orchestration into a habit the whole team can maintain. As projects grow, this isn’t overhead; it’s scaffolding, and it means future engineers step onto solid ground, not into a maze.
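A research log doesn’t need special tooling. As one possible shape, here is a hypothetical append-only log in JSON Lines format, where each entry records the question, where the answer came from, and whether it was verified by hand. The field names are assumptions; adapt them to your team’s conventions.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from pathlib import Path


@dataclass
class LogEntry:
    """One research step: what was asked, where the answer came from, whether it held up."""
    question: str
    source: str      # e.g. "repo search", "issue tracker", "AI assistant"
    finding: str
    verified: bool   # True only after checking code, docs, or a repro
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat(timespec="seconds")
    )


def append_entry(log_path: Path, entry: LogEntry) -> None:
    """Append one JSON object per line so the log stays greppable and diff-friendly."""
    with log_path.open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(asdict(entry)) + "\n")


def unverified(log_path: Path) -> list[dict]:
    """Return entries that still need a manual check (empty if no log yet)."""
    if not log_path.exists():
        return []
    with log_path.open(encoding="utf-8") as fh:
        entries = (json.loads(line) for line in fh if line.strip())
        return [e for e in entries if not e["verified"]]
```

Calling `append_entry` after each probe and `unverified` before closing the ticket is about all the ceremony it needs, and the file can live in the repo next to the code it explains.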

Bottom line: resilience isn’t optional. If you want autonomy under incomplete information, blend AI prompting with hard-earned resourcefulness, and make it a shared habit. Prompting is power. Resourcefulness is leverage. Together, they future-proof your engineering.

Enjoyed this post? For more insights on engineering leadership, mindful productivity, and navigating the modern workday, follow me on LinkedIn to stay inspired and join the conversation.