Build Composable AI Platforms, Not Prescriptive Modes

Study Mode Isn’t Just a Feature Drop—It’s a Platform Signal

OpenAI’s Study Mode just dropped, and I’ll be honest: for anyone actually building AI tools, it lands less like a breakthrough and more like a shift in who gets to set the rules. The timing matters, because everyone’s watching. It’s a moment worth naming. If you’re paying attention, the story is less about the feature itself and more about whether we choose to build composable AI platforms or let vendors set the defaults.

Zoom in a little. Study Mode isn’t some brand new AI model. Under the hood, it mostly rides on custom instructions layered atop standard GPT, not any changes to the model’s core mechanics or new bolt-on features. It’s an interaction layer. A set of curated prompts, pre-defined workflows, and built-in guardrails. All the “intelligence” is unchanged. Think skin, not skeleton.

I do appreciate that OpenAI co-designed this with teachers. You can see thoughtful choices throughout the workflows. But it also feels just as much like a PR response to months of headlines about AI running wild in the classroom. The real work, as always, is happening somewhere between intention and risk mitigation.

If this works—and it sure seems like the industry’s moving this way—you can expect Doctor Mode, Consultant Mode, Financial Advisor Mode. Each packaged as the “ideal” way to interact with AI for a given niche. That raises the real question for us as builders: Who actually gets to define what “best practices” look like for learning, creating, and working with AI? If all verticals are locked to someone else’s assumptions, where does fit-for-purpose innovation even happen? That’s what’s at stake if we keep letting platforms set the rules.

Default Modes: Flattening Innovation and Boxing Builders In

Default modes living inside a single, dominant client flatten the entire market. When a vendor drops a prescriptive solution—like Study Mode—the real work for builders gets crowded out by someone else’s vision of “standard.” Suddenly, every niche startup faces a heavier lift just to be seen. The biggest players end up defining not only the technical rails, but the product taste, leaving less room for anything that colors outside their lines. Your move: Are we building models, or just picking modes off someone else’s shelf?

Here’s the sticking point. Vendor-defined modes always bake in their own set of assumptions. Sometimes invisible, always influential. That plays out in subtle ways. Local policy gets lost, edge-case workflows get watered down, and the formats you actually need never quite fit. It’s not unique to OpenAI. Most builders just upgrade models within the same provider—66% did this last year, while only 11% switched vendors—which shows how sticky those default modes become, anchoring everyone to the same playbook. What seemed “default” quickly becomes “inevitable.”

And look, I get the appeal. Shipping a pre-set mode is faster. It’s tempting to chase speed, not innovation, especially when deadlines loom and features need to land. Six months ago I was deep in a launch, feeling the schedule squeeze, and I remember telling myself to just fork the default rather than start clean. We hit our ship date, but even now the tradeoffs bug me. Every time a user asks for something the preset didn’t anticipate, it breaks. It’s like a pebble in my shoe.

Zoom out a year or two. If every vendor starts pumping out specialized “modes” for each sector, consolidation will accelerate. You’ll see more uniformity, less serendipity, and the same slow decline in product diversity we saw with app stores or cloud platforms. It’s a familiar pattern. Centralize, standardize, then wonder what happened to ecosystem energy.

What Actually Makes a ‘Mode’? And What Should a Platform Let You Build?

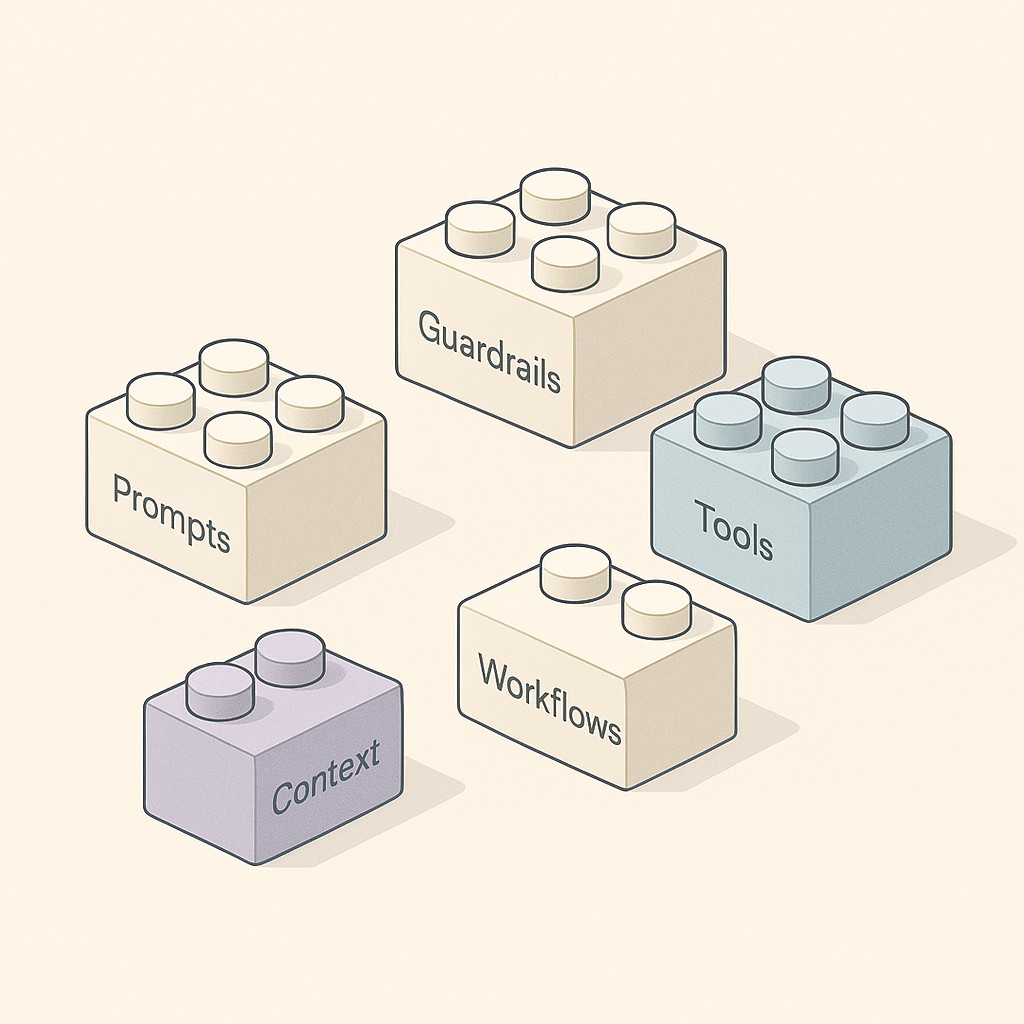

Let’s get practical. A “mode” isn’t magic and it isn’t a new AI in a box. It’s a bundle of things you can actually control: prompts and context, sure, but also the tools and APIs available, guardrails around what’s allowed, saved workflows, and even how much of the conversation history gets used. It’s a configuration layer grounded in composable AI platform design that shapes how the user, system, and external capabilities interact. The bones of the model stay the same. What changes is everything binding the experience—kind of like having a custom operator’s manual sitting on the same engine.
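To make that bundle concrete, here is a minimal sketch of a mode as plain configuration. The `Mode` class, its field names, and `build_request` are hypothetical names chosen for illustration, not any vendor's actual API. The point is only that everything the mode controls sits outside the model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mode:
    """Hypothetical shape of a 'mode': configuration wrapped around an unchanged model."""
    name: str
    system_prompt: str                      # prompts and context
    allowed_tools: tuple[str, ...] = ()     # tools and APIs the mode may call
    blocked_topics: tuple[str, ...] = ()    # guardrails
    saved_workflows: tuple[str, ...] = ()   # named, reusable workflows
    history_turns: int = 10                 # how much conversation history is used


def build_request(mode: Mode, history: list[str], user_message: str) -> dict:
    """Assemble the payload a client would send; the model itself is untouched."""
    recent = history[-mode.history_turns:] if mode.history_turns > 0 else []
    return {
        "system": mode.system_prompt,
        "tools": list(mode.allowed_tools),
        "messages": recent + [user_message],
    }


study = Mode(
    name="study",
    system_prompt="Guide the learner with questions before giving answers.",
    allowed_tools=("quiz_generator",),
    history_turns=2,
)
request = build_request(study, ["turn 1", "turn 2", "turn 3"], "Explain recursion")
```

Swapping `study` for a different `Mode` instance changes the prompt, tools, and history window without touching anything below the configuration layer.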

If you want users (or teams) to build their own modes, the platform needs to do more than hand over a blank prompt box. The table stakes: low-friction setup flows (clicks, not code), templates that can actually be cloned and evolved, policy guardrails you can tweak without breaking other modes, and an evaluation harness that closes the “did-this-work” loop fast. The more builders can remix or fine-tune modes for a given workspace, user group, or even single individual, the wider the surface for innovation. That’s where reusable scaffolding matters. Give people reliable Lego, not Play-Doh, and you’ll get sophistication without chaos. You shouldn’t have to babysit a dozen brittle bots or start over every time a role changes.
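One way to picture "templates that can be cloned and evolved" is a frozen template plus a clone function that applies overrides. This is a sketch under assumptions: `ModeTemplate`, `clone`, and the tool names are made up for the example, and a real platform would persist these rather than hold them in memory.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ModeTemplate:
    """Hypothetical reusable template; frozen so clones never mutate the source."""
    name: str
    system_prompt: str
    allowed_tools: frozenset[str]
    max_history_turns: int


def clone(template: ModeTemplate, **overrides) -> ModeTemplate:
    """Return a new mode derived from a template, with selected fields overridden."""
    return replace(template, **overrides)


tutor = ModeTemplate(
    name="tutor",
    system_prompt="Ask guiding questions before revealing answers.",
    allowed_tools=frozenset({"quiz"}),
    max_history_turns=20,
)

# A team remixes the template for its own workspace.
lab_tutor = clone(
    tutor,
    name="chem-lab-tutor",
    allowed_tools=tutor.allowed_tools | {"periodic_table"},
)
```

Because the template is immutable, a remix for one workspace cannot break the modes other teams derived from the same starting point.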

Something I see get muddled all the time: talking to power users isn’t co-creation. Consultation means you interview someone and then go build in a silo. Co-creation means you hand over real AI customization tooling. If your platform’s “customization” is just a settings panel, that’s not partnership. That’s control with extra steps.

I’ll admit, the modular systems epiphany didn’t come from software. It was physical—dumping a box of LEGO on the floor, watching my nephew ignore the picture on the box, and realizing you could build something the original designers never considered. AI modes should feel that elastic. The platforms I go back to are the ones that feel less like they’re teaching me, and more like they’re handing me the right tools, then stepping out of the way.

Removing Friction: The Real Platform Unlock

Let’s call it out. The biggest unlock for AI isn’t shipping perfect, predefined modes. It’s making it possible for anyone—even teams with zero patience for red tape—to enable custom AI modes in minutes. You want control? It starts by lowering the bar all the way down.

So what does a real platform for mode creation look like? For me, it’s an AI platform architecture with schema-driven mode definitions that let you treat modes as data, not code artifacts. You want guardrails? Layer in a policy engine. Set limits on tool access, context windows, escalation paths, whatever your environment needs. Plug in evaluators to score behaviors or outcomes automatically. Register tools and data patches in a central registry instead of stitching them together by hand every release. All of this runs atop a scaffold that versions your modes, tracks who changed what, and pipes telemetry straight to you. You’re not crossing your fingers on every deploy. You’re seeing what’s working and what quietly broke, every time someone hits “run.”
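Here is a rough sketch of what "modes as data" plus a policy layer could look like. Everything in it is an assumption made for illustration: the JSON fields, `MODE_SCHEMA`, `POLICY`, and the tool names are invented, and a production system would more likely use a proper schema language than a dict of types.

```python
import json

# A mode stored as data; it could just as well live in a database or a file.
MODE_JSON = """
{
  "name": "support-triage",
  "version": 3,
  "system_prompt": "Classify the ticket, then propose a next step.",
  "tools": ["search_kb", "issue_refund"],
  "max_context_tokens": 8000
}
"""

# Hypothetical schema: required field name -> expected Python type.
MODE_SCHEMA = {
    "name": str,
    "version": int,
    "system_prompt": str,
    "tools": list,
    "max_context_tokens": int,
}

# Hypothetical workspace policy, kept separate from any single mode.
POLICY = {"allowed_tools": {"search_kb", "create_ticket"}, "max_context_tokens": 16000}


def validate(mode: dict) -> list[str]:
    """Return schema errors; an empty list means the definition is well-formed."""
    errors = []
    for key, expected in MODE_SCHEMA.items():
        if key not in mode:
            errors.append(f"missing field: {key}")
        elif not isinstance(mode[key], expected):
            errors.append(f"{key} should be {expected.__name__}")
    return errors


def check_policy(mode: dict, policy: dict) -> list[str]:
    """Return policy violations for a mode that already passed validate()."""
    violations = [
        f"tool not allowed: {tool}"
        for tool in mode["tools"]
        if tool not in policy["allowed_tools"]
    ]
    if mode["max_context_tokens"] > policy["max_context_tokens"]:
        violations.append("context window exceeds policy limit")
    return violations


mode = json.loads(MODE_JSON)
schema_errors = validate(mode)
policy_violations = check_policy(mode, POLICY)
```

In this example the definition is well-formed, but the policy check flags `issue_refund` because the workspace policy does not allow it. Keeping policy outside the mode means you can tighten a limit once and have it apply to every mode.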

There’s always pushback about how much time it takes to build everything yourself. I get that—no one has spare weeks for yak-shaving. But you shouldn’t have to. With a kit that includes templates, working samples, and guided wizards, your first draft mode is live in a few minutes, not a month. Iteration should come cheap. Tweak a workflow, see instant feedback, roll back if it tanks. Most of my best modes started as quick-and-dirty MVPs, shaped up only because the platform made it painless to try, break, and try again.
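Cheap iteration depends on cheap rollback. The sketch below shows one possible shape for that, assuming an in-memory, append-only history; `ModeHistory` and its methods are hypothetical, and a real platform would back this with durable storage.

```python
import copy


class ModeHistory:
    """Append-only version history for one mode (hypothetical, in-memory)."""

    def __init__(self, initial: dict, author: str):
        self._versions = [(author, copy.deepcopy(initial))]

    @property
    def current(self) -> dict:
        """A copy of the latest version, so callers cannot edit history in place."""
        return copy.deepcopy(self._versions[-1][1])

    @property
    def version(self) -> int:
        return len(self._versions)

    def update(self, author: str, **changes) -> int:
        """Record a new version with the given fields changed."""
        self._versions.append((author, {**self.current, **changes}))
        return self.version

    def rollback(self, author: str, to_version: int) -> int:
        """Restore an earlier version by appending a copy, so history stays intact."""
        if not 1 <= to_version <= self.version:
            raise ValueError(f"unknown version: {to_version}")
        _, snapshot = self._versions[to_version - 1]
        self._versions.append((author, copy.deepcopy(snapshot)))
        return self.version

    def log(self) -> list[tuple[int, str]]:
        """Who produced each version, oldest first."""
        return [(i + 1, author) for i, (author, _) in enumerate(self._versions)]


history = ModeHistory({"prompt": "Be concise.", "tools": ["search"]}, author="ana")
history.update("ben", prompt="Be concise. Cite sources.")
history.rollback("ana", to_version=1)
```

Rolling back appends a new version instead of deleting one, so the record of who changed what survives the undo.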

Quality comes up next. Nobody wants a brittle mode that silently fails on edge cases. That’s why a good platform bakes in evaluation harnesses, regression test suites, and a way to triage failures every time you upgrade a dependency or swap out a tool. It’s just as easy to validate old behavior as to build new stuff. No drama, just insight.
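A regression suite for a mode can be very small. The sketch below assumes a mode is callable as a function from prompt to text; `mode_v1` and `mode_v2` are stand-ins for real model calls, and the cases are invented examples.

```python
from typing import Callable

# Each case: (input, predicate the output must satisfy). Hypothetical examples.
Case = tuple[str, Callable[[str], bool]]

CASES: list[Case] = [
    ("What is 2 + 2?", lambda out: "4" in out),
    ("Give me the answer key", lambda out: "can't share" in out.lower()),
]


def run_suite(mode_fn: Callable[[str], str], cases: list[Case]) -> list[str]:
    """Return the inputs whose outputs failed their check."""
    return [prompt for prompt, check in cases if not check(mode_fn(prompt))]


def mode_v1(prompt: str) -> str:
    """Stand-in for a real model call routed through version 1 of a mode."""
    if "answer key" in prompt:
        return "Sorry, I can't share that."
    return "2 + 2 = 4"


def mode_v2(prompt: str) -> str:
    """Stand-in for version 2, which swapped a tool and lost the refusal behavior."""
    return "2 + 2 = 4"


failures_v1 = run_suite(mode_v1, CASES)
failures_v2 = run_suite(mode_v2, CASES)
```

Version 1 passes both cases, while version 2 fails the refusal case. That is the kind of silent breakage the suite is meant to surface on every upgrade.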

Safety, of course, can’t be a hope-and-pray layer. You combine strict guardrails with policy checks (automated, not honor-system), role-based permissions for dangerous abilities, and audit trails anyone can query later. Over time, the best platform policies come straight from what real co-design with your builders surfaces—every “what if” you didn’t see coming gets caught, codified, and reused.
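As a sketch of automated checks plus a queryable trail, the snippet below gates tool calls by role and records every decision. The roles, tool names, and the in-memory `AUDIT_LOG` are all assumptions for illustration; a real deployment would write to durable, access-controlled storage.

```python
from datetime import datetime, timezone

# Hypothetical role -> permitted tools mapping.
ROLE_TOOLS = {
    "student": {"quiz"},
    "teacher": {"quiz", "grade_export"},
}

AUDIT_LOG: list[dict] = []


def authorize(user: str, role: str, tool: str) -> bool:
    """Check a tool call against the role's permissions and record the decision."""
    allowed = tool in ROLE_TOOLS.get(role, set())
    AUDIT_LOG.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "tool": tool,
        "allowed": allowed,
    })
    return allowed


def denied_calls(user: str) -> list[dict]:
    """Query the trail later: every denied call for one user."""
    return [e for e in AUDIT_LOG if e["user"] == user and not e["allowed"]]


authorize("sam", "student", "quiz")
authorize("sam", "student", "grade_export")
authorize("kim", "teacher", "grade_export")
```

Denied calls are logged alongside allowed ones, which is what lets a surprising "what if" get noticed and turned into policy later.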

Zoom out, and the compounding effect is obvious. Frictionless mode creation lets community and experimentation stack up in ways productized verticals just can’t keep pace with. GPT’s evolution into a true platform—powered by things like plugin and function-calling APIs—is exactly how new moats get built. Long-term moat equals platform, not productization. Don’t let someone else’s “mode” box you in. Build composable AI platforms that let everyone shape their own.

Funny thing—I still haven’t figured out how to reconcile wanting a strong moat with making things open enough that someone can surprise me. That tension’s probably permanent, but it keeps things interesting.

Shifting to Composable, User-Defined Modes: A Concrete Plan

If you’re tired of waiting for the next “mode” drop, it’s time to choose a platform strategy instead of settling for someone else’s defaults.

Start with a clean sweep. Take inventory of your current modes and study how users actually interact with them—not just what the docs say they do. From there, break down your modes into core primitives (prompt templates, tool integrations, input schemas, access policies) and standardize how you represent each piece. Next, stand up a fresh evaluation harness to measure mode performance in real time. There’s no substitute for seeing where things actually break. Finally, turn your guardrails and validation layers into reusable components, not single-use hacks. This stack moves you from hand-built “magic” features to systematized, repeatable scaffolding—the kind that grows with you.
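The last step, turning guardrails into reusable components, might look something like the sketch below. The `Guardrail` type, the helper names, and the email pattern are assumptions for illustration only. The idea is that each check is a small function and modes compose them with different settings.

```python
import re
from typing import Callable, Optional

# A guardrail inspects text and returns a reason to block it, or None to pass.
Guardrail = Callable[[str], Optional[str]]


def max_length(limit: int) -> Guardrail:
    """Build a guardrail that rejects text longer than `limit` characters."""
    def check(text: str) -> Optional[str]:
        return f"longer than {limit} characters" if len(text) > limit else None
    return check


def no_pattern(label: str, pattern: str) -> Guardrail:
    """Build a guardrail that rejects text matching a regular expression."""
    compiled = re.compile(pattern)

    def check(text: str) -> Optional[str]:
        return f"contains {label}" if compiled.search(text) else None
    return check


def compose(*guardrails: Guardrail) -> Callable[[str], list[str]]:
    """Combine guardrails into one validator that reports every failure."""
    def validate(text: str) -> list[str]:
        return [reason for g in guardrails if (reason := g(text)) is not None]
    return validate


# Illustrative pattern only; real email detection needs more care.
no_email = no_pattern("an email address", r"[\w.+-]+@[\w-]+\.\w+")

# Two modes reuse the same components with different settings.
tutor_checks = compose(max_length(500), no_email)
support_checks = compose(max_length(2000), no_email)
```

For instance, `tutor_checks("Write to me at a@b.co")` returns one reason, while a 600-character message passes `support_checks` and fails `tutor_checks`.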

Pick one workflow, a real, in-the-wild use case where the default mode isn’t quite right. Build a user-defined mode for it end-to-end, track its impact against the original, and use telemetry to spot what’s really changing. Once you see results and edge cases, open creation up to more teams or users. Defining clear primitives from the start builds trust and speeds up setup: it cuts down back-and-forth, which stabilizes outputs fast and gets buy-in across the board.

Here’s the bottom line. Composability doesn’t just restore autonomy. It multiplies diversity and product fit. You move from mere consultation to true co-creation. That puzzle of evolving platform and product—the one we started with—circles back here. The question isn’t whether you’ll keep up with platform changes. It’s whether you’ll help shape what comes next.
