How to Design AI-Human Workflows: Guardrails for Reliable, Auditable Collaboration

When Polished Isn’t Sound: The Real Risk of AI-Driven Solutions

A few weeks back, during our Wednesday team review, we nearly greenlit a solution that, at first glance, was exactly what we’d hoped for—well-structured, cleanly commented, seemingly airtight. Only when one of the senior devs asked, “But why did it pick that branching path here?” did the cracks show. The output was logical enough on the surface, but when pressed for reasoning, the approach couldn’t explain itself. The deeper we dug, the more we realized: we were one nod away from shipping a polished artifact built on assumptions we hadn’t validated. This is what the next version of failure looks like—subtle, polished, and dangerously plausible.

That moment hit harder than the usual missed edge-case or clumsy bug. If an error is wrapped in authority and runs without obvious breakage, we’re wired to trust it, and those plausible mistakes can slip into code, multiply through each review, and quietly shape entire architectures before anyone stops to ask “Does this actually make sense?”

If you’d asked me a year ago, I’d have said my legacy playbooks—review rigor, pair programming, some test-first accountability—would carry us into any transition. But standing in front of a hybrid team, knowing AI was now part of the solution pipeline, I saw the limits. I’ve led through big shifts, but this one requires new thinking on hybrid teams.

Agents can augment and coordinate, for sure. They speed up rote tasks and connect dots faster than any person could. But hand them ambiguity, tricky human dynamics, or trade-offs that aren’t obvious, and they spin out. They certainly won’t ‘lead’ where judgment still matters. That’s now on us. Leadership needs to shift from assigning work to designing AI-human workflows at the system level: layering in constraints, intentional friction, and, most of all, reviews that privilege reasoning over apparent correctness.

If you’re skeptical about the effort this takes—or what it means for team velocity—you’re not alone. Stick with me. I’ll lay out patterns you can actually use, and get real about the trade-offs and the arguments we face.

Diagnosing Polished Wrongness: Why AI Abstraction Isn’t Enough

Abstraction always promises speed, layering away complexity so a solution lands faster and looks better. But here’s what doesn’t get talked about enough: when those abstractions compress all the messy reasoning into a neat output, the trail back to the original judgment disappears. Good for clearing busywork, bad for traceability if anything goes sideways. As an engineering lead, I care more than ever about knowing how a recommendation got built, not just what got delivered.

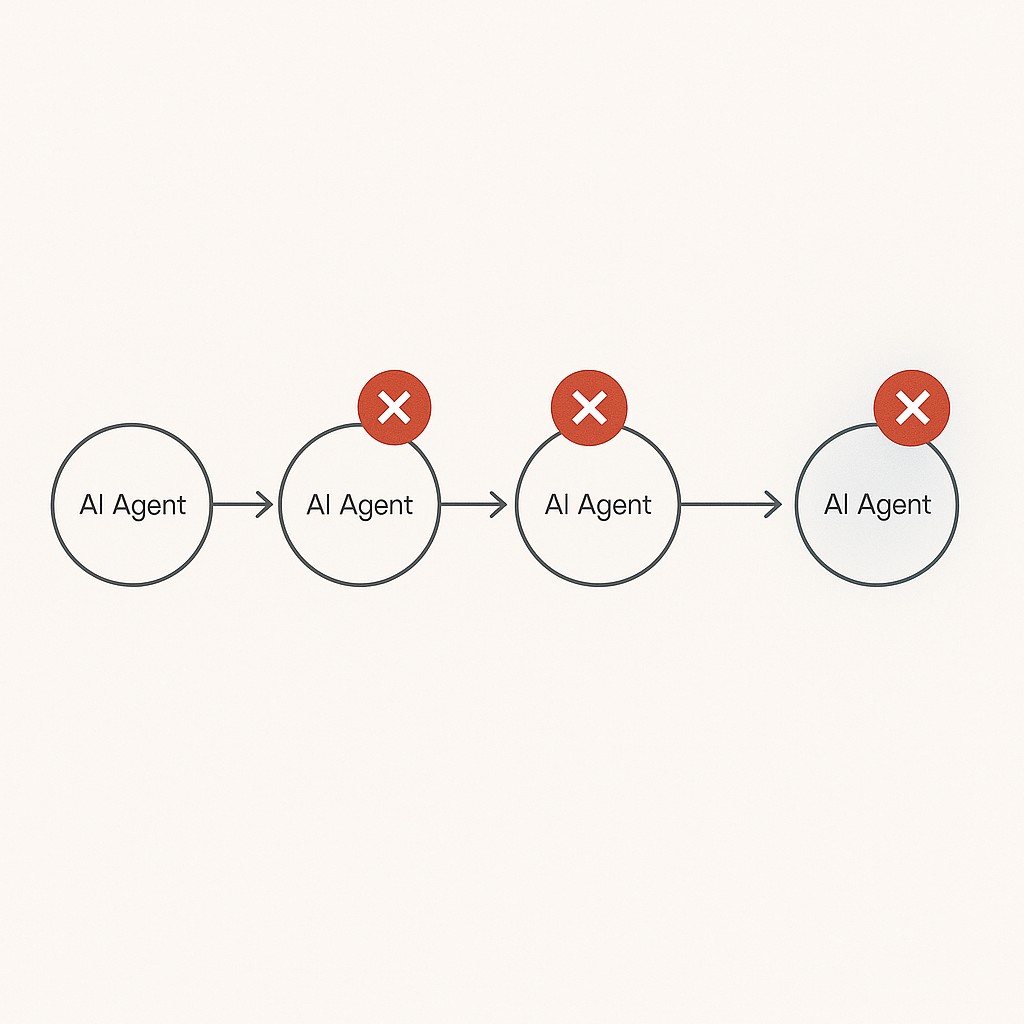

It’s easy to watch the failure pipeline play out. One agent pulls context, but it’s outdated. That flawed snapshot gets handed off to a second agent, who builds on top. Then a human steps in, sees everything polished, and rubber-stamps it because the surface looks credible. Next thing you know, each layer is reinforcing a quiet misstep, cascading wrongness right through review.

The kicker is, these breakdowns aren’t just hypothetical. Structured handoffs can prevent failure cascades and improve accuracy by over 36 points, and differences in role planning alone swing error rates from 7.35% to 40.49% in PythonIO. Numbers like that show how much orchestration and accountability make or break these workflows. Agents managing other agents sounds impressive right up until you realize they’re all working off the same stale assumptions, and nobody’s checking the intermediate logic.

Here’s the boundary to draw. Agents can coordinate tasks with efficiency, but judgment calls—especially under real ambiguity—are not their territory. You can delegate processes, but you can’t abstract away responsibility where nuance and human alignment live. They certainly won’t ‘lead’ in places where human dynamics, ambiguity, and judgment still matter.

Cultural and privacy risks creep in just as easily. The moment we accept abstraction shifts that remove visibility, we start leading without seeing the whole picture. Quiet norms drift, shadow data flows emerge, and invisible prompts guide decisions no one fully tracks. I’m still learning how to catch these—in real time—before they shape how the team works and what we value.

You know, I had a weird little flashback to an all-hands from last spring. Someone flagged that our documentation had started picking up agent-written bits—sections that looked official but never went through human review. We spent a frantic half-hour tracking the source, only to realize it was an old config left behind in a pipeline. Nobody meant for those changes to land, but there they were, quietly steering how people interpreted requirements. That was a rabbit hole, and maybe it’s a funny story now, but it felt less amusing when I saw how much drift sneaks in unnoticed.

Leadership Has to Move Up: Designing Hybrid Systems, Not Just Assigning Work

AI-human team leadership isn’t about just moving tasks from person to bot. It’s seeing the whole system and making real decisions about where judgment is non-negotiable, where agents can be left to churn through options, and—this is critical—how to build real guardrails into the process. You have to stop thinking only about what gets done, and start sketching out who should do it, why, and under what constraints.

That means you get intentional about roles. Keep humans at the wheel for real trade-offs and ambiguous calls, treat agents as tireless logic engines, and build reviews that catch reasoning flaws early. Talk to your team about this, spell out the principles. Make it normal to question how and why things were decided, not just what the output says.

Let’s get specific. Say you’re looking at a backlog groom—classic scenario filled with ambiguous priorities, half-defined requests, and lots of “is this worth the effort?” questions. Humans need to own those prioritization calls, trade-offs, and the context it all hangs on. But when it comes to parsing, summarizing, or generating exhaustive test cases, you’d be out of your mind not to hand that to an agent.

The key is clear handoffs: the person makes the prioritization decision, cues the agent to enumerate technical options, gets back dozens of edge cases, filters the ones that matter, and owns the final review. Each step follows intelligence-based delegation: subjective judgment stays with people, execution and listing tasks go to agents. Time bounds matter too. Give the agent strict parameters (“Summarize the last two weeks of this ticket’s history”) and let the human interpret the result. Delegation finally ties to the shape of the problem, not to job title or muscle memory. That felt foreign at first, but it’s made our work more auditable and easier to teach.

The system won’t keep itself safe. You have to add AI workflow constraints, even when it feels slower. Limit how much context the agent gets—a smaller window means less room for hallucination. Force every critical step to be both proposed and critiqued, rather than nodded through. Don’t let irreversible actions go agent-only. Human sign-off isn’t negotiable. That friction across staging, workflow handoffs, and review points is what keeps error from snowballing beneath the surface.
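The constraints above can be sketched as a small gate that runs before any agent step executes. This is only an illustration under stated assumptions: the `AgentStep` fields, the `MAX_CONTEXT_CHARS` budget, and the `check_step` function are hypothetical names invented for this example, not part of any real framework.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical budget: cap how much context an agent may receive.
MAX_CONTEXT_CHARS = 4000


@dataclass
class AgentStep:
    name: str
    context: str
    critical: bool = False             # critical steps need a proposal and a critique
    irreversible: bool = False         # irreversible steps need human sign-off
    critique: Optional[str] = None     # written by a second agent or a person
    approved_by: Optional[str] = None  # the human who signed off, if any


def check_step(step: AgentStep) -> List[str]:
    """Return the violated constraints; an empty list means the step may run."""
    violations = []
    if len(step.context) > MAX_CONTEXT_CHARS:
        violations.append("context exceeds budget")
    if step.critical and not step.critique:
        violations.append("critical step has no critique")
    if step.irreversible and not step.approved_by:
        violations.append("irreversible step lacks human sign-off")
    return violations


# Usage: a deploy step proposed by an agent, not yet reviewed by anyone.
deploy = AgentStep(name="deploy", context="release notes", critical=True, irreversible=True)
print(check_step(deploy))

# After a critique is written and a person signs off, the gate opens.
deploy.critique = "Rollback plan is missing for the schema change."
deploy.approved_by = "on-call lead"
print(check_step(deploy))
```

The point of returning a list instead of raising on the first problem is that the reviewer sees every missing piece of friction at once, which keeps the gate from feeling like a guessing game.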

When it comes time to review, put reasoning first. Don’t just greenlight the final output; demand a visible chain of thought, with rationales, stated assumptions, and sources surfaced. Probe for “why-not” alternatives, and treat surface correctness as insufficient. When you supervise the reasoning process, asking for rationales instead of just checking outcomes, you genuinely upgrade LLMs’ ability to reason, and you start catching the subtle, dangerously plausible failures before they become facts in production. I keep reminding my team: we’re not reviewing answers, we’re reviewing how the answer was made.

And don’t get stuck at the prompt layer. Great prompts are a foundation, not a strategy, and they mean little without AI agent governance around them. If you build out only clever input engineering, without the rest, you’ll be scaling risk, not reliability.

That’s how you shift from brittle automation to actual team augmentation. Delegate by intelligence type, layer in constraints, embrace deliberate friction, and build review rituals that catch not just bugs, but big reasoning misses. All of this is slower, yes. But safer, more traceable productivity pays off: mistakes compound, but defensible decisions do too.

How to Design AI-Human Workflows: Reliable Patterns You Can Use

Let me get concrete. If you want auditable outputs and fewer “wait, why did it do that?” moments, structure your workflow from the very start. I use five steps. Intake comes first—never skip clarifying intent, and force every request to spell out what actually matters (otherwise you’ll end up with impressively useless results). Next, have your agent draft a solution, but push it to reference every source and calculation used, not just spit out code. Then, a human interrogates those assumptions: What did the agent gloss over? What reasoning got compressed? With that, a second agent stress-tests the draft—flip the outputs, probe for edge cases, break the model if you can. Finally, a human signs off, owning the last layer of judgment, making sure every step and decision is logged.
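Here is one way the five steps could be enforced in code, so that nobody can skip a hop or hand a human step to an agent. Treat it as a minimal sketch: the stage names, the `WorkItem` class, and the actor labels are assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# The five steps from the text, each paired with the kind of actor that owns it.
STAGES: List[Tuple[str, str]] = [
    ("intake", "human"),
    ("draft", "agent"),
    ("interrogate_assumptions", "human"),
    ("stress_test", "agent"),
    ("sign_off", "human"),
]


@dataclass
class WorkItem:
    request: str
    log: List[dict] = field(default_factory=list)  # one entry per completed stage

    def next_stage(self) -> Tuple[str, str]:
        if len(self.log) >= len(STAGES):
            raise ValueError("workflow already complete")
        return STAGES[len(self.log)]

    def record(self, stage: str, actor_kind: str, actor: str, notes: str) -> None:
        """Log a completed stage, refusing skipped steps or the wrong kind of owner."""
        expected_stage, expected_kind = self.next_stage()
        if stage != expected_stage:
            raise ValueError(f"expected stage {expected_stage!r}, got {stage!r}")
        if actor_kind != expected_kind:
            raise ValueError(f"{stage!r} must be owned by a {expected_kind}")
        if not notes.strip():
            raise ValueError("every stage must log its reasoning")
        self.log.append({"stage": stage, "actor": actor, "notes": notes})

    @property
    def complete(self) -> bool:
        return len(self.log) == len(STAGES)


# Usage: trying to sign off before the assumptions were interrogated is rejected.
item = WorkItem(request="Add retry logic to the export job")
item.record("intake", "human", "lead", "Goal: no silent data loss; latency is secondary.")
item.record("draft", "agent", "drafter", "Proposed exponential backoff; sources listed.")
try:
    item.record("sign_off", "human", "lead", "Looks fine.")
except ValueError as err:
    print(err)
```

Because each stage must carry notes, the log doubles as the audit trail: what was decided, by whom, and why.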

This isn’t bureaucracy. It’s reliability. The friction at each hop slows you down just enough for mistakes to surface before they snowball. If you’re running reviews, bake in habits for explicit prompts and response histories—build meetings or async check-ins around cross-examination, not just status. Different personalities handle this differently. You’ll need direct engineers to prod and quiet ones to log what they noticed, but every voice should touch each checkpoint. Ritualized? Maybe. Effective? Absolutely.

When it’s time to review, ditch the one-paragraph summary. Require a reasoning-first packet: every claim backed with actual evidence, explicit assumptions noted, rejected alternatives listed, and a diff over previous decisions to make invisible logic visible. The next version of failure will be subtle, polished, and dangerously plausible, so you have to surface that reasoning to defend it.
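A reasoning-first packet can be as simple as a structured record plus a check that flags what is missing before anyone spends review time on it. The sketch below is illustrative; `ReasoningPacket`, its field names, and `review_gaps` are hypothetical and would need adapting to whatever your team actually tracks.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ReasoningPacket:
    claims: Dict[str, str]  # claim -> evidence backing it
    assumptions: List[str] = field(default_factory=list)
    rejected_alternatives: List[str] = field(default_factory=list)
    diff_from_previous: str = ""  # what changed versus the last decision


def review_gaps(packet: ReasoningPacket) -> List[str]:
    """List what is missing before a human reviewer should start reading."""
    gaps = [
        f"claim without evidence: {claim}"
        for claim, evidence in packet.claims.items()
        if not evidence.strip()
    ]
    if not packet.assumptions:
        gaps.append("no explicit assumptions")
    if not packet.rejected_alternatives:
        gaps.append("no rejected alternatives")
    if not packet.diff_from_previous.strip():
        gaps.append("no diff against previous decisions")
    return gaps


# Usage: a packet that looks polished but leaves one claim unsupported.
packet = ReasoningPacket(
    claims={
        "Caching cuts p95 latency": "load test results attached",
        "No invalidation issues": "",
    },
    assumptions=["Traffic mix matches last quarter"],
)
for gap in review_gaps(packet):
    print(gap)
```

A check like this cannot judge whether the evidence is any good. It only guarantees the reviewer has something concrete to interrogate.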

Let’s talk drift for a minute. Teams are like sourdough—you start clean, then unexpected ingredients wander in. Your workflows, your agent prompts, even your unstated assumptions start changing as people adapt. Like configuration sprawl, unattended systems wander off their tracks. Install change detectors and retune rituals before stray decisions set up shop. Once you actually see what’s drifting, you can act—retraining or adapting with new data to keep things consistent. Until these systems self-evolve safely, assume drift and monitor relentlessly.
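One lightweight change detector is to fingerprint every prompt and config at review time, then compare the live versions against that approved baseline. This is a sketch under assumptions: the `approved` and `live` dictionaries and their keys are made up for the example, and a real setup would load them from wherever your prompts and pipeline configs actually live.

```python
import hashlib
from typing import Dict, List


def fingerprint(text: str) -> str:
    """Stable hash of a prompt or config, so any edit shows up as a change."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def detect_drift(approved: Dict[str, str], live: Dict[str, str]) -> List[str]:
    """Compare live prompt/config text against fingerprints approved at the last review."""
    findings = []
    for name, text in sorted(live.items()):
        if name not in approved:
            findings.append(f"unreviewed: {name}")
        elif approved[name] != fingerprint(text):
            findings.append(f"changed: {name}")
    for name in sorted(set(approved) - set(live)):
        findings.append(f"missing: {name}")
    return findings


# Usage: one prompt was quietly edited, and one config never went through review.
approved = {"summarizer_prompt": fingerprint("Summarize the ticket history.")}
live = {
    "summarizer_prompt": "Summarize the ticket history and suggest priorities.",
    "docs_writer_config": "auto_publish: true",
}
print(detect_drift(approved, live))
```

The "unreviewed" case is the one that matches the leftover-config story earlier: something running in the pipeline that nobody ever approved.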

The last lever—and maybe the most powerful—is articulation. If your team stumbles when naming the criteria for a decision, automation will mirror that vagueness and inject it back into every output. Train the muscle: ask for explicit “what matters” lists in reviews, not just fuzzy priorities. I admit we’ve struggled here. What your team struggles to articulate, they’ll struggle to automate. You have to lead the cadence of clarity, not just hope the system figures it out. The habit pays off. Reasoned criteria turn into reasoned delegation, and both compound over time.

There’s no script for hybrid teams yet. Just emerging patterns and hard-won honesty about what keeps work governable, auditable, and safe. Get granular, add friction, and let reasoning lead the review. That’s the difference between scalable productivity and quietly compounding risk.


The Hard Parts: Leading Through Abstraction, Guarding Culture, and Managing the Trade-Offs

Let’s name the discomfort first. Leading in hybrid setups means you can’t keep every decision edge in view. There’s just too much happening inside abstractions, inside agent workflows, inside automated handoffs. Honestly, you won’t catch every subtle misstep, but you can build for observability. That means logs that actually matter, snapshots of agent reasoning (not just the what, but the why and how behind each move), and enough human checkpoints peppered in to catch the moments when things are veering off course. The fact is, abstraction forces us to lead differently. You aren’t steering every piece directly, but you are designing the system to be visible and traceable when it counts.

Here’s the trade-off you’re going to face, and there’s no soft-pedaling it. The friction up front feels slow. Deliberate barriers, extra review steps—they seem like velocity-killers. But those early pauses are what let you skip the costly rework and save your team from reputation risk or unseen cultural drift. Velocity comes back when errors stop compounding, not when you intentionally run faster and ignore what might break.

Culture and privacy need actual guardrails, not just trust. Limit data scopes with approved lists, stress-test agent prompts for potential leaks (red-team them yourself or delegate to someone blunt), and get explicit about norms: when it’s OK for agents to chime in during standups, what gets committed, and how agent work gets audited. You need audits you can stand behind. Not just for compliance, but for the reckoning when something odd slips into production.
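Limiting data scope with an approved list can be sketched as a filter that sits between your records and the agent, and that also reports what it held back so the audit has something to stand on. The field names and the `APPROVED_FIELDS` set here are hypothetical, chosen only to show the shape of the idea.

```python
from typing import Any, Dict, FrozenSet, List, Tuple

# Hypothetical approved list: the only fields an agent is allowed to see.
APPROVED_FIELDS: FrozenSet[str] = frozenset({"ticket_id", "title", "status"})


def scope_for_agent(record: Dict[str, Any]) -> Tuple[Dict[str, Any], List[str]]:
    """Return the record restricted to approved fields, plus the field names withheld."""
    allowed = {key: value for key, value in record.items() if key in APPROVED_FIELDS}
    withheld = sorted(key for key in record if key not in APPROVED_FIELDS)
    return allowed, withheld


# Usage: the customer's email never reaches the agent, and the audit log shows why.
ticket = {
    "ticket_id": 101,
    "title": "Export job drops rows",
    "status": "open",
    "customer_email": "person@example.com",
}
visible, withheld = scope_for_agent(ticket)
print(visible)
print("withheld:", withheld)
```

An allowlist fails closed: a new field added to the record stays hidden from the agent until someone deliberately approves it.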

Bottom line is this. Agents can help you scale and coordinate, but you set the system constraints, keep drift visible, and—most important—review for reasoning first. That’s how you build a hybrid process where decisions stand up under scrutiny, even long after they ship.

If I’m honest, I still don’t have a perfect way to keep every edge visible when abstraction removes half the signals I used to rely on. I keep wondering if the model will someday flag reasoning gaps as clearly as we do, but until then, I’m committed to designing guardrails and embracing the messiness where I have to. Some days I’m convinced we’re close. Other days—not so much.
