How to Get Actionable Feedback That Drives Real Change

The Difference One Specific Ask Makes

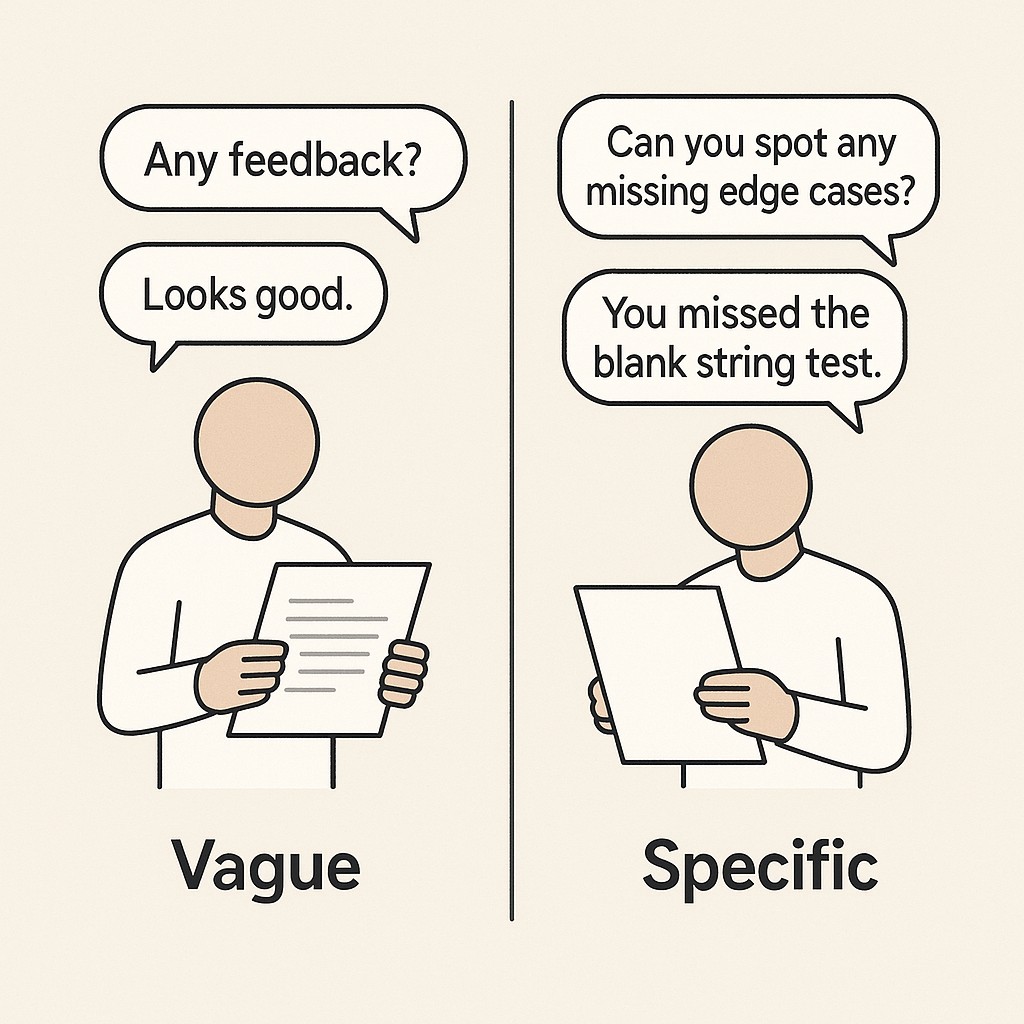

The last time I asked, “Any feedback?” on my code review, I got a pat on the back—literally just, “No, you’re good!” That felt reassuring for about five seconds, until I shipped the PR and found myself wondering what I actually missed. It changed the day I followed up with, “Can you focus your comments on edge cases in this PR?” That was the moment genuine, actionable feedback landed: a new test for an overlooked scenario.

If you’re wondering how to get actionable feedback, remember that vague requests are easy to hide behind. I’ve done it myself: fired off the generic ask and hoped people would volunteer a miracle. But when I shifted to asking, “What’s one thing I could improve in PR reviews?” I set the stage for a practical answer instead of room-temperature praise.

What helped most was narrowing the field. Someone pointed out, “Your comments cover the basics, but they’re broad. Next time, try focusing on edge cases.” That one suggestion changed my workflow. Instead of worrying whether I’d missed an obvious bug, I zeroed in: are there tricky inputs the code doesn’t handle? It’s not just about being specific—it’s about how you make feedback actionable by tying it to something you can actually observe or change. When feedback gets linked to an action you can see (like a better test, or a tighter comment), the ambiguity drains out. Suddenly, everyone knows exactly what “better” looks like; it’s in the code or it isn’t. And it turns out, framing cuts down back-and-forth, because people can act on what they see.

This is the heart of it. The form of your ask shapes the form of the answer. Vague asks lead to vague answers. Specific asks unlock specific improvements.

We’re halfway into this feedback series—day 6—and the next step is understanding exactly why specificity works, and how you can make it work even faster.

How to Get Actionable Feedback: How Specific, Action-Focused Feedback Works

Most praise you get from teammates is genuinely well intentioned, but it rarely helps you get useful feedback. The gap is obvious. People want to be supportive, but vague encouragement can’t guide you toward the next improvement. The intent is kind. The utility, pretty limited.

If you’re chasing better feedback, the central rule is simple: the question you ask shapes the response you’ll get. Let’s get concrete. Ask a broad, open-ended question and you’ll hear wildly different takes, sometimes nothing useful, sometimes contradictory advice. Wording shifts answers in surveys, too: in one example, when the stakes were made explicit, only 43% supported taking an action while 48% opposed it (Pew Research), which suggests that how specifically you ask changes the answer you get.

Layer in tailored cues, and people actually start recalling details you’d never get if you just asked for “thoughts” (Frontiers in Psychology). The trick is giving your teammate something exact and observable to respond to, like “Did this cover all the edge cases?”, not just, “Any feedback?” When you constrain the focus, you lower the mental load and sidestep the awkwardness—people know what to look at and you get answers you can actually use.

Here’s the difference you’ll feel. When you make a narrow, specific ask, you get clear, actionable steps—say, “Write one test for an unexpected input.” Toss a generic prompt out, and most responses drift, never landing on anything you can actually change.

Try this targeting move: whenever you request feedback, pick one area and one observable improvement. It could be “Suggest a more concise summary in this model card,” or “Identify one missing agenda item.” Ground it in the work at hand.

If you’re worried you’re asking too much, don’t be. Asking for a concrete, scoped improvement is actually a time saver, not just for you, but for your whole team. Trust me, a specific tweak now avoids hours of rework next week. I used to think it was extra work to be so precise, but every time I skipped it, I ended up paying later.

And honestly, sometimes you just don’t get an answer you can use. That’s the unresolved part for me. Even with all the precision and targeted asks, there are weeks when the feedback is just… meh. Still working out how to nudge it when the room goes quiet.

Framing Feedback for Real Improvement

Let’s break down exactly how to ask specific feedback questions that get results. Pick one narrow domain, such as PR reviews, onboarding docs, or architecture diagrams. Call out a specific behavior that matters, like “catching off-by-one errors in data checks” or “flagging confusing variable names.” Then ask for one tweak you can actually ship this week. The more grounded and time-bound your ask is, the clearer the response. Remember how “Any feedback?” got me nowhere earlier? A scoped ask puts you, or your teammate, in position to actually move the needle.

Here’s something almost no one does by default. Give the other person time. If you’ve just left a meeting, don’t call out in the hallway, “Anything I should change?” Let the dust settle. Say, “No rush, reply tomorrow.” This not only respects their focus, but you’ll get sharper, less canned input. I’ve learned that the best answers come the second day, not the second minute.

Walk through a PR example with me to see feedback framing techniques in action. The generic comment—“Looks good!”—isn’t wrong, but it’s nearly useless. Dial the ask to, “Can you point out one edge case this code might miss?” and suddenly you get something like, “Input with a blank string breaks on line 48.” That’s feedback I can act on.

You spot edge-case comments by looking for places the code assumes too much or tests too little. If you phrase the ask around “what weird input would crash this?” or “where would this trip up in prod?”, you direct the review to surface real risk. You also set up a natural follow-up: “I added a test for blank strings. Do you see another edge case?” Framing the ask this way cuts down the back-and-forth cycle, which makes reviews more dependable and less painful.
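To make the blank-string comment concrete, here is a minimal sketch of what acting on it might look like. The `normalize_tag` handler is hypothetical (it is not from any real PR) and the test uses plain assertions rather than a test framework. The point is only that the review comment maps to one observable change: a guard plus a test.

```python
def normalize_tag(raw: str) -> str:
    """Hypothetical handler: return the lowercased first word of `raw`."""
    words = raw.split()
    if not words:
        # Guard added in response to the review comment. Without it,
        # words[0] below would raise IndexError on blank input.
        raise ValueError("tag must not be blank")
    return words[0].lower()


def test_blank_string_is_rejected() -> None:
    """The edge case the reviewer flagged: empty or whitespace-only input."""
    for blank in ("", "   ", "\n\t"):
        try:
            normalize_tag(blank)
        except ValueError:
            continue  # expected: blank input is rejected explicitly
        raise AssertionError(f"expected ValueError for {blank!r}")


test_blank_string_is_rejected()
```

The follow-up ask then has something to point at: “I added `test_blank_string_is_rejected`. Do you see another edge case?”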

Quick tangent: once, years ago, I tried to shortcut a code review by pasting a block from Stack Overflow without checking edge cases. It worked for the main path. Then, weeks later, someone spotted my copy-paste move and pointed out that a blank input crashed prod. I remember the mix of embarrassment and relief—embarrassment that it slipped through, relief that someone had actually looked for the edge case. That stuck with me. Asking for one specific thing isn’t just process—it’s survival, or at least dignity.

And yes, normalize the social dynamic. “You’re doing great!” is always nice to hear—don’t dismiss it. Just pair it with, “Try one specific change this time?” That gentle nudge helps momentum, keeps improvement moving, and makes the ask feel normal, not demanding or awkward.

That’s the method. Stay specific, frame it around one actionable detail, and give people time. You’ll see real change, not just polite agreement.

Implementing Feedback: Closing the Loop for Real Progress

It’s uncomfortable to admit, but when feedback disappears into the void, people eventually stop offering it. I’ve seen this play out again and again—someone shares an idea or points out a fix, but no change is visible, no one circles back, and after a few cycles, suggestions dry up. The opposite happens when you respond with quick, visible follow-through; the dynamic flips.

Suddenly, teammates know you actually listen, and the quality of what they share goes up. Here’s why: visible, constructive follow-through supports psychological safety, which was reported to drop from 4.35 to 3.31 when people sense negative emotion instead (Nature). That difference shows up in the quality of the next round of comments, and honestly, in whether people bother at all.

I’ve landed on a pattern that works without a ton of ceremony. First, I summarize what I heard (“You flagged blank string inputs as risky”). Second, I state what I changed right away (“Pushed a commit—now the handler checks for blanks”). Third, I preview what’s next (“I’m checking user auth edge cases tomorrow, will update on that”). Here’s a PR review before: “Nice job.” Here’s after: “Caught the blank string bug—fixed in latest diff; next up is user auth. Thanks for flagging!”

If you want this to stick, pick one clear improvement per cycle, and don’t overwhelm yourself with a laundry list. Track it in a simple log: one row per week, one visible change tied to feedback, even if it’s just a bullet in a Slack thread. Invite your teammates to hold you accountable (a ping is enough): “Did I actually fix what you raised last week?” It keeps the loop closed and the bar high.
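If a Slack bullet feels too loose, the log can be a few lines of code. This is a rough sketch, not a prescribed tool: the `FeedbackEntry` schema, the names, and the dates are all made up for illustration. It records one row per week and makes it obvious which feedback has not yet produced a visible change.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class FeedbackEntry:
    """One row per week: what was raised and what visibly changed (hypothetical schema)."""
    week_of: date
    raised_by: str
    feedback: str
    change_made: str = ""  # stays empty until a visible change ships

    @property
    def closed(self) -> bool:
        return bool(self.change_made.strip())


def open_items(log: list[FeedbackEntry]) -> list[FeedbackEntry]:
    """Entries where feedback was given but no change has been shown yet."""
    return [entry for entry in log if not entry.closed]


def follow_up(entry: FeedbackEntry) -> str:
    """Draft the loop-closing message for an entry that has a change recorded."""
    if not entry.closed:
        raise ValueError("nothing shipped yet for this entry")
    return f"@{entry.raised_by} you flagged: {entry.feedback}. I changed: {entry.change_made}."


# Illustrative data only.
log = [
    FeedbackEntry(date(2024, 1, 8), "sam", "blank string inputs are risky",
                  "handler now checks for blanks"),
    FeedbackEntry(date(2024, 1, 15), "ana", "user auth edge cases untested"),
]
print(follow_up(log[0]))
print([entry.feedback for entry in open_items(log)])
```

Anything returned by `open_items` is a candidate for next week’s one improvement.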

Don’t wait for the quarterly retro. Each time you act and show what changed, you raise the odds next week’s feedback will be sharper, more honest, and actually useful. Next up, let’s break down the common objections and how to put this in play—especially when the process feels slow or requests seem demanding.

Shortcut the Hesitations—Checklist and Momentum

Let’s tackle the usual pushback around getting actionable engineering feedback. “Isn’t asking for one clear improvement more work?” When you’re moving fast—chasing deadlines or tweaking model code at midnight—pausing to phrase a tight, action-focused feedback request can feel like wasted seconds. But here’s the reality: spending a moment to frame the ask (“Can you find one edge case this could break?”) almost always cuts hours of rework later.

This works much the same way in model training. If you clarify up front what you’re optimizing for (say, one measurable fix in output handling), you skip those expensive, slow cycles of trial and error or endless review ping-pong. I’ve lost count of the times sloppy asks led to dragged-out PR reviews and models that needed post-hoc surgery. One sharp ask turns churn into shipped progress.

So if you want to get started right now, steal this checklist. It works equally well for code reviews, model builds, or written communication:

- Scope it. One area or file, not “anything.”

- Name one observable action (“Suggest a test for blank input,” not “Any thoughts?”).

- Give explicit permission to think (“Sleep on it, reply tomorrow.”)

- Commit to implementing one thing.

- Close the loop (“I changed X based on your note.”)

Put this next to your monitor as a sticky note, or drop it in Slack as a template for the team. Remember: you’re not asking them to overhaul your work or dump a laundry list. One observation, one change—that’s enough to move forward, and you’ll actually see improvement.
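If you would rather have the template as something you can paste from, here is one possible sketch. The `feedback_request` function and its wording are my own illustration, not a standard format. It simply enforces the first three checklist items: one scope, one observable action, and explicit time to think.

```python
def feedback_request(scope: str, action: str, reply_by: str = "tomorrow") -> str:
    """Build a one-ask feedback request (hypothetical helper for illustration)."""
    if not scope.strip() or not action.strip():
        # An empty scope or action is the "Any feedback?" trap in disguise.
        raise ValueError("both a scope and one observable action are required")
    return (
        f"Could you look at {scope.strip()} and {action.strip()}? "
        f"No rush, reply {reply_by}. "
        "I'll implement one thing and tell you what changed."
    )


print(feedback_request("the input handler in this PR",
                       "suggest one test for blank input"))
```

The last sentence of the message covers the remaining two items: committing to one change and closing the loop.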

Ready to try? Make one specific ask before the day ends. Tomorrow, ship the corresponding improvement and send a follow-up that names exactly what changed. “I added the blank input test you flagged.” That loop is what turns feedback into trust—and trust compounds, fast. Honestly, the only way through the hesitation and friction is to start small, refine as you go, and let everyone see the cycle working. If you do this once, you’ll see how much smoother the next review, sync, or model iteration runs—and you might just start enjoying the process.
