How to decouple AI architecture for flexible, safer scaling

How to decouple AI architecture for flexible, safer scaling

The Trap of Tightly Coupled AI Systems

I was on a call the other morning with a builder who’d hardwired their entire AI stack—models, agents, tasks, everything fused together. Try swapping one model? It meant tearing into code, tracing dependencies, praying nothing else cracked. I could feel their frustration.

I’ve been there. Despite years in software, the rookie mistake I made with AI was tight coupling—locking all the moving parts together because it felt efficient in the moment. It wasn’t.

How to decouple AI architecture starts with recognizing that treating your model, agent, and task logic as a single block makes every little change a risk. Upgrade the model, and suddenly your agent code trips over a new response format. Want to experiment? Good luck. You’ll stall out, knowing any tweak could break downstream logic you barely remember writing—and every improvement gets buried under “just one more fix” until you stop trying.

That’s when I told them: you should be able to flip that switch in under a minute. Say you want to swap GPT-4 with Gemini, or try a local model—fine. That decision should live in a config file, not your main logic. The stack doesn’t need a code surgery for basic tests. I walked them through a quick toggle. One place to change, push the config, restart, done. Suddenly, the pain was less about technical fear and more about, “Why didn’t I do this ages ago?”

Here’s the bit I wish someone had told me sooner. Flexibility is the new performance metric. How quickly you can swap, A/B test, and upgrade isn’t just a convenience. It’s what keeps your system alive when the landscape shifts tomorrow.

Layered Architecture: Making AI Systems Flexible by Design

Let’s put the guiding principle front and center. Flexible AI system design depends on decoupling. AI systems are no different. Ask any engineer who’s spent years with brittle setups. Loose coupling and high cohesion are the backbone of well-decoupled architecture. It's a time-tested approach for stable, flexible layer boundaries Computer Society. I keep seeing teams chase speed but forget that good boundaries make every change safer, faster, and easier to reason about. If something feels tangled, it’s almost always too tightly coupled.

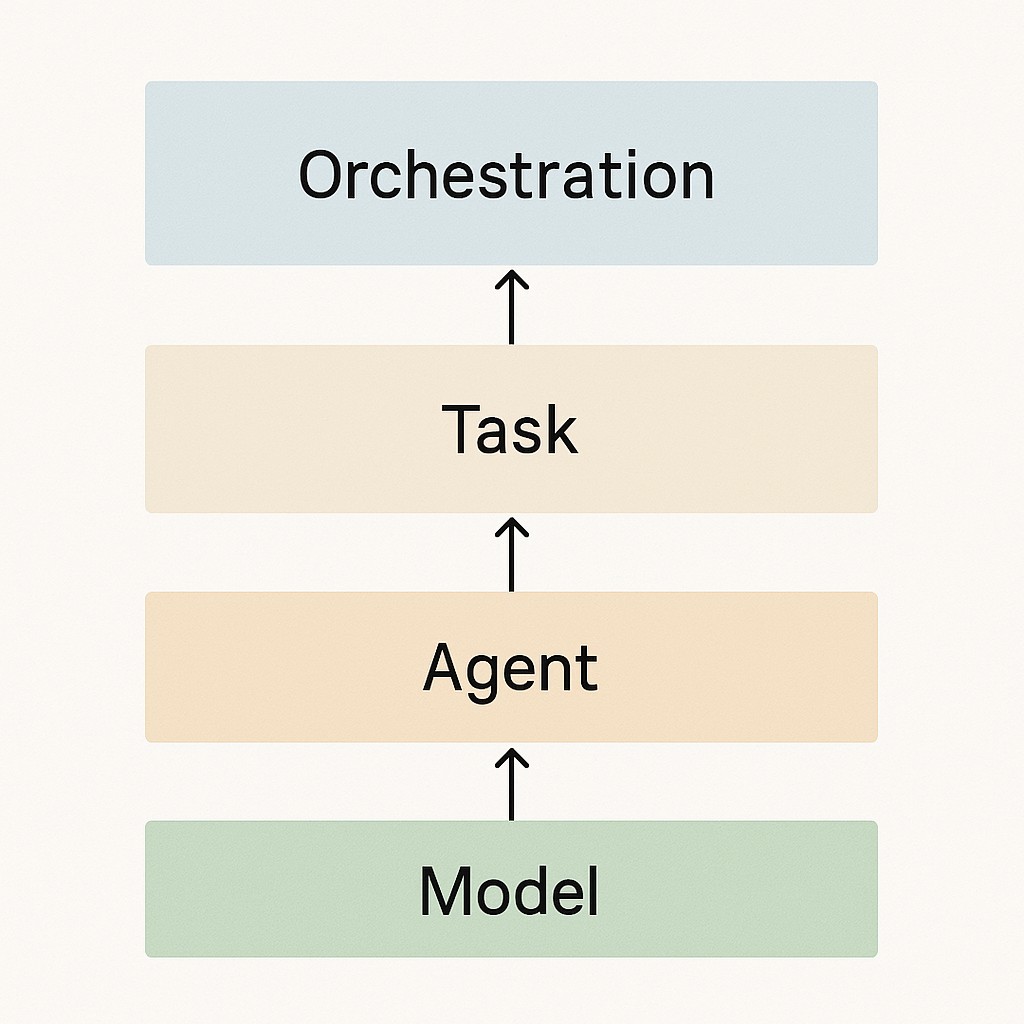

The first layer to nail down is your model. This is your capability provider—the engine behind every task. Model-agnostic AI design means that whether you’re plugging in Claude, Gemini, or GPT-4, what matters is a unified interface. You decide which model powers the system with a single setting, not a full rewrite. Model swaps shouldn’t touch business logic. That’s the baseline for flexibility.

Now, agents. Here’s where most setups go sideways. Agents end up fused with models, carrying extra responsibility. Agents hold tools, memory, and tone. They decide how to act, not what to do. Separate your agent’s behavior—its use of tools, handling of internal state, policy on responses—from the actual tasks it’s assigned. Once you split these responsibilities, you stop rewriting core agent logic every time you introduce a new prompt or want a different vibe.

Tasks are their own layer—crisp, with fixed input and output shapes, and evaluation rules that stay static even when pipelines evolve. Think: summarize, enrich, classify, generate. The point is to anchor your system to well-defined contracts, not loose intentions. When tasks stay unchanged, it’s trivial to swap models or agents without a QA scavenger hunt.

In a modular AI architecture, orchestration is what holds it all together. Flows, routing, fallbacks, and those precious A/B harnesses—this layer strings the components together and manages decisions on the fly. Visual builders like Amazon Bedrock Flows link prompts, agents, guardrails, and knowledge bases into modular AI flows, which turns orchestration into a clear, separate layer AWS Bedrock.

Honestly, when I first started layering interfaces and separating routing, I worried the overhead would slow me down. It ended up speeding everything else up. Setting up orchestration might feel like extra work, but it’s a compound investment. Every new experiment, fallback, or upgrade gets easier, not harder. That’s leverage you want in your stack.

There’s a thing I still wrestle with. Even now, I’ll sometimes catch myself slipping layers back together “for speed.” It never holds up under stress.

Design Patterns for Swappable AI Components

The mechanics here hinge on a few simple but powerful patterns. First, standardize how your AI models talk to the rest of your stack—force each model to speak in the same language, with clear input and output formats, so swapping one for another doesn’t break anything else. One way to do this is by locking your output format with JSON Schema. Every AI model responds in the same structure, making it painless to swap engines or build adapters (Microsoft DevBlogs). If a new model handles things a little differently, you build a quick adapter that maps its quirks to your common schema. Round it out with a registry keyed by capability so every model (summarizer, coder, classifier) is discoverable and replaceable.

But don’t stop at structure. You want your AI orchestration layers to be smart about routing and cost. That means adding metadata contracts to each model—think price per token, expected latency, throughput, and what kind of reasoning it supports. With those in place, you can let your flow orchestration pick the right engine on the fly. It’s like giving your system a cheat sheet to optimize for cost, speed, or reasoning depth without you micromanaging every call.

Now, let’s make it tangible. Say you’ve got Agent A running Task Y, powered by Model X. You want to see if Model Z improves outcomes. You swap Model X for Z right in your config—no code changes whatsoever. The agent’s logic and the task definition stay stable; all you’ve done is flip the engine. In seconds, you’re watching Model Z tackle the same instructions with its own flavor of reasoning. Sometimes the shift is dramatic. Model X might write bullet points, while Model Z delivers a narrative or catches subtle edge cases. That instant feedback loop is the point—you can experiment and reason about differences with no fear of breaking anything.

If you’ve only experienced the kind of model swapping that takes all afternoon, this will feel like cheating.

There’s a real pleasure when swapping out components feels effortless—like switching guitar amps or snapping in LEGO bricks. Same riff, new tone, and you didn’t waste time rewiring the whole rig. I love the moment you hear the sound change without breaking your flow; it’s the sort of modularity that makes tinkering fun again.

Let’s stretch that out a bit. With your registry and schema in place, A/B testing becomes easy. You can run GPT-4.1 next to GPT-5 and GPT-5-nano, capture response differences, and decide which model is actually right for your use case. No five-step MacGyver routine—just toggle, run, compare.

If you’re implementing this, use feature flags, environment variables, or even a UI selector so swapping engines is really under a minute—even in production. You don’t want a dev cycle every time curiosity strikes. The stack should flex as easily as you do. This is how you compound productivity and keep change from ever becoming risky.

Let me digress for a second. The worst swap I ever did was on a Friday afternoon, just before a team demo. I thought the output format “looked close enough.” Turned out, a tiny change in one field crashed reporting for every region west of Berlin. I spent two hours trying to patch it before realizing the agent was also using legacy code I’d totally forgotten about. It was a mess. That headache would have vanished if I’d stuck to strict layer contracts—and, lesson learned, never swap anything right before a demo.

Running Experiments Without Touching Core Code

Start with what matters. Build your A/B harness so you can A/B test AI models for reasoning quality, cost, and latency—without wading into the guts of your stack. Design the experiment around what you want to learn and set up the interface so swapping a model, agent, or config is just changing a setting. When the harness is reusable, you’re five minutes from testing a new engine or prompt, not five hours. I always tell folks to measure what matters most, and make it easy to test those metrics again as soon as a new candidate appears.

If you’re rolling out a new configuration, shadow and canary deployments are your best friends. Route part of the traffic to the new model while the majority keeps using the original setup. If something goes wrong, or the metrics don’t look right, roll back instantly. No drama, no scramble to fix broken routing.

Keep it simple. Persist your metrics—reasoning, cost, latency—right next to your outputs. This way, when orchestration picks winners or rotates in a new engine, it’s working with real data and you’re not stuck refactoring pipelines. Store everything side by side; when it’s time to swap engines, it’s truly instant.

Look, I get the doubts. Building these interfaces does mean up-front work, and, yes, it feels like you’re slowing down at first. Six months ago I was convinced “extra layers” meant less speed. I was wrong. That initial investment pays off every time you swap a model, run a new experiment, or scale up features. You’ll spend less time fighting regressions and more time improving the product. Change risk drops, and velocity compounds. Interfaces aren’t overhead, they’re leverage.

How to Decouple AI Architecture: Implementing Decoupling in Your AI Stack

To practice how to decouple AI architecture, pick one task you already own—maybe it’s classification, summarization, or something daily. This week, set aside time to define its contract: what goes in, what comes out, what success looks like. Then split out the model, agent logic, and orchestration behind it. You’ll get insights every day, and see real friction points faster than you expect.

Now run the anti-pattern check. Scan for places where model calls hide inside your task or agent code. If “call Gemini” shows up inside a function labeled “score intent,” pause and route that through an adapter. Any tight coupling here will bite later, so pull those boundaries apart now.

Here’s the upside. Suddenly, swapping models is just picking from a list—no anxiety, no code rabbit hole. You want to upgrade or try a new algorithm? The rest of the system stays steady. Downstream logic doesn’t flinch. Adding a new feature feels like you’re back on that morning consulting call, except this time, you can test, swap, and scale almost immediately. Flexibility is the new stability.

While you focus on decoupling your stack, use our app to generate AI-powered drafts, compare tones, and iterate fast, so content keeps up without extra engineering.

Shift your mindset. Treat flexibility as one of your team's top performance metrics. Build a system, not a bottleneck. The speed and safety you gain isn’t just technical—it changes how fast you learn, ship, and adapt.

I still don’t have the perfect answer for when to refactor a brittle module versus scrap it completely. There’s no clear line. Some legacy code hangs around longer than it should. That tension seems part of the job.

The big win is this. The more you decouple, the more you get to choose what changes and when. And sometimes, that’s all the control you need.

Enjoyed this post? For more insights on engineering leadership, mindful productivity, and navigating the modern workday, follow me on LinkedIn to stay inspired and join the conversation.