LLM function calling best practices: the strategist-toolbox shift

The Mental Shift That Made Function Calling Click

When I first started building with AI, “function calling” lived in the mental junk drawer labeled “advanced stuff, maybe later.” Honestly, I just assumed I could ship a working tool without ever touching it.

Six months ago, I thought this whole area was deep LLM magic out of my league, so I avoided it. Then someone reframed it in a way that finally landed for me: it’s just giving agents tools.

Here’s why that matters. Some teams treat LLMs like all-knowing magicians and push everything (retrieval, logic, validation, output) through prompt wrangling alone. When they do, the whole system slows down or starts making weird mistakes. I made the model write, fetch, validate, and decide in one go, and it seemed clever until real users hit the edges. That’s when the brittle parts began to break: hallucinated data, silent failures, and sluggish responses that left people wondering if anything had happened at all.

Part of the trouble was the phrase “function calling” itself. It made me picture fancy LLMs and AI-only voodoo, like some secret dance between cutting-edge models reserved for research labs, not for shipping features. In reality, the magic is a lot more mundane, and, as it turns out, way more useful.

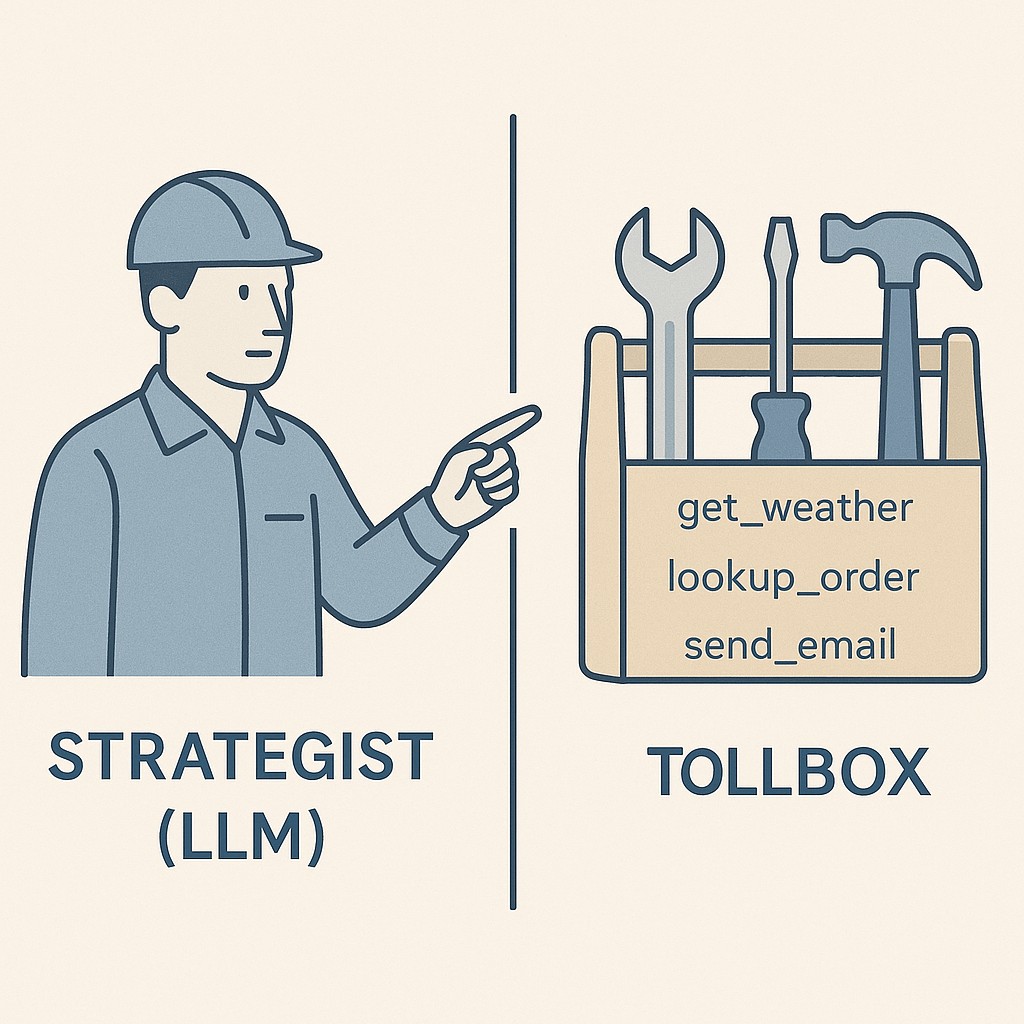

Here’s the operating principle that’s stuck with me since. The LLM is your strategist, not your worker bee. It decides, coordinates, and orchestrates. But when it comes time to actually get something done—send an email, fetch a record, crunch a calculation—you hand that off to a deterministic tool, a function that does exactly what you want every time. It’s like moving from “maybe the model will figure this out” to “here’s the toolkit, pick what you need.” That separation is what makes your system reliable and clears the path from proof-of-concept chaos to something people actually count on.
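Here is a minimal Python sketch that makes the strategist/worker split concrete. It assumes the model has already returned a tool call as JSON shaped like `{"name": ..., "arguments": {...}}`. That shape is hypothetical, since every provider formats tool calls a little differently. The `send_email` and `add_numbers` tools are hypothetical too. The model only names a tool and its inputs, and plain deterministic code does the work.

```python
import json
from typing import Callable


def send_email(to: str, subject: str) -> dict:
    # Hypothetical stub: a real version would call your mail provider here.
    return {"status": "queued", "to": to, "subject": subject}


def add_numbers(a: float, b: float) -> dict:
    # Deterministic: the same inputs always give the same output.
    return {"result": a + b}


# The toolbox: the only capabilities the model is allowed to request.
TOOLS: dict[str, Callable[..., dict]] = {
    "send_email": send_email,
    "add_numbers": add_numbers,
}


def execute_tool_call(raw_call: str) -> dict:
    """Run a tool call the model proposed, encoded as JSON (assumed shape)."""
    call = json.loads(raw_call)
    name = call["name"]
    if name not in TOOLS:
        return {"error": f"unknown tool: {name}"}
    return TOOLS[name](**call.get("arguments", {}))


# The strategist (the LLM) decides what to do; its output is hard-coded here.
model_output = '{"name": "add_numbers", "arguments": {"a": 2, "b": 3}}'
print(execute_tool_call(model_output))  # {'result': 5}
```

Any tool missing from the toolbox is refused rather than improvised. That refusal accounts for most of the reliability gain.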

Agents, Demystified: The Strategist and the Toolbox

An agent isn’t some mysterious AI overlord. At its core, it’s just a software system where the large language model (LLM) thinks about what’s needed, then hands tasks off to reliable tools that actually do the work. Strip away the hype and you get a clear split. The agent plans, the tools execute.

The best analogy I’ve found is a construction project. The LLM is like your foreman, planning out the tasks, tracking progress, making decisions about what should happen next. But if you expect the planner to swing the hammer or measure the lumber, you’ll be disappointed. I kept falling into the trap of letting the planner do everything, only to watch it fumble the details that a simple tool could handle perfectly.

Take something as basic as web search. The LLM figures out what information is needed and when. The actual search, hitting Google or Bing and interpreting the API results, should be done by a clear, purpose-built function. No more hoping the model gets the right answer from scratch.

It’s all about LLM tool orchestration. The LLM’s real job is to decide when and how to use the tools you give it.

To see this in action, consider checking the weather. Say you ask, “What’s the weather in Paris tomorrow?” The agent first spots what’s needed: a city and a date. It won’t call the weather function until it’s sure it has both pieces. If you just say “the weather,” it’ll ask you to clarify the location or time first. Only when all required parameters are in hand does the agent trigger the actual API call.

You end up with crisp, structured inputs and reliable outputs: Paris, tomorrow, weather data, done. That separation between planning and execution calmed my fear of “magic.” The model holds off on making a function call until it has collected all required parameters, which forces it to ask for any missing details first (see Vellum tutorial). The result is simple, predictable, and easy to trust.
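Here is a rough sketch of that weather tool declared in JSON Schema style, plus an application-side check. The `get_weather` envelope (`name`, `description`, `parameters`) is illustrative rather than any specific provider’s format. The `next_step` check is an extra guard I’m assuming you’d want alongside the model’s own ask-first behavior, not a replacement for it.

```python
from typing import Any

# Illustrative tool definition; providers wrap this in slightly different envelopes.
GET_WEATHER = {
    "name": "get_weather",
    "description": "Get the weather forecast for a city on a given date.",
    "parameters": {
        "type": "object",
        "properties": {
            "city": {"type": "string", "description": "City name, e.g. Paris"},
            "date": {"type": "string", "description": "ISO date, e.g. 2024-06-01"},
        },
        "required": ["city", "date"],
    },
}


def missing_params(tool: dict, args: dict[str, Any]) -> list[str]:
    """Return the required parameters that are absent or empty."""
    return [p for p in tool["parameters"]["required"] if not args.get(p)]


def next_step(tool: dict, args: dict[str, Any]) -> str:
    """Ask for missing details first; only call the tool once all are present."""
    missing = missing_params(tool, args)
    if missing:
        return "ASK: please provide " + " and ".join(missing)
    return f"CALL {tool['name']}({args})"


print(next_step(GET_WEATHER, {}))  # ASK: please provide city and date
print(next_step(GET_WEATHER, {"city": "Paris", "date": "2024-06-01"}))
```

Resolving “tomorrow” into a concrete date is left to the caller in this sketch.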

Identifying, Designing, and Wiring High-Value Functions: LLM Function Calling Best Practices

Start simple. Instead of brainstorming a laundry list of twenty possible abilities, look at your users’ real workflow and ask, “Which two functions would change their life right now?” I stopped mapping out edge case after edge case and just shipped the two tools users hit every hour. Most of the magic starts there.

Function schema design gets clearer and less error-prone when you focus on the essentials. Pick a name that conveys intent and specify precise inputs and outputs. Then, and this is where I slipped up early, define what “deterministic” and “idempotent” mean for this tool. I learned the hard way that vague schemas turn tool calls into a guessing game, especially as things grow. Idempotence gets easier with idempotency keys. Have each client generate a large, unguessable value (such as a random UUID) per operation. A retry then reuses the same key, while different clients’ keys basically never collide. Tight schemas stopped weird edge cases from popping up.
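Here is a minimal sketch of the idempotency-key idea. It assumes an in-memory store and a hypothetical `send_email` side effect. The client generates a random UUID (version 4, which carries 122 random bits) once per operation and reuses it on retries. A retried call then replays the stored result instead of sending a second email.

```python
import uuid
from typing import Callable


class IdempotentTool:
    """Run a side-effecting function at most once per idempotency key.

    Sketch only: results live in an in-memory dict. A real service would keep
    them in shared storage with an expiry and check that a reused key comes
    with the same arguments.
    """

    def __init__(self, fn: Callable[..., dict]):
        self.fn = fn
        self._results: dict[str, dict] = {}

    def __call__(self, idempotency_key: str, **kwargs) -> dict:
        if idempotency_key in self._results:
            return self._results[idempotency_key]  # replay; no second side effect
        result = self.fn(**kwargs)
        self._results[idempotency_key] = result
        return result


sent: list[str] = []


def send_email(to: str) -> dict:
    # Hypothetical side effect: records the send instead of emailing anyone.
    sent.append(to)
    return {"status": "sent", "to": to}


send_once = IdempotentTool(send_email)
key = str(uuid.uuid4())  # generated once per operation by the client
send_once(key, to="ana@example.com")
send_once(key, to="ana@example.com")  # e.g. a retry after a network timeout
print(len(sent))  # 1
```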

Wiring up the tools isn’t rocket science. You can call a Python library, invoke a shell script, connect to a SaaS platform’s API, or even wrap flaky internal code behind a simple function. Almost anything can be made into a tool if you put in some guardrails.
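As one example of wrapping existing code, here is a sketch that exposes a command-line script as a tool. `run_script_tool` is a hypothetical helper built on the standard library’s `subprocess.run`. The demo uses the running Python interpreter as a stand-in script so it works anywhere.

```python
import subprocess
import sys


def run_script_tool(args: list[str], timeout_s: float = 10.0) -> dict:
    """Expose a command-line script as a tool with a structured result.

    Passing an argument list (no shell) avoids shell injection, and the
    timeout stops a hung script from stalling the agent.
    """
    try:
        proc = subprocess.run(args, capture_output=True, text=True, timeout=timeout_s)
    except subprocess.TimeoutExpired:
        return {"ok": False, "error": f"timed out after {timeout_s}s"}
    except FileNotFoundError as exc:
        return {"ok": False, "error": str(exc)}
    return {
        "ok": proc.returncode == 0,
        "stdout": proc.stdout.strip(),
        "stderr": proc.stderr.strip(),
    }


# Stand-in "script": the current Python interpreter printing one line.
print(run_script_tool([sys.executable, "-c", "print('report ready')"]))
```

The model never sees a raw exit code or traceback, only a small dictionary it can reason about.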

Concrete example: searchDocs queries a database to fetch relevant documents, and GetWeather grabs weather data by city and date. Wire them in so the LLM can call each one with exactly the parameters it needs, nothing more and nothing less. Start with instrumentation right away: log every call, response, and error. Add basic guardrails like rate limits and timeouts to catch runaway tools or accidental infinite loops. If you’re worried the upfront time will slow your project, I’ve been there. It turns out that setting clear boundaries and tracing each function actually sped up every future feature. For one launch, instrumenting just those two calls made every bug visible and every fix faster.
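One way to get that instrumentation from day one is a small wrapper around every tool. This sketch relies on Python’s standard `logging` module and a thread pool for the timeout. The names `instrumented` and `search_docs` (a stand-in for searchDocs) are hypothetical. The stub searches a hard-coded list instead of a database.

```python
import logging
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout
from typing import Callable

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("tools")

# Shared worker pool so each call can be waited on with a deadline.
_pool = ThreadPoolExecutor(max_workers=4)


def instrumented(fn: Callable[..., dict], timeout_s: float = 5.0) -> Callable[..., dict]:
    """Wrap a tool so every call logs its args, outcome, and latency.

    On timeout the caller stops waiting, but the worker thread is not killed;
    truly stuck work also needs an upstream or process-level timeout.
    """

    def wrapper(**kwargs) -> dict:
        start = time.perf_counter()
        future = _pool.submit(fn, **kwargs)
        try:
            result = future.result(timeout=timeout_s)
            log.info("tool=%s args=%s result=%s", fn.__name__, kwargs, result)
            return result
        except FutureTimeout:
            log.error("tool=%s args=%s timed out after %ss", fn.__name__, kwargs, timeout_s)
            return {"error": "timeout"}
        except Exception as exc:  # the tool itself raised
            log.exception("tool=%s args=%s failed", fn.__name__, kwargs)
            return {"error": str(exc)}
        finally:
            elapsed_ms = (time.perf_counter() - start) * 1000
            log.info("tool=%s latency_ms=%.1f", fn.__name__, elapsed_ms)

    return wrapper


def search_docs(query: str) -> dict:
    # Hypothetical stand-in for searchDocs; a real version would query a database.
    docs = ["refund policy", "shipping times", "warranty terms"]
    return {"matches": [d for d in docs if query.lower() in d]}


safe_search = instrumented(search_docs, timeout_s=2.0)
print(safe_search(query="policy"))  # {'matches': ['refund policy']}
```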

I still slip into “build everything” mode sometimes. One weekend I turned a toy app into a wiring diagram with five different tools, then realized that for learning the system, one tool was plenty for day one. Oddly, I still catch myself doodling out big architectures in my notes. Sometimes I look back and wonder if that urge will ever fully go away.

Practical Guardrails: Turning Fears into Confidence

Tooling up front feels like one more thing to slow you down, but it pays off fast. Spending the extra hour on clear functions and boundaries is like writing tests. It drags at first, then saves you every single day when systems get weird or users push the edges.

If you’re worried about tools getting misused, some basic patterns go a long way. Allowlists, proper input validation, dry runs, and scoped permissions all keep your system tight. Without strict controls, plugins are open to exploitation, including risks as serious as remote code execution (see Redscan – OWASP LLM). I block the model from touching anything I wouldn’t hand to a junior engineer, and suddenly those nagging doubts drop off.
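Here is a rough sketch of those four patterns combined in one gate that runs before any tool executes. The tool names, the `orders:read`/`orders:write` scopes, and the ID format (`ORD-` followed by six digits) are all made up for illustration. Swap in your own.

```python
import re

# Only these tools may run, each tied to the permission scope it requires.
ALLOWED_TOOLS = {
    "lookup_order": {"scope": "orders:read"},
    "refund_order": {"scope": "orders:write"},
}
ORDER_ID = re.compile(r"ORD-[0-9]{6}")  # hypothetical ID format


class ToolRejected(Exception):
    """Raised when a proposed tool call fails a guardrail check."""


def guard(name: str, args: dict, granted_scopes: set[str], dry_run: bool = False) -> dict:
    """Check allowlist, scope, and inputs before any tool executes."""
    if name not in ALLOWED_TOOLS:
        raise ToolRejected(f"tool not allowlisted: {name}")
    scope = ALLOWED_TOOLS[name]["scope"]
    if scope not in granted_scopes:
        raise ToolRejected(f"missing scope {scope} for {name}")
    # Both tools in this sketch take a single order_id argument.
    order_id = str(args.get("order_id", ""))
    if not ORDER_ID.fullmatch(order_id):
        raise ToolRejected(f"invalid order_id: {order_id!r}")
    if dry_run:
        # Report what would happen without doing it.
        return {"dry_run": True, "would_call": name, "args": args}
    # A real implementation would execute the tool here.
    return {"dry_run": False, "called": name, "args": args}


agent_scopes = {"orders:read"}  # read-only, like a junior engineer's access
print(guard("lookup_order", {"order_id": "ORD-123456"}, agent_scopes))
try:
    guard("refund_order", {"order_id": "ORD-123456"}, agent_scopes)
except ToolRejected as exc:
    print("rejected:", exc)
```

Because the agent holds only `orders:read`, it can look orders up, but a refund request is rejected before any code runs.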

APIs can get expensive and noisy fast if left unchecked. Add rate limits and quotas, make use of caching, and debounce calls if needed. I wish I’d known how quickly a careless prompt could rack up calls. These simple patterns keep costs obvious and waste low.
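Here is a sketch of two of those patterns: a token-bucket rate limiter and a small time-to-live cache. Both sit in front of a hypothetical paid exchange-rate API, and `call_rate_api` is a stub. The cache is checked first, so repeated questions neither consume rate-limit budget nor hit the API.

```python
import time


class TokenBucket:
    """Allow about `rate` calls per second, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.last = time.monotonic()

    def allow(self) -> bool:
        now = time.monotonic()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


api_calls = 0


def call_rate_api(currency: str) -> float:
    # Hypothetical paid API; counts calls so the savings are visible.
    global api_calls
    api_calls += 1
    return {"EUR": 0.9, "GBP": 0.8}.get(currency, 1.0)


CACHE_TTL_S = 300.0
_cache: dict[str, tuple[float, float]] = {}  # currency -> (rate, stored_at)
bucket = TokenBucket(rate=1.0, capacity=3)


def get_rate(currency: str) -> dict:
    hit = _cache.get(currency)
    if hit is not None and time.monotonic() - hit[1] < CACHE_TTL_S:
        return {"rate": hit[0], "cached": True}  # free: no API call, no token
    if not bucket.allow():
        return {"error": "rate limit exceeded, try again shortly"}
    value = call_rate_api(currency)
    _cache[currency] = (value, time.monotonic())
    return {"rate": value, "cached": False}


results = [get_rate(c) for c in ["EUR", "EUR", "GBP", "USD", "JPY"]]
for r in results:
    print(r)
print("API calls:", api_calls)
```

The second EUR lookup is served from cache. The fifth request exceeds the burst of three and gets a polite refusal instead of another paid call.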

The contract between prompt and tool has to be crisp. Take a minute to describe each function, toss in worked examples, and spell out that the model’s job is “decide, then call.” I literally wrote that right in the system prompt to keep speculative tool spam from filling up logs and draining the budget.
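Here is a sketch of how that contract might be assembled from the tool definitions themselves, so the descriptions and worked examples never drift from the code. The prompt wording and the `lookup_order`/`search_faq` tools are hypothetical. Only the “decide, then call” instruction comes from this post.

```python
TOOL_DOCS = [
    {
        "name": "lookup_order",
        "description": "Fetch the current status of one order by its ID.",
        "example": 'User: "Where is order ORD-123456?" -> lookup_order(order_id="ORD-123456")',
    },
    {
        "name": "search_faq",
        "description": "Search help articles by keyword.",
        "example": 'User: "How do refunds work?" -> search_faq(query="refunds")',
    },
]


def build_system_prompt(tools: list[dict]) -> str:
    """Assemble the system prompt from tool metadata, one entry per tool."""
    lines = [
        "Your job is to decide, then call.",
        "Decide first whether any tool is needed. Call a tool only when every "
        "required input is known; otherwise ask the user a clarifying question.",
        "Never call tools speculatively.",
        "",
        "Available tools:",
    ]
    for tool in tools:
        lines.append(f"- {tool['name']}: {tool['description']}")
        lines.append(f"  Example: {tool['example']}")
    return "\n".join(lines)


print(build_system_prompt(TOOL_DOCS))
```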

Visibility matters more than you expect. Log every tool decision, trace latency, track errors, and have fallback paths ready so the user experience stays resilient. The first trace I added revealed a hidden retry loop. Fixing it halved the cost overnight. Once you can see each call and what triggered it, debugging goes from wild goose chase to actual progress.
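Here is a minimal sketch of a fallback path with a trace. It assumes a hypothetical live order-status API that is failing, plus a cached copy to fall back on. Each attempt is appended to `trace` with its path, outcome, and latency. That kind of record is what makes something like a hidden retry loop visible.

```python
import time
from typing import Callable

trace: list[dict] = []  # one entry per attempt; ship these to your tracing backend


def call_with_fallback(
    name: str, primary: Callable[[], dict], fallback: Callable[[], dict]
) -> dict:
    """Try the primary path, then the fallback; record every attempt in `trace`."""
    for path, fn in (("primary", primary), ("fallback", fallback)):
        start = time.perf_counter()
        entry = {"tool": name, "path": path}
        try:
            result = fn()
            entry["ok"] = True
            return result
        except Exception as exc:
            entry["ok"] = False
            entry["error"] = str(exc)
        finally:
            entry["ms"] = round((time.perf_counter() - start) * 1000, 2)
            trace.append(entry)
    # Both paths failed: degrade gracefully instead of surfacing a stack trace.
    return {"error": "service unavailable, please try again later"}


def live_status() -> dict:
    # Hypothetical upstream API that is currently failing.
    raise ConnectionError("upstream 503")


def cached_status() -> dict:
    # Hypothetical cached copy: possibly stale, but better than nothing.
    return {"status": "shipped", "stale": True}


result = call_with_fallback("order_status", live_status, cached_status)
print(result)
for entry in trace:
    print(entry)
```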

If you build these guardrails in early, the system starts to feel less like magic and more like something you can trust. Anyone stuck at the “is this safe, is this worth it?” stage—I was there too. The mindshift isn’t about more complexity. It’s just picking confidence over confusion, and that payoff lands fast.

Rollout: Start Small, Expand with Usage

What changes the game is pairing clear tools with your model and letting the model decide when to use each one. The real power isn’t in how smart the LLM appears. It’s in the way you set up dependable functions and then trust the agent to orchestrate them. Looking back, once I started seeing the agent as a strategist rather than the worker, the confusion melted away. The mindshift isn’t “teach the model everything.” It’s “enumerate capabilities and let the AI pick the right tool for each job.”

Here’s how you actually roll it out. Pick the one or two functions that matter most (like “lookup user” or “fetch order status”), wire up tight schemas for inputs, add guardrails, and instrument usage from day one. You don’t need a sprawling suite of plugins—just the essentials. Start there, and you’ll see where to add the third function after real users hit the first two. Usage tells you what’s missing. The system grows by watching how it’s used, not by trying to predict every need.
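To let usage tell you what’s missing, two counters can be enough. This sketch assumes each agent turn is summarized as a dict. The dict names the tool that ran, or, when no tool fit, carries an `intent` label you attach yourself, by manual review or a classifier (both assumptions here). The tool names follow the examples in the paragraph above.

```python
from collections import Counter

tool_usage: Counter[str] = Counter()  # how often each tool actually ran
unhandled: Counter[str] = Counter()   # what users wanted when no tool fit


def record(turn: dict) -> None:
    """Tally one agent turn: the tool that ran, or the unmet intent."""
    if turn.get("tool"):
        tool_usage[turn["tool"]] += 1
    else:
        unhandled[turn.get("intent", "unknown")] += 1


turns = [
    {"tool": "lookup_user"},
    {"tool": "fetch_order_status"},
    {"tool": "fetch_order_status"},
    {"tool": None, "intent": "change shipping address"},
    {"tool": None, "intent": "change shipping address"},
    {"tool": None, "intent": "cancel order"},
]
for turn in turns:
    record(turn)

print(tool_usage.most_common())  # [('fetch_order_status', 2), ('lookup_user', 1)]
print(unhandled.most_common(1))  # [('change shipping address', 2)]
```

The most common unhandled intent is a reasonable candidate for the third function.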

This clicked for me building a support app. I gave the model three tools: FAQ search, order lookup, escalation. Instead of forcing the model to guess customer intent for every ticket, it started asking the right clarifying questions, then calling the correct function. My stress dropped once the LLM stopped guessing and the tools started answering. Adding usage instrumentation made it obvious where to improve—the model orchestrated, the tools executed, and support responses turned sharp and reliable.


Try wiring up one tool today. You’ll feel the shift the moment orchestration replaces guesswork.
