Prioritize Outcomes Over Features to Build Durable Trust

When Shipping Isn’t Enough

When I first stepped into engineering leadership, I wanted things to be straightforward: ship exactly what was asked, and do it well. Clean, simple, satisfying. Six months ago I would’ve bet on that recipe every time, before I learned that real success comes when you prioritize outcomes over features. We launched on schedule, hitting every requirement in the spec.

But no one was happy.

One stakeholder had expected something entirely different. They’d read the problem, heard our plan, but their idea of X never showed up in the deliverable. If you’ve seen that look in someone’s eyes, you know what I mean.

Then there was the platform itself. It turned out our architecture wasn’t built for Y, at least not without more retrofitting than we’d scoped. Suddenly, the thing we’d just delivered was pushing up against walls no one mentioned before.

Leadership? They’d been waiting for Z. When the dust settled, you could feel their disappointment. The feature worked, but what they actually wanted was missing.

Diagnosing the Gap: From Requests to Real Value—Prioritize Outcomes Over Features

Here’s the core mistake: treating a request as if it’s the whole plan for value. Intent doesn’t always equal outcome, especially when we stop at what was asked instead of why it was needed. Outcomes are the customer problems we solve, and outputs are the solutions we build against them, which is why a request isn’t a roadmap for value.

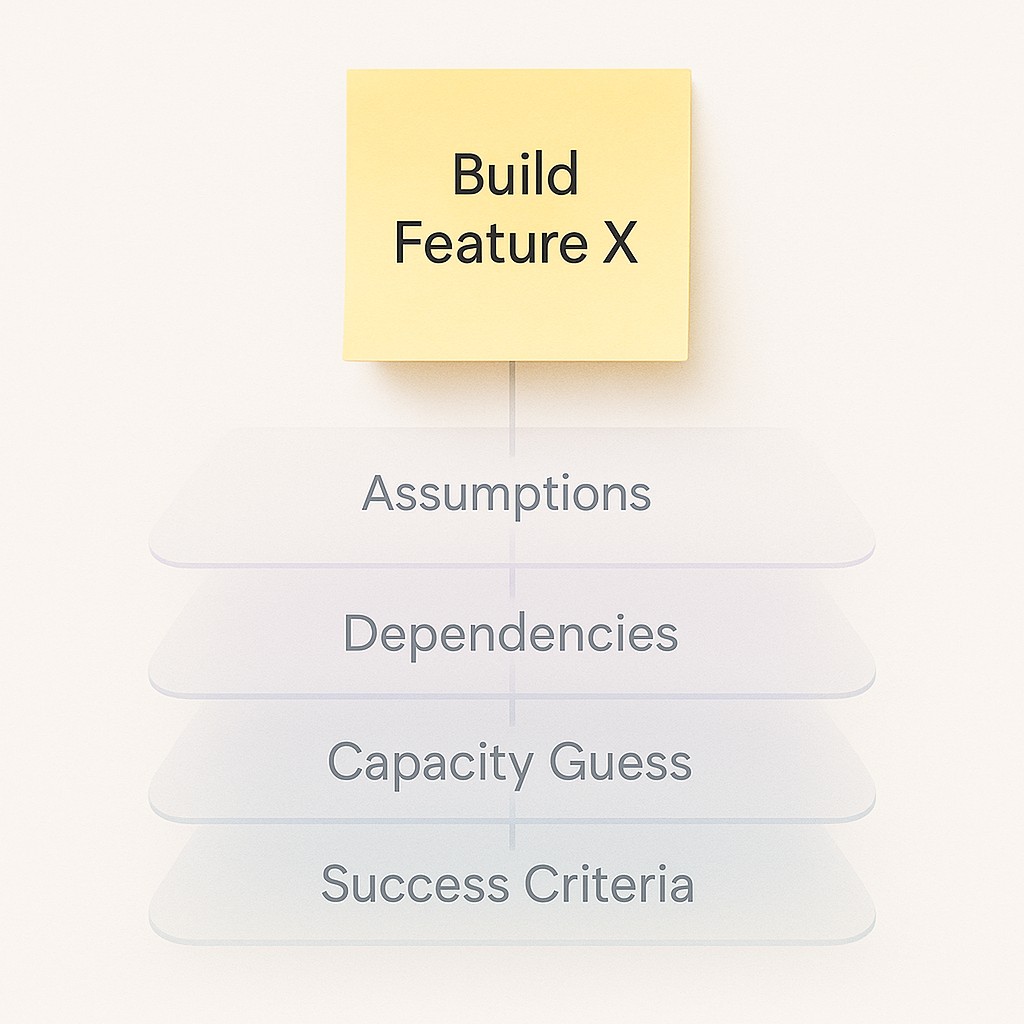

What tripped me up was all the assumptions hiding inside even the simplest asks. It’s easy to miss just how much scope creeps in, how dependencies lurk around the edges, and how many capacity guesses go unspoken. More than once, I committed to a deliverable before realizing that none of us had spelled out the real success criteria: who cares, how it will be used, and what “done” actually means.

The temptation is to chase every local win. Check the box, show the result, move fast. But great engineers don’t optimize for individual requests. When you zoom in too close, you risk degrading system-level value—solving the wrong problem or creating friction elsewhere.

I honestly felt deflated and confused that “done” didn’t feel like success. The shipping didn’t land. The impact wasn’t there.

Your job isn’t to make everyone happy. It’s to make the right tradeoffs and deliver long-term value. Step back. Align on what matters. Measure together, focusing on outcomes, not outputs. That’s what builds trust and makes the work last.

Turning Competing Requests into Alignment

Start with the map. I ask myself who actually benefits, how, and when. Laying out stakeholders and value streams is more than filling boxes. It’s tracing the flow so you see where business value moves across people, process, and tech. Truth is, I hadn’t ever mapped value streams. The first time I did, it shifted how I listened to every request and changed what I thought “progress” meant.

Next, define success metrics. Not just “does it work?” but “does it move the needle for users?” For each ask, I push for criteria tied to actual impact. Performance gains, reduced downtime, efficiency boosts. I want metrics everyone can recognize.

It’s easy to default to measuring finished work—tickets closed, code shipped. But the only thing that counts is what users feel. Did the system get faster, did errors drop, did teams find their flow? I realized that unless we’re using those metrics, we might be busy but not valuable. I talk this through with stakeholders until the numbers match what the business needs. It takes patience, especially when folks are used to broad goals, but clarity here sets a true north for the work.

Constraints are where things get real. Make tradeoffs explicit: capacity limits, platform quirks, sequencing headaches, operational risks hiding around the next sprint. Making tradeoffs visible can feel exposed, because there’s a risk in naming the hard edges before you start. But surprises later cost more. Error budgets are a good example: if a service is well inside its error budget, developers can push boundaries; when the budget runs tight, risk tolerance goes down and safety takes priority (see FireHydrant). Calling out these boundaries early removed the mystery around what was possible and forced us to translate technical limits into actual choices.
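To make the error-budget tradeoff concrete, here is a minimal Python sketch. Everything specific in it is an assumption for illustration: the 99.9% availability target, the 30-day window, the 25% caution threshold, and the names `ErrorBudget` and `release_posture` are hypothetical, not figures or tooling from our project or from FireHydrant.

```python
from dataclasses import dataclass


@dataclass
class ErrorBudget:
    slo_target: float        # assumed availability target, e.g. 0.999 for 99.9%
    window_minutes: int      # length of the measurement window in minutes
    downtime_minutes: float  # downtime observed so far in that window

    @property
    def allowed_minutes(self) -> float:
        """Total downtime the SLO tolerates over the window."""
        return (1 - self.slo_target) * self.window_minutes

    @property
    def remaining_fraction(self) -> float:
        """Share of the budget still unspent, clamped at zero."""
        if self.allowed_minutes == 0:
            return 0.0
        return max(0.0, 1 - self.downtime_minutes / self.allowed_minutes)


def release_posture(budget: ErrorBudget, caution_threshold: float = 0.25) -> str:
    """Turn the remaining budget into an explicit choice (threshold is an assumption)."""
    if budget.remaining_fraction > caution_threshold:
        return "ship features"
    return "prioritize reliability"


# Hypothetical 30-day window: 43,200 minutes, about 43.2 minutes of allowed downtime.
healthy = ErrorBudget(slo_target=0.999, window_minutes=30 * 24 * 60, downtime_minutes=10)
tight = ErrorBudget(slo_target=0.999, window_minutes=30 * 24 * 60, downtime_minutes=40)

print(release_posture(healthy))  # plenty of budget left
print(release_posture(tight))    # budget nearly spent
```

The point of a sketch like this is not the arithmetic. It is that the threshold becomes something stakeholders agree on in advance, so "can we take this risk?" has an answer before the argument starts.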

On Day 6, I draft a decision memo. Not some endless doc, just a sharp summary. Here’s what we will do, what we won’t, and why. It’s got the metrics we’ll validate, tied right to the value streams mapped out. If it’s not clear in the memo, it’s not clear enough to build.

At launch, align stakeholder expectations. I get all stakeholders to confirm: these are the tradeoffs, here’s how we’ll know it worked, and here’s our review cadence. Circling back to what we set out together, I make sure it’s not just in my head. It’s owned by the group.

Finally, put the scorecard to work. Track leading indicators and outcome metrics: the real signals, not just opinions or status updates. We adjust based on the data, not gut feelings. Seeing the scorecard weekly kept us honest about what was actually landing and where the system fell short. Progress didn’t get lost in optimism. We had facts, not stories.
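A scorecard like this does not need special tooling. Here is a rough Python sketch of one way to compute it, assuming each metric has an agreed baseline and target. The metric names, the numbers, and the 50% "on track" cutoff are all made up for illustration, and `Metric` and `scorecard` are hypothetical names rather than anything we actually ran.

```python
from dataclasses import dataclass


@dataclass
class Metric:
    name: str
    baseline: float  # value when the work started
    target: float    # value everyone agreed would count as success
    current: float   # latest measured value

    def progress(self) -> float:
        """Fraction of the baseline-to-target gap closed so far.

        Works for both higher-is-better and lower-is-better metrics,
        and goes negative when the metric moves the wrong way.
        """
        gap = self.target - self.baseline
        if gap == 0:
            return 1.0 if self.current == self.target else 0.0
        return (self.current - self.baseline) / gap


def scorecard(metrics, on_track_at=0.5):
    """Return (name, progress, status) rows; the cutoff is an assumed convention."""
    rows = []
    for m in metrics:
        p = m.progress()
        status = "on track" if p >= on_track_at else "needs attention"
        rows.append((m.name, round(p, 2), status))
    return rows


# Hypothetical weekly snapshot.
weekly = [
    Metric("monthly downtime (min)", baseline=100, target=70, current=76),
    Metric("onboarding time (days)", baseline=5, target=3, current=5.5),
]
for row in scorecard(weekly):
    print(row)
```

Measuring progress against the agreed gap, rather than reporting raw numbers, keeps the weekly review tied to what stakeholders signed up for and makes a regression show up as a negative value instead of a shrug.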

This is value-driven engineering, not a bureaucratic hoop. It’s how you translate friction and ambiguity into trust and direction. If you’re worried it slows you down, remember: it’s easier to move fast when you know you’re pointed the right way.

Walking the Alignment—A Concrete Example

Picture it. Three stakeholders in a room, each with their own must-haves and ultimatums. One wants uptime above all—call it X. Another asks for platform flexibility—Y. The third pushes on speed to delivery—Z. I invite you to walk this with me: it’s late February, the calls are fresh, and the decisions aren’t abstract anymore. These days, I start by asking each person to spell out what success means to them, in their own words. You get more honesty and less guessing that way.

Here’s how we run the conversation. First, I get the constraints out in the open. Our team had finite capacity, so not everything fit in this cycle. The platform could only stretch so far without major refactoring. No magic wand. We’re not superheroes.

Next come the tradeoffs. If we push for reduced downtime (X), we have to compromise on onboarding customizations (Y) or accept tighter delivery windows (Z). This is where I anchor the talk on system metrics everyone respects: reduced downtime, standardized deploy logs, throughput and error counts. It sounds simple, but here’s the admission: no matter how hard I try, each tradeoff means something gets left behind. Still, clarity around system impact, meaning what gets measured and how, makes sure everyone knows the real cost and payoff. The FireHydrant error budget case reminds me: when you make safety limits visible upfront, stakeholders have room to adjust priorities instead of arguing after you ship.

I’ll admit, sometimes the tradeoffs feel strangely familiar. In my garage I’ve got an old road bike I rebuilt last year. Thought I could get it to run faster and ride more comfortably for long stretches—all with a slim budget and YouTube tutorials. But every time I swapped out a tire or tinkered with the chain, gains in speed meant bumps in comfort, and vice versa. At some point I gave up on chasing both and just picked the ride I wanted most for the week. It wasn’t perfect, but it got me outside more.

Fast-forward to after shipping. I pull up the scorecard with the team. Downtime dropped by 24%. Platform flexibility held steady, but onboarding lagged. We moved the core metrics we agreed mattered, and the places we didn’t—well, that’s the accepted tradeoff. I keep wondering whether “Y” could have been boosted without hurting “Z,” but haven’t cracked that yet. We anchor these lessons right away, so nobody wonders later why “Y” or “Z” came up short. Instead of debating what was missed, we can point to what changed, how, and why.

None of this was flashy, but stakeholders could see exactly which outcomes landed at “Z-level”—and trusted how we got there. That trust is what lasts long after the feature fades.

When Alignment Feels Slow, but Actually Speeds You Up

Upfront alignment always looks like a time sink until you live through the aftermath of rushing. I spend more time before code so we waste less time after code. It’s a trade I’ll make every time, because the churn, rework, and escalation that come with misaligned launches swallow far more hours than thoughtful prep. Framing cuts down the back-and-forth cycle, which stabilizes iteration before it snowballs.

Let’s talk politics. Calling out tradeoffs isn’t comfortable. Saying “no” to misaligned asks felt scary until we had agreed metrics backing us. Most of us avoid tension, but transparency protects not just me—it shields the whole team from getting caught between shifting goals and revisionist history.

Momentum, though, is where the fear really spikes. Will all this clarity slow us down? Not in my experience. When you make priorities and constraints explicit, decisions snap into focus, not fog. At launch, everybody knows what success looks like and what’s out of scope. We stopped chasing “pleasing” and started “proving” by choosing to prioritize outcomes over features—measuring what lands, not what’s wished for—and the difference in trust is obvious. You move faster when you stop second-guessing, and you cut debates about what to do next.

Here’s my stance. Shipping requests isn’t the goal; measurable tradeoffs are. A request isn’t a roadmap. If you want value to endure past launch, your job is making the tough calls visible and accountable.
