Reduce dependency on AI tools: build resilient workflows that survive outages

When One Outage Shuts Down Your Whole Workflow

At 8:02 this morning, I hit ‘retry’ about 87 times before it finally sank in. ChatGPT was down. Not just flaky, but lights-out, both in my code and in every browser tab I had left open. Should have seen it coming, honestly.

It wasn’t just an inconvenience. My main project and my personal admin (writing snippets, triaging emails, even drafting a shopping list) came to a sudden halt. I checked the status page, hoping for a blip, but nothing came back. According to TechCrunch, both ChatGPT and its API suffered periodic outages lasting well past 1 p.m. PST, which left every one of my workflows hanging. So I just sat there, staring at my screen. I’m not someone who folds at the first logjam, but that silence was honestly paralyzing.

The outage made one thing obvious: I needed to reduce my dependency on AI tools, because my workflows weren’t just interrupted; they were broken. The bar had moved without my noticing. I’d come to expect a much faster pace, and I’d quietly stopped checking the route as long as answers kept arriving quickly from my “AI autopilot.”

Is this really how we all worked just two years ago?

There’s something weird about missing a tool this much. It’s not just that everything stops; it’s that everything gets heavy. A few weeks back, I lost cell service in the middle of a grocery run and couldn’t remember half the items on my list. I had to retrace my steps, ask a bored clerk for reminders, and ended up buying three kinds of mustard but forgetting bread. That same dull confusion came back this morning. The friction felt familiar; I had just forgotten how quickly it stacks up.

Here’s the thing. Speed is supposed to help, not become the only thing holding the system up. The lesson is clear: treat AI as an accelerator, not a crutch. If we build AI-optional paths and keep human validation in the loop, we keep our standards and momentum intact, even when the supposedly “unbreakable” tool breaks. My standards had shifted without my permission, but I’m not letting reliability become optional. Let’s fix this, for real.

Why Over-Reliance on a Single AI Tool Makes Everything Brittle

When you tie your coding, planning, and research to a single AI provider, you build in a hidden single point of failure. It feels ultra-fast day to day, until that link snaps and everything grinds to a halt. Most teams underestimate what that costs: industry estimates put unplanned downtime at around $14,056 per minute, and as high as $23,750 per minute for large enterprises. The real cost, though, is that momentum vanishes and even trivial tasks start to feel like brick walls.
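To put those per-minute figures in perspective, here is a back-of-the-envelope sketch. It assumes cost scales linearly with outage length at a flat rate, which is a simplification. The two rates are the estimates quoted above, and the 60-minute duration is only an example.

```python
# Per-minute downtime estimates quoted above (USD). Treat them as rough averages,
# not a model of any specific team's losses.
AVERAGE_COST_PER_MINUTE = 14_056
LARGE_ENTERPRISE_COST_PER_MINUTE = 23_750


def downtime_cost(minutes: float, cost_per_minute: float = AVERAGE_COST_PER_MINUTE) -> float:
    """Estimate outage cost as duration * flat per-minute rate (a linear simplification)."""
    if minutes < 0:
        raise ValueError("minutes must be non-negative")
    return minutes * cost_per_minute


if __name__ == "__main__":
    # Example: a one-hour outage at each rate.
    for label, rate in [
        ("average", AVERAGE_COST_PER_MINUTE),
        ("large enterprise", LARGE_ENTERPRISE_COST_PER_MINUTE),
    ]:
        print(f"1-hour outage ({label}): ${downtime_cost(60, rate):,.0f}")
```

At these rates, a single hour works out to $843,360 and $1,425,000 respectively.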

This morning drove that home. I had travel planning, side project brainstorming, and personal schedules all running in parallel, each relying on ChatGPT like a keystone. One moment, it’s smooth. “Hey, what’s the best route to the hotel?” “Draft me a status update.” The next, the tool vanishes and my browser degenerates into tab-chaos: Google Maps, outdated links, sticky notes, half-finished thought threads everywhere. Each new plan spun off another tab. Each missed feature meant another missing connection. I’ll admit it—I’d let my workflows become a network built on a single, silent core. That’s brittle optimism, not robustness.

Without instant feedback from AI, idea exploration got weirdly old-school. I found myself sketching solutions by hand, relying on half-remembered best practices and raw intuition that hadn’t really been tested lately. It’s like reaching for a muscle and finding it… softer than you’d thought. The pace dialed back and suddenly you’re asking, “Was I actually good at this before the autopilot?”

The gut-punch came when I tried to dig up research manually, write boilerplate code from scratch, and chase down answers on my own. Tasks that usually took seconds dragged on for hours. It was almost embarrassing how dependent I’d become on that constant feedback cycle, and I was shocked by how slow everything felt and how much friction simple tasks could cause.

Here’s the kicker. The dependency wasn’t just technical. Everyday logistics—reminders, emails, even running errands—started to feel like hostages. This morning was a reset: more than my code broke. Over-reliance had permeated habits I didn’t even think of as technical. Suddenly, fragility wasn’t just a word for my stack; it described my entire approach to getting things done.

So yes, I care about speed. But what got shaken today was the confidence that comes from having alternatives. It’s time to build work that keeps moving—whether AI is on, off, or anywhere in between.

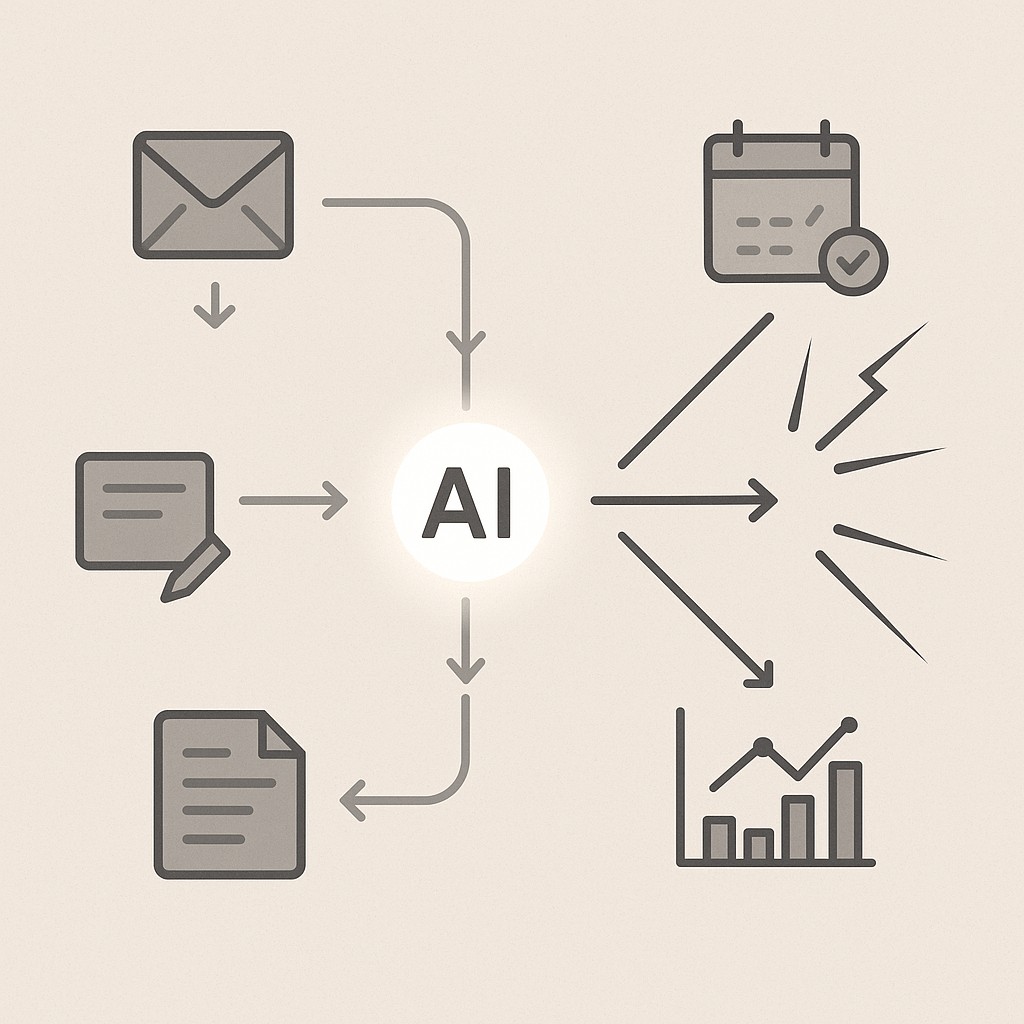

Building Workflows That Survive AI Outages

Let’s flip the problem. The real test is whether you’ve built AI-resilient workflows that keep your standards intact when every AI tab goes dark. If today’s outage shook your confidence, resilience is the metric worth watching. All this acceleration means nothing if the work collapses at the first sign of friction. Remember that tab-chaos I mentioned earlier? It’s the clearest indicator: if your system can’t handle a blackout, it’s not really a system.

Here’s my thesis, plain and simple. Reduce dependency on AI tools by treating them as accelerators, not the engine. You need baselines that run without machine help, fallbacks at the ready, and the flexibility to swap providers if something blows up. Don’t let your sense of quality or pace depend on one tool’s uptime.

This pivot isn’t just technical; it’s muscle memory. Six months ago I got lost in a new city when my phone battery died. I ended up reading street signs, asking for directions, and piecing together the logic from context. It was slower, but the skill stuck. Honestly, I still pack a paper map when I travel, just out of habit. Sometimes I like the analog fallback more; when the battery gives up, at least I’m not stranded. Building resilience into your workflows calls for the same kind of practice.

The practical baseline starts with simple, reliable templates: checklists for common tasks, code scaffolds ready for emergencies, and research plans that pull from trusted sources, none of them tied to a single model. For example, I keep a checklist for ECG interpretation taped to my monitor. Having this backup isn’t fancy, but it works. Structured human safeguards pay off: a simple checklist cut ECG interpretation errors from 279 to 70, a drop of roughly 75%.

That’s the power of a manual fallback anyone can follow. I’ll admit, working from these baselines can feel slower at first. You spend an extra minute, maybe five, setting up. But when disruption hits, you don’t lose hours. These tools pay you back every time the lights blink out. That’s how you build for messy reality—not just for a slick demo.
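To make “code scaffolds ready for emergencies” concrete, here is a minimal sketch that writes a project skeleton from templates kept in plain Python, with no model involved. The `SCAFFOLD` layout, its placeholders, and `write_scaffold` are hypothetical; swap in whatever your projects actually need.

```python
import tempfile
from pathlib import Path
from string import Template

# Hypothetical emergency scaffold: template path -> template body.
# $name, $description, and $package are placeholders filled in at write time.
SCAFFOLD = {
    "README.md": "# $name\n\n$description\n",
    "src/$package/__init__.py": '"""$name package."""\n',
    "tests/test_smoke.py": "def test_smoke():\n    assert True\n",
}


def write_scaffold(root: Path, **values: str) -> list[Path]:
    """Render each template path and body with `values`, then write it under `root`.

    Template.substitute raises KeyError for a missing placeholder, so a missing
    value fails loudly rather than leaving literal $placeholders in the output.
    """
    written = []
    for rel_path, body in SCAFFOLD.items():
        target = root / Template(rel_path).substitute(values)
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(Template(body).substitute(values), encoding="utf-8")
        written.append(target)
    return written


if __name__ == "__main__":
    # Write into a throwaway directory so the demo leaves nothing behind.
    with tempfile.TemporaryDirectory() as tmp:
        for path in write_scaffold(Path(tmp), name="demo", description="Offline scaffold", package="demo"):
            print(path.relative_to(tmp))
```

Keeping the templates as plain data means the scaffold survives any outage and is easy to version alongside your code.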

Validate your process with human-first loops. A quick code review, a “rubber duck” idea session, or a routine decision checkpoint that happens in conversation. No calendar invites, no missed interpretations—just a peer or two who keep quality from slipping. In outages, that’s what holds the standard.

Don’t let smooth AI workflows become the only standard. Build in the messy, manual, sometimes slower alternatives—so that when an outage comes, you’re the one still moving.

How to Make Your Workflows Outage-Proof

Start by auditing every critical path in your workflow and flag any places depending on just one tool. Treat today’s outage as a built-in stress test. Which steps froze when ChatGPT went down? Jot down everything stalled or lost accuracy—code generation, content drafts, scheduling, even admin tasks. If your “retry” muscle memory kicked in more than once, that’s a pain point to circle.
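One way to make this audit repeatable is to write your workflow down as data and ask which steps would freeze if a given tool went dark. This is a minimal sketch. `Step`, `stalled_by`, and the sample workflow are hypothetical, and the rule is deliberately simple: a step stalls only if every tool it can use is the one that’s down and nobody can do it by hand.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One step in a workflow and what it needs to keep moving."""
    name: str
    tools: list[str]               # tools that can perform this step
    manual_fallback: bool = False  # can a person do it by hand if every tool is down?


def stalled_by(steps: list[Step], down_tool: str) -> list[str]:
    """Names of steps that freeze when `down_tool` is unavailable.

    A step stalls if every tool it can use is `down_tool` and it has no manual fallback.
    """
    return [
        s.name
        for s in steps
        if s.tools and all(t == down_tool for t in s.tools) and not s.manual_fallback
    ]


if __name__ == "__main__":
    # Hypothetical audit of this morning's workflow.
    workflow = [
        Step("code generation", ["ChatGPT"]),
        Step("content drafts", ["ChatGPT", "Claude"]),
        Step("scheduling", ["ChatGPT"], manual_fallback=True),
        Step("email triage", ["ChatGPT"]),
    ]
    print(stalled_by(workflow, "ChatGPT"))  # ['code generation', 'email triage']
```

Each name in the output is a pain point to circle: either add a second tool or practice doing it by hand.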

Maintain human baseline skills for the things you do most: scaffolding code, outlining briefs, sketching out experiments. Run through each step deliberately, without AI. It feels clunky, even old-fashioned, at first, and that’s normal. The first run is slow, but the friction shows you where your instincts need shoring up. After a few passes, patterns emerge and what felt rough starts to feel fluent again.

Diversify your AI providers so no workflow is locked to a single one. Keep your prompts, templates, and data schemas somewhere portable: grab-and-go files, not app silos. Swapping tools then becomes a decision (and maybe a status page check), never a last-minute fire drill.
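Here is a minimal sketch of what “portable” can mean in practice: prompts live in a plain JSON file and get filled in with Python’s standard `string.Template`, so the rendered text can be pasted into any provider. The file shape, `load_prompts`, `render`, and the sample prompt are assumptions for illustration, not a format any tool requires.

```python
import json
import tempfile
from pathlib import Path
from string import Template


def load_prompts(path: Path) -> dict[str, Template]:
    """Load provider-neutral prompt templates from a plain JSON file.

    Assumed shape for this sketch: {"prompt_name": "text with $placeholders"}.
    """
    raw = json.loads(path.read_text(encoding="utf-8"))
    return {name: Template(text) for name, text in raw.items()}


def render(prompts: dict[str, Template], name: str, **values: str) -> str:
    """Fill in one template; the result is plain text usable with any provider."""
    return prompts[name].substitute(values)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        prompt_file = Path(tmp) / "prompts.json"
        prompt_file.write_text(json.dumps({
            "status_update": "Draft a three-sentence status update for $project. Audience: $audience.",
        }), encoding="utf-8")
        prompts = load_prompts(prompt_file)
        print(render(prompts, "status_update", project="the billing migration", audience="my team"))
```

Because nothing here depends on a vendor’s app, the same file works whether you are pasting into a chat window or calling an API.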

Set an AI outage contingency before you need it. Offline notes, a folder of local model scripts, a plaintext “manual mode” checklist hanging by your desk—anything to keep you from mashing the ‘retry’ button and praying to the internet gods. That reflex is real, but it doesn’t solve outages.
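A contingency can also live in code: try providers in a fixed order, and if all of them fail, hand back the manual-mode checklist instead of retrying forever. This is a sketch under loose assumptions. A “provider” here is any function from prompt to text, the `primary` and `backup` functions are stand-ins rather than any real SDK’s API, and the checklist wording is hypothetical.

```python
import logging
from typing import Callable, Sequence

# A provider is anything that turns a prompt into text. These are placeholders,
# not the API of any real SDK.
Provider = Callable[[str], str]

MANUAL_MODE_CHECKLIST = """Manual mode:
1. Open the local template for this task.
2. Draft it by hand, following the checklist.
3. Ask a peer for a quick review before shipping."""


def ask_with_fallback(prompt: str, providers: Sequence[tuple[str, Provider]]) -> tuple[str, str]:
    """Try each provider in order; if every one fails, return the manual checklist.

    Returns (source, text) so you always know where an answer came from.
    """
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # a real version should catch the SDK's specific errors
            logging.warning("%s failed (%r); trying the next option", name, exc)
    return "manual", MANUAL_MODE_CHECKLIST


if __name__ == "__main__":
    def primary(prompt: str) -> str:
        raise ConnectionError("service unavailable")  # simulate an outage

    def backup(prompt: str) -> str:
        return f"backup draft for: {prompt}"

    print(ask_with_fallback("status update", [("primary", primary), ("backup", backup)]))
```

The key design choice is that the last resort is a human procedure, not another retry.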

Define a validation cadence: how often you sanity-check against your old standards, and who helps you judge “good enough.” Name your review step and write down its benchmarks. Good enough used to be… good enough. Now, after a dozen AI-accelerated sprints, it’s easy to let standards drift higher or lower without realizing it. Bring in a second set of eyes regularly, or schedule brief cooldowns to check work by hand. Standards stick when you make them explicit, even as speed returns.
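Writing benchmarks down means they apply the same way whether a draft came from a model or from your own keyboard. Here is a minimal sketch with three hypothetical checks. Automated checks like these only catch drift in the obvious things; the human reviewer still judges everything else.

```python
from typing import Callable

Check = Callable[[str], bool]

# Hypothetical benchmarks: each has a name you can say out loud in review.
BENCHMARKS: dict[str, Check] = {
    "under 200 words": lambda text: len(text.split()) <= 200,
    "states a next step": lambda text: "next step" in text.lower(),
    "no leftover TODOs": lambda text: "TODO" not in text,
}


def review(draft: str, benchmarks: dict[str, Check] = BENCHMARKS) -> list[str]:
    """Return the names of benchmarks the draft fails (an empty list means it passes)."""
    return [name for name, check in benchmarks.items() if not check(draft)]


if __name__ == "__main__":
    draft = "Shipped the fix. Next step: monitor error rates. TODO: link the dashboard."
    print(review(draft))  # ['no leftover TODOs']
```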

If you’re worried about losing delivery speed, timebox manual loops and split the work so the slowdown hits in parallel, not in sequence. Expect to ship slower at first; that’s just reality. The trade is that your momentum doesn’t collapse the next time an outage hits, because resilience, not raw speed, is what keeps real execution moving.
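The parallel-versus-sequence point is plain arithmetic. Sequential steps add up, while independent parallel steps cost only as much as the slowest one. A timebox caps how long any single manual step can run. This sketch assumes the steps are truly independent and that someone is free to take each one; the durations are made up.

```python
def timebox(durations: list[float], box: float) -> list[float]:
    """Cap each manual step at the timebox (you stop and ship what you have)."""
    return [min(d, box) for d in durations]


def wall_clock(durations: list[float], parallel: bool) -> float:
    """Elapsed time: steps add up in sequence; in parallel the slowest step dominates.

    The parallel case assumes the steps are independent and someone is free for each.
    """
    if not durations:
        return 0
    return max(durations) if parallel else sum(durations)


if __name__ == "__main__":
    manual_steps = timebox([30, 45, 20], box=40)  # minutes -> [30, 40, 20]
    print(wall_clock(manual_steps, parallel=False))  # 90
    print(wall_clock(manual_steps, parallel=True))   # 40
```

In this example, running the same three manual steps in parallel cuts the slowdown from 90 minutes to 40.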

None of this has to be perfect on day one. Audit, practice, diversify, set fallbacks, validate—then loop back and trim friction where it makes sense. AI can—and should—become an accelerator again, but not the linchpin. That’s how you keep standards high and keep moving, no matter what today or tomorrow throws at you.

When you need solid drafts fast without locking into one provider, use our app to generate AI-powered content, keep your templates portable, and maintain momentum even if your go-to tool has an outage.

Switching Providers and Keeping Momentum—A Quick Run-Through

So there I was—ChatGPT out cold, project deadlines still looming. I didn’t wait on the outage. I kicked over to my baseline setup right away. Out came the local code templates, the notebook with my manual task list, and a Slack ping for pair review. Tools slowed down, but the work kept moving—even if it felt a bit like riding a bike with a flat tire.

When switching AI tools, the workflow isn’t complicated. Prompts aren’t proprietary and most tasks—code generation, Q&A, planning—translate pretty cleanly between providers. I copy-pasted my prompt flow into a different platform (Claude this time), checked outputs against my own standard, and kept my review loop running. By keeping prompts clear and portable, you can sidestep lock-in and stay productive, no matter which AI goes dark.

That’s the bar now—for resilience, not dependency. Today proved it. When you build fallback routines and keep your standards in hand, momentum and trust don’t freeze just because one tool does. Next outage? I still haven’t figured out how to stop reaching for that ‘retry’ button first, but at least the rest is covered.

You’ll already know what to do.

Enjoyed this post? For more insights on engineering leadership, mindful productivity, and navigating the modern workday, follow me on LinkedIn to stay inspired and join the conversation.