Scale Engineering Teams Efficiently: 3 Proven Guardrails

When More Compute Isn’t the Answer

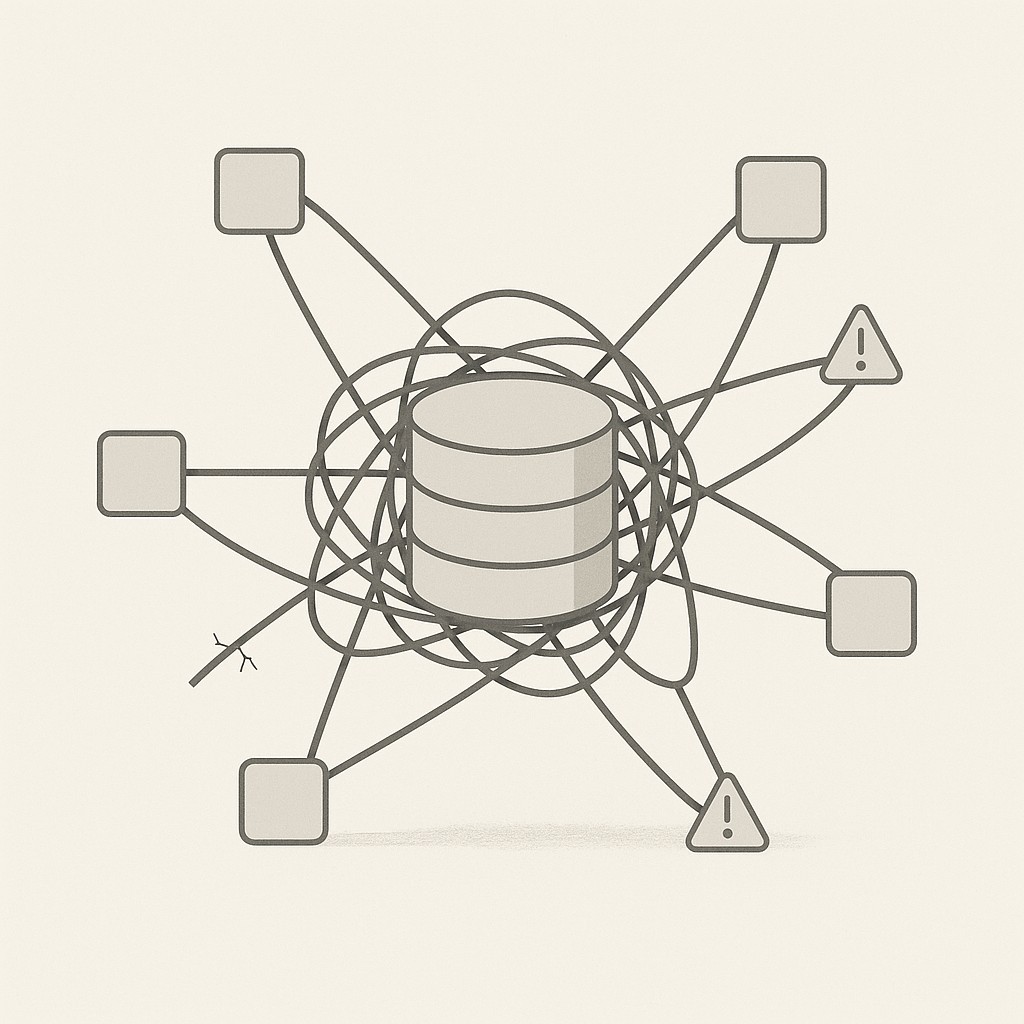

A few years ago, I sat down with a team that had built their core product around a NoSQL database—hard-wired, spread across a tangle of VMs. They’d been fighting fires for months. When they reached out asking how to scale engineering teams efficiently, I’ll be honest—I hesitated. Their challenge was familiar, and so was the temptation behind it. When growth outpaces architecture, do you fix the design, or just shovel in more budget and hope it holds?

“Money’s not an issue,” they assured me. “We’ll throw all the compute you want at it.” You might have heard the same line. It sounds decisive, but it usually tees up disappointment instead of true progress.

Here’s what I actually saw. The services weren’t just co-located—they were tightly coupled, stitched to each other and the database with hard-coded paths and hidden assumptions. Any small failure on one VM had a way of multiplying across the stack. Release days turned into marathons, with every update bouncing off a web of brittle dependencies nobody dared to touch. The architecture had no room to breathe. Adding compute didn’t ease the friction. It just multiplied the blast radius whenever something broke. Like doubling down on a shaky foundation, each new resource made troubleshooting and coordination harder, not easier.

I’ve been scaling engineering teams and platforms long enough to know it’s never just about doing more or spending more. Every shortcut on design or alignment shows up tenfold when you try to scale.

If you don’t address complexity and coordination first, no budget in the world will save you. You’ll just end up scaling the mess. Better to pause and lay the groundwork now—before runaway costs and fire drills become the new normal.

Once, during a code freeze late on a Friday, we realized the primary database was being hammered by a scheduled job nobody remembered writing. It felt like opening a closet and finding last year’s fire still smoldering. We never did figure out exactly who owned that script. The whole thing got patched over until the next crisis, which was sort of the point—we were always patching, never fixing.

Scale Makes Messes Bigger

Here’s the uncomfortable truth. Scale doesn’t just expose inefficiency—it multiplies it. The cracks in your system, the awkward handoffs, the calls that take a few seconds longer than they should—at scale, those hiccups become bottlenecks, outages, and weeks lost chasing root causes that never go away for good. I talk to leaders who expect scaling to be a spotlight rather than an amplifier, but the messy parts don’t just show up clearer; they show up everywhere, all at once. If you’re feeling the strain now, just imagine what happens when you pile more resources on top.

Six months ago, I watched cost spirals kick in fast. Services would stay up for days, idle but racking up bills. Teams spun up their own flavors of tooling, each claiming to have a “temporary workaround” that quietly became permanent. Infrastructure ballooned—not because demand justified it, but because throwing extra compute felt easier than designing for efficiency. You look back and realize half the spend was just keeping the mess afloat, not delivering any more value.

But here’s what really derails scaling attempts. Hardware doesn’t untangle tight coupling, and more budget can’t fix misaligned interfaces. Adding hardware can’t overcome what can’t be parallelized—the speedup always hits a wall at parts that remain tightly coupled. The overhead from each team and service trying to coordinate, tiptoe around brittle paths, and account for exceptions starts to outpace any raw throughput gains you hoped that new VMs might deliver. No amount of hardware was going to save them from a system that couldn’t coordinate or evolve.
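The wall described above is what Amdahl's law formalizes. As a rough illustration, let p be the fraction of the work that can run in parallel and N the number of machines (both symbols are introduced here for the example, not measured from the client's system). The best-case speedup is then:

```latex
S(N) = \frac{1}{(1 - p) + \frac{p}{N}}, \qquad \lim_{N \to \infty} S(N) = \frac{1}{1 - p}
```

With an assumed p = 0.8, sixteen machines give S(16) = 1 / (0.2 + 0.05) = 4, and no number of machines gets past 5. Coordination overhead is not modeled in this formula, and in practice it only pushes the real number lower.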

That engagement reminded me of something. The real benchmark isn’t “does it work when we buy our way out of trouble,” but “what’s the performance when we’re operating under healthy constraint?” You want to know it runs lean and aligned before you scale, not after. Because if your system only behaves at scale, it’s probably hiding issues that will become impossible, and expensive, to fix once you’re deep into growth. It takes discipline and a willingness to slow down now so you aren’t sprinting in circles later.

A Framework to Scale Engineering Teams Efficiently

Let’s cut through the noise. If you want efficient engineering scaling without constant drama, you need a deliberate, three-part discipline. First, reduce complexity so the system’s shape makes sense under stress. Second, enforce cost and SLO guardrails that catch problems before they turn painful. Third, align your interfaces and operating cadence so that your teams and technology behave as expected when you crank up the volume. Treat this framework like a circuit breaker: it should trip on a small fault before that fault spreads to everything else.

Done right, scaling just feels like turning up a knob, not rolling dice and hoping for the best. Most teams end up firefighting because they jump straight to capacity, skipping the steps that make growth repeatable. If you’ve ever felt like more servers only brought bigger headaches, you know what I mean.

Start by reducing complexity: choose your seams deliberately. Decouple write paths from heavy queries so load spikes don’t take out the whole stack. Stop with the hard-coded service calls that lock you into brittle patterns. Pull every last bit of configuration out of code and into something you can change without a deploy. This isn’t about over-engineering. It’s about isolating failure domains so one hiccup doesn’t cascade across your system. Your services need the freedom to fail (and recover) on their own, not drag down everything else when something goes sideways.
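To make the "configuration out of code" step concrete, here is a minimal sketch in Python. The service name, the variable names `ORDERS_SERVICE_URL` and `ORDERS_TIMEOUT_SECONDS`, and the defaults are all hypothetical; the point is only that the address and timeout are read at startup rather than hard-coded at the call site.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceConfig:
    """Settings read at startup instead of being baked into the code."""
    orders_url: str
    timeout_seconds: float


def load_config(env=None):
    """Build the config from environment variables, falling back to defaults.

    The variable names and defaults are illustrative assumptions.
    """
    env = os.environ if env is None else env
    return ServiceConfig(
        orders_url=env.get("ORDERS_SERVICE_URL", "http://localhost:8080"),
        timeout_seconds=float(env.get("ORDERS_TIMEOUT_SECONDS", "2.0")),
    )


# Callers depend on the config object, never on a literal host or path.
config = load_config()
```

Environment variables still need a process restart to take effect. A config service or feature-flag system would go further toward changing behavior with no deploy at all, but the seam is the same: one place where the value enters the program.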

Don’t wait for disaster to enforce guardrails. Define per-service SLOs with error budgets clear enough that nobody has to argue about what “bad” looks like, then pair them with cost guardrails. Use unit economics and spend caps that trip alerts before bills get out of hand, not after. Most organizations have untouched cloud savings of 10 to 20 percent, even at scale, according to McKinsey, so there’s always slack if you look for it. If your spend can surprise you, your system isn’t ready to grow.
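Here is a small sketch of what those two guardrails can look like as arithmetic. The function names, the 99.9% target, and the 80% alert threshold are assumptions chosen for illustration, not recommendations from any particular tool.

```python
def error_budget_remaining(slo_target, total_requests, failed_requests):
    """Return the unspent fraction of the error budget (negative if overspent).

    slo_target is the success-rate objective, e.g. 0.999 for "three nines".
    """
    if not 0.0 < slo_target < 1.0:
        raise ValueError("slo_target must be strictly between 0 and 1")
    allowed_failures = (1.0 - slo_target) * total_requests
    return 1.0 - failed_requests / allowed_failures


def cost_per_request(monthly_spend, monthly_requests):
    """Unit economics: what one request costs to serve."""
    return monthly_spend / monthly_requests


def spend_alert(spend_to_date, monthly_cap, alert_fraction=0.8):
    """True once spend crosses the alert line, which sits below the cap."""
    return spend_to_date >= alert_fraction * monthly_cap


# Example: 250 failures out of 1,000,000 requests against a 99.9% SLO
# leaves roughly three quarters of the budget unspent.
remaining = error_budget_remaining(0.999, 1_000_000, 250)
```

The useful property is that both checks produce a number a team can agree on in advance. When `remaining` goes negative or `spend_alert` returns true, the conversation is about what to do, not about whether there is a problem.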

Get explicit with your interfaces. Lock down API contracts so every team knows exactly what each service expects and promises, no guessing. Layer in migration protocols: versioning, clear deprecation timelines, and required cross-team reviews before anything breaks compatibility. That’s how dependencies stay predictable, even when traffic spikes or usage patterns shift. Unlike those marathon release days from earlier, systems with explicit contracts have room to breathe.
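One way to make "before anything breaks compatibility" checkable is to diff contracts automatically. The sketch below assumes a deliberately simplified contract format, a dictionary mapping response field names to type names. Real contracts (OpenAPI, protobuf, and so on) are richer, so treat this as the shape of the check rather than a drop-in tool.

```python
def breaking_changes(old_contract, new_contract):
    """List changes in new_contract that would break clients of old_contract.

    Each contract maps a response field name to a type name (a simplified,
    hypothetical format). Added fields are treated as non-breaking.
    """
    problems = []
    for field, old_type in old_contract.items():
        if field not in new_contract:
            problems.append(f"removed field: {field}")
        elif new_contract[field] != old_type:
            problems.append(
                f"type changed: {field} ({old_type} -> {new_contract[field]})"
            )
    return problems


v1 = {"id": "string", "total": "number"}
v2 = {"id": "string", "total": "string", "currency": "string"}

# A non-empty result is the trigger for a version bump and a cross-team review.
issues = breaking_changes(v1, v2)
```

Run as a CI step, a check like this turns the cross-team review from a courtesy into a gate: additive changes pass quietly, and removals or type changes stop the merge until the owning teams have agreed on a deprecation timeline.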

Set your operating cadence now, not when you’re sweating through an outage. Schedule regular load tests against your defined SLOs, ship on release trains, run incident reviews, and cycle through capacity planning before growth forces your hand. As everything gets bigger and faster, coordination needs to scale too. Capacity planning directly supports SLO compliance by ensuring enough resources to meet reliability and performance targets, according to Harness, and that discipline pays off every single time you hit a new limit.
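As a sketch of how a load test and a capacity plan can both be tied to an SLO, the Python below checks a latency percentile against a threshold and estimates replica counts with headroom. The nearest-rank percentile method, the 60% target utilization, and all function names are assumptions made for the example.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def meets_latency_slo(latencies_ms, pct, threshold_ms):
    """True if the pct-th percentile latency is within the SLO threshold."""
    return percentile(latencies_ms, pct) <= threshold_ms


def replicas_needed(peak_rps, rps_per_replica, target_utilization=0.6):
    """Replicas required to serve peak load while leaving headroom.

    rps_per_replica should come from a load test, not from a guess.
    """
    return math.ceil(peak_rps / (rps_per_replica * target_utilization))
```

The design choice worth noting is the order of operations: measure per-replica throughput under the SLO first, then size the fleet from that measurement. Sizing first and hoping the SLO holds is how capacity ends up covering for design problems.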

Finally, clean up your tooling and data. Measure utilization service by service, retire whatever’s idle, consolidate tools that overlap, and tie budgets to real outcomes so spend stays proportional as teams multiply. There’s always hidden sprawl you’re “going to clean up,” but do it before new hires or new features turn small inefficiencies into chronic issues. I’ve had to dig my way out of these messes too many times not to flag it.
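A utilization review does not need much machinery to start. The sketch below assumes you can export, per service, an average CPU utilization and a monthly cost. The record shape, the service names, and the 5% threshold are all hypothetical.

```python
def idle_candidates(services, cpu_threshold=0.05):
    """Return services below the CPU threshold, most expensive first.

    Each service is a dict with "name", "avg_cpu" (0.0 to 1.0) and
    "monthly_cost" keys. This shape is an assumption for the example.
    """
    idle = [s for s in services if s["avg_cpu"] < cpu_threshold]
    return sorted(idle, key=lambda s: s["monthly_cost"], reverse=True)


# Hypothetical inventory for illustration only.
inventory = [
    {"name": "checkout", "avg_cpu": 0.45, "monthly_cost": 3200},
    {"name": "legacy-sync", "avg_cpu": 0.00, "monthly_cost": 900},
    {"name": "reports", "avg_cpu": 0.02, "monthly_cost": 1500},
]

retire_first = [s["name"] for s in idle_candidates(inventory)]
```

Low CPU alone does not prove a service is unused, so the output is a list of questions to ask owners, ordered by how much each answer is worth.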

You don’t have to slow growth. It’s about making scaling boring, coherent, and reliable. Lay down these habits now, and you’ll spend less time firefighting and more time delivering what actually matters.

Coordinating Scale: How Cohesion Beats Drift

It’s easy to overlook, but as your teams and nodes multiply, the shared rhythm that once kept things smooth starts to slip. Context that used to be obvious gets fragmented between groups, operating cadences fall out of sync, and—you feel it—the ambient trust thins out. Cohesion isn’t culture fluff. It’s the operational glue that lets complexity act like coordination, not chaos. Ignore it, and predictability evaporates, no matter how sharp your architecture looks on paper.

Think about running a kitchen or leading an orchestra. More hands, more instruments: suddenly, cues matter and shared timing keeps plates from crashing or music from unraveling. It’s the same with on-call rotations—one missed handoff, or APIs without real contracts, and what felt tight-knit turns brittle overnight.

So get concrete. Keep a single source of truth for your interfaces: the canonical docs everyone can refer back to when things get messy. Publish decision logs where choices and tradeoffs are visible, not lost in backchannel threads. Run cross-team design reviews and, maybe most important, rotate ownership enough to prevent key knowledge from fossilizing in one corner of the org. This way, even as people shift and grow, context doesn’t fall through the cracks.

With the client I mentioned, the answer wasn’t a truckload of new VMs. It was getting the teams back to a regular operating cadence: shared contracts, predictable hand-offs, and clean interfaces. That’s when capacity started behaving reliably and quietly, and the cycle of emergency fire drills ended. Cohesion looked boring, but it was the difference between endless chaos and scale that actually works.

Even now, I wish I had a neat checklist that made cohesion automatic, but there’s always this tension between letting teams run fast and keeping everyone in sync. I know we’re supposed to strike the perfect balance. I’ve just never seen it last for long.

Make Scaling Boring, Not Risky

I get the hesitation. Building this discipline sounds like trading speed for bureaucracy. You worry it’ll stall momentum just when things are starting to move. But skipping it is like ignoring turbulence before takeoff. Tightening things up now is what actually keeps you moving fast once you’re airborne.

Here’s a practical runway. For the first 30 days, map every hard dependency and pin down SLOs that matter. As you reach the 60-day mark, hunt down three high-risk couplings (the spots where failure always cascades) and break them. Set a unit-cost budget for each major service so nobody can spend their way into a mess. By day 90, you should be simulating load under constraint, tracking error budgets, and only then adding capacity. Never before. Done right, scaling feels like turning a volume knob. Predictable, measured, and almost dull in its reliability.
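Once the dependency map exists, picking the three couplings to break can start from a simple ranking. The sketch below assumes every mapped dependency is a hard one, meaning a failure takes down every direct and indirect caller. The service names are hypothetical.

```python
def blast_radius(depends_on):
    """For each service, return the set of services that fail along with it.

    depends_on maps a service name to the services it calls directly.
    Assumes every edge is a hard dependency (no fallbacks or timeouts).
    """
    # Invert the edges: for each service, who calls it directly?
    callers = {}
    for service, deps in depends_on.items():
        callers.setdefault(service, set())
        for dep in deps:
            callers.setdefault(dep, set()).add(service)

    radius = {}
    for service in callers:
        seen, stack = set(), [service]
        while stack:
            for caller in callers[stack.pop()]:
                if caller not in seen and caller != service:
                    seen.add(caller)
                    stack.append(caller)
        radius[service] = seen
    return radius


def riskiest(depends_on, top_n=3):
    """Services with the largest blast radius; ties broken by name."""
    radius = blast_radius(depends_on)
    return sorted(radius, key=lambda s: (-len(radius[s]), s))[:top_n]


# Hypothetical map: web calls orders and auth, both of which lean on db.
deps = {"web": ["orders", "auth"], "orders": ["db", "auth"], "auth": ["db"], "db": []}
targets = riskiest(deps)
```

The ranking only says where a failure would spread the furthest. Deciding how to break each coupling (a cache, a queue, a timeout with a fallback) is still a design conversation, but it is a much shorter one when everyone is looking at the same three names.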

So don’t let complexity slip past you while you’re chasing speed. Simplify first, put guardrails on, align the interfaces and your cadence—then turn up capacity so scale amplifies what works, not what’s fragile. This is what success actually looks like. Stable, boring growth, cost under control, and real reliability you can trust.