Update Cost Models with AI: When Cloud Convenience Gets Costly

When Cloud Convenience Gets Costly—and Agents Start Rewriting the Story

I’ve always been a cloud fan, especially back in those early startup years when moving fast felt like the only way forward. We were small, scrappy, hungry for traction, and the promise of spinning up infrastructure without wrangling hardware was magic. But it didn’t take long for our cloud bills to start creeping—from $10K or so a month to the kind of $100K invoices that make you double-check the numbers each billing cycle. At first, I barely cared.

I was so preoccupied with launching features and keeping systems running that I’d pay whatever premium it took to avoid getting bogged down in server-rack drama or midnight patching. Looking back, I can see how much I valued convenience, and how quickly the stakes (and the friction) grew as we scaled.

We made the trade on purpose, spending more to move faster, with flexibility baked in from the start. When your whole operation depends on quick pivots and launches, paying extra for managed services feels justified. You skip the long-term commitments and turn big fixed investments into smaller, more flexible operating expenses. That’s why the cloud’s velocity premium is so compelling. There were no leases for server rooms, no negotiations with hosting providers. Just a credit card, some clicks, and your app was live and scaling.

Here’s the hidden value that made it hard to walk away: the cloud let us dodge the biggest management headache. No servers to babysit, no ops team to assemble, and no need for deep expertise in monitoring or patching. I’d rather pay more to manage less. And let’s be honest—most folks reading this have probably made the same deal at least once.

But the game is changing, and it’s time to update our cost models for AI. Agents are starting to handle much of the grunt work that used to require whole teams.

When I say “agents,” I mean scriptable, always-on automations that can set up new infrastructure, watch your systems, respond to alerts, and keep your deploy pipelines flowing. It’s not just spinning up a VM with a bot. It’s compressing hours (sometimes days) of manual effort into near-instant orchestration. When AIOps tooling automates incident response, your team spends less time firefighting by hand, and the midnight wake-ups get rarer. Suddenly, the hands-on toil that used to come with “owning” infrastructure looks a lot less intimidating.

So when I revisit those big bills, especially once we hit the $100K/month mark, I see a real case for cutting the convenience premium. If agents can manage what used to take a whole team, and do it on infrastructure we own or rent more cheaply, the underlying economics shift. That changes the math.

Same Equation, New Inputs—Why Agent Automation Redraws the Cost Map

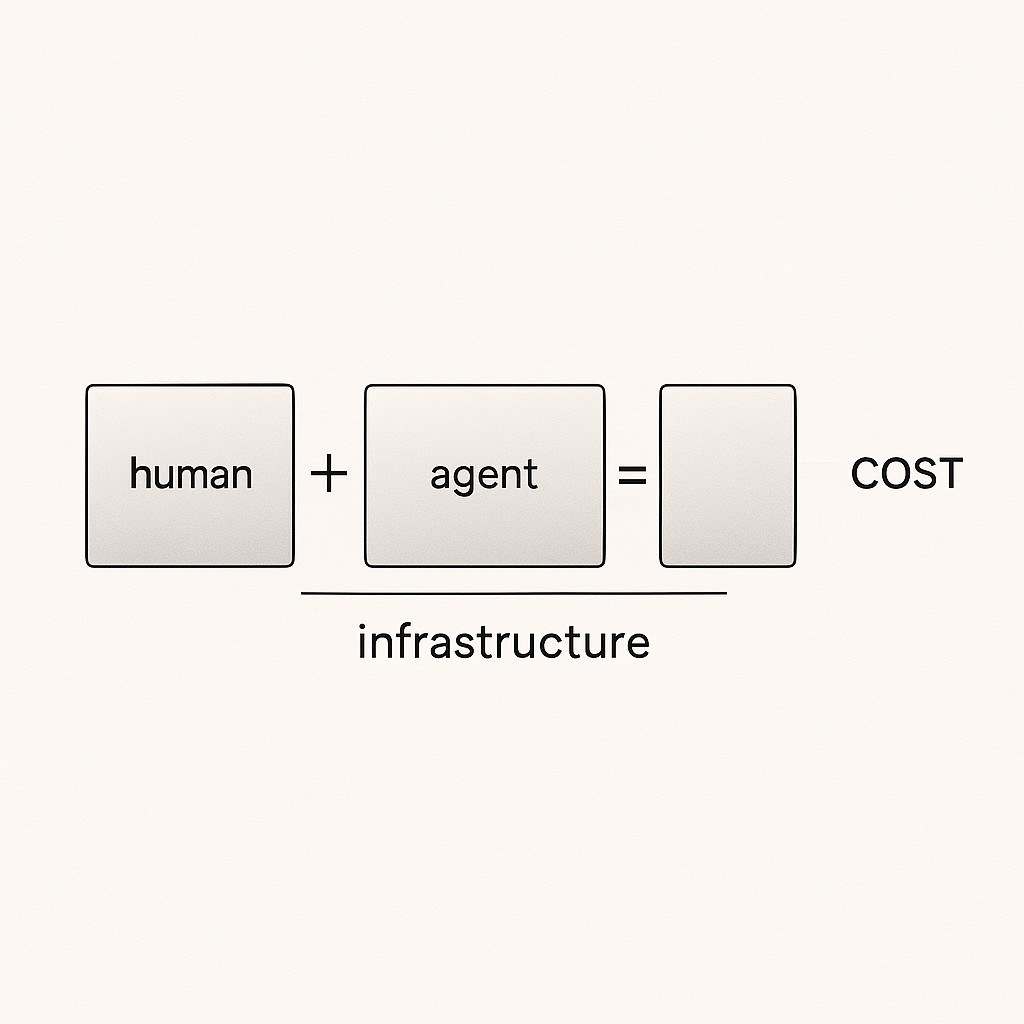

The formula hasn’t changed much since the cloud first landed. Total spend equals management cost plus infrastructure plus reliability. What’s shifting is the management side. Agents are driving it toward near-zero for a lot of stacks I used to sweat over. That overhead—the piece we paid cloud premiums to avoid—is quietly vanishing.

If you break down what really drives your costs, it comes down to a few things: your team cost (which now has to include AI agents), your underlying infrastructure (compute, storage, networking), the reliability or uptime targets you set, and the operational safety net agents provide. The effect is direct. Agents cover monitoring, alert remediation, and deployment flows. What used to require people in seats and Slack channels watching dashboards now runs quietly in the background.
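Written out, the cost equation from this section looks like the following. Splitting the management term into people and agents is my own reading of the paragraph above, not a standard formula:

```latex
C_{\text{total}} = C_{\text{mgmt}} + C_{\text{infra}} + C_{\text{rel}},
\qquad
C_{\text{mgmt}} = C_{\text{people}} + C_{\text{agents}}
```

The cloud convenience premium is, in effect, what you pay a provider to keep $C_{\text{mgmt}}$ small. If agents shrink $C_{\text{people}}$ on infrastructure you run yourself, and $C_{\text{agents}}$ stays modest, that premium has less left to buy.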

So here’s the pivotal question—one I’ve been wrestling with as bills pile up and automation gets better. If agents can manage owned infrastructure at near-zero operational cost, does paying extra for cloud convenience still scale with your needs? The old assumptions might not add up anymore.

Six months ago I figured our cloud footprint was just “the price of doing business.” Now, I double-check every automation, trying to spot real costs versus old habits.

Take a step back. When you update cost models with AI—plugging in agents alongside your core team, recalculating overhead, and mapping TCO across different scales—you’ll see that cloud convenience premiums become a choice, not a default. It’s time to rerun the math using today’s reality. The decision is yours, not something you inherit from last year’s playbook.

Modeling Costs When Agents Join the Team: How to Update Cost Models with AI and Rethink Your Mix

First, lay out the framework for cost modeling with agents on board. No smoke and mirrors, just a clear path to an updated total cost of ownership. Start with a basic inventory: what workloads are you actually running right now? List each major service, app, or workflow that keeps your team in business. Next, grab your cloud bill and highlight every managed service (think database-as-a-service, managed Redis, cloud monitoring). For each one, ask: “Could an agent handle this?” Get concrete. Agents can provision databases, monitor uptime, and roll out deployments, sometimes with surprising consistency.
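The inventory step can be sketched as a simple filter: list each managed service with the ops tasks it does for you, and flag the ones whose tasks all fall inside what your agents already handle. The capability set, service names, and dollar figures below are hypothetical placeholders, not recommendations.

```python
from dataclasses import dataclass

# Hypothetical: ops tasks we assume our agent fleet already handles reliably.
AGENT_CAPABILITIES = {"provision", "monitor", "deploy", "alert_remediation"}


@dataclass
class ManagedService:
    name: str
    monthly_cost: float  # USD line item from the cloud bill (placeholder values below)
    ops_tasks: set[str]  # what the managed offering currently does for you


def agent_candidates(services: list[ManagedService],
                     capabilities: set[str] = AGENT_CAPABILITIES) -> list[ManagedService]:
    """Return services whose ops tasks are all within the agents' assumed capabilities."""
    return [s for s in services if s.ops_tasks <= capabilities]


inventory = [
    ManagedService("managed-postgres", 4_200, {"provision", "monitor", "backup", "failover"}),
    ManagedService("managed-redis", 900, {"provision", "monitor"}),
    ManagedService("cloud-monitoring", 1_500, {"monitor", "alert_remediation"}),
]

for s in agent_candidates(inventory):
    print(f"{s.name} (${s.monthly_cost:,.0f}/month): candidate for agent-run ops")
```

Postgres stays managed here only because backup and failover aren’t in the assumed capability set. The monthly cost is the full line item, not the savings; you’d still pay for the raw infrastructure underneath.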

Now, fold agent costs into your team’s total. What does it actually cost to run, maintain, and improve these automations over a year? Model your reliability and coverage: which workflows do agents cover fully, and where do humans still need to step in? Finally, run some sensitivity checks. What happens if scale doubles, if workloads spike, or if an agent fails unexpectedly? Lay out your decision thresholds. Where is the convenience premium worth it, and where does owned or partially owned infrastructure actually win?
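Here’s a minimal sketch of that yearly model with two of the sensitivity checks (doubled scale, an agent failure that pulls humans back in). Every input is a placeholder, and the scaling assumptions are mine: infrastructure grows linearly with scale while agent, reliability, and baseline human costs stay flat.

```python
from dataclasses import dataclass


@dataclass
class OwnedStack:
    infra_monthly: float        # raw compute, storage, networking (USD/month)
    agent_annual: float         # running, maintaining, and improving the automations
    human_hours_month: float    # hours humans still spend where agents fall short
    hourly_rate: float          # loaded cost per engineer hour
    reliability_annual: float   # redundancy, backups, extra monitoring for your SLA


def annual_tco(s: OwnedStack, scale: float = 1.0, extra_hours_month: float = 0.0) -> float:
    """Annual cost of the owned, agent-run option.

    Assumptions: infra scales linearly with `scale`; agent, reliability, and
    baseline human costs stay flat; `extra_hours_month` models humans stepping
    in when an agent fails.
    """
    infra = s.infra_monthly * scale * 12
    people = (s.human_hours_month + extra_hours_month) * s.hourly_rate * 12
    return infra + s.agent_annual + people + s.reliability_annual


# Placeholder inputs, not real benchmarks: swap in your own bill and estimates.
base = OwnedStack(infra_monthly=40_000, agent_annual=60_000,
                  human_hours_month=40, hourly_rate=120, reliability_annual=50_000)
MANAGED_MONTHLY = 100_000  # current managed-cloud bill at 1x scale (placeholder)

scenarios = {
    "baseline": {},
    "scale x2": {"scale": 2.0},
    "agent failure, +80 h/month": {"extra_hours_month": 80},
}

for label, kwargs in scenarios.items():
    owned = annual_tco(base, **kwargs)
    managed = MANAGED_MONTHLY * kwargs.get("scale", 1.0) * 12  # assumed linear too
    winner = "owned" if owned < managed else "managed"
    print(f"{label}: owned ${owned:,.0f}/yr vs managed ${managed:,.0f}/yr -> {winner}")
```

If your provider offers volume discounts, or your agent costs grow with fleet size, change those scaling assumptions before trusting the verdict.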

Back in those $10K/month startup days, speed and low management overhead felt almost priceless. Your edge then is launching fast and iterating faster. Agents can take on pieces of this, like automating staging deploys or keeping tabs on logs, but the full weight of managed services is often still worth the cost. You’re buying time you desperately need, not just technical labor. At that scale, every minute not spent fixing a server or patching an ancient OS is another sprint on your product roadmap.

When you cross into $100K/month territory, everything shifts. You’re not just burning cash for velocity—you’re competing on infrastructure economics. Agents now cover huge swaths of your ops, cutting headcount and reducing manual toil. The game is less about managed magic and more about wringing value from raw compute—buying lower-level resources and having agents stand in for what used to be sysadmin roles. That edge used to mean hiring three ops engineers; now it means deploying agent fleets that monitor, heal, and deploy across multiple platforms.
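The arithmetic behind that shift is simple enough to sketch. Assume (hypothetically) that some fixed share of the bill is convenience premium, and that self-managing carries a roughly fixed monthly overhead for agent tooling plus residual human ops. The premium grows with spend; the overhead mostly doesn’t.

```python
PREMIUM_RATE = 0.30        # hypothetical share of the bill that is convenience premium
OVERHEAD_MONTHLY = 15_000  # hypothetical agent tooling + residual human ops, per month


def net_monthly_benefit(bill: float, premium_rate: float = PREMIUM_RATE,
                        overhead: float = OVERHEAD_MONTHLY) -> float:
    """Premium avoided by self-managing, minus the fixed cost of doing it.
    Negative means the managed service is still the cheaper option."""
    return bill * premium_rate - overhead


def break_even_bill(premium_rate: float = PREMIUM_RATE,
                    overhead: float = OVERHEAD_MONTHLY) -> float:
    """Monthly bill at which the avoided premium exactly covers the overhead."""
    return overhead / premium_rate


for bill in (10_000, 100_000):
    print(f"${bill:,}/month bill: net monthly benefit {net_monthly_benefit(bill):+,.0f} USD")
print(f"break-even bill: ~${break_even_bill():,.0f}/month")
```

The specific numbers don’t matter; the crossover does. Below it, you’re mostly buying time, which is exactly the trade that made sense at $10K/month.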

Choosing a hybrid mix is where things get tactical. Keep the managed convenience services where agents still can’t meet the SLAs your business demands, or where the cost and risk of switching are too high. Everywhere else, where agents can handle ops and keep your overhead minimal, start moving workloads onto infrastructure you own or partially own. The hybrid isn’t ideology; it’s pragmatism.
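One way to make those two keep-managed conditions explicit is a per-workload rule: stay managed if observed agent-run uptime misses the required SLA, or if the migration wouldn’t pay for itself within some window. The 12-month payback window, workload names, and figures are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    required_sla: float     # uptime the business needs, e.g. 0.9995
    agent_sla: float        # uptime you've actually observed with agent-run ops
    monthly_savings: float  # USD/month saved by leaving the managed service
    switching_cost: float   # one-time migration cost and risk buffer, USD


def placement(w: Workload, max_payback_months: float = 12.0) -> str:
    """Hypothetical rule: stay managed if agents miss the SLA or the move pays back too slowly."""
    if w.agent_sla < w.required_sla:
        return "keep managed (SLA gap)"
    if w.monthly_savings <= 0:
        return "keep managed (no savings)"
    payback = w.switching_cost / w.monthly_savings
    if payback > max_payback_months:
        return f"keep managed (payback {payback:.0f} months)"
    return f"move to owned (payback {payback:.1f} months)"


workloads = [
    Workload("payments-db", 0.9999, 0.999, 8_000, 60_000),
    Workload("batch-workers", 0.999, 0.9995, 3_000, 9_000),
    Workload("search-cluster", 0.999, 0.999, 1_000, 40_000),
]
for w in workloads:
    print(f"{w.name}: {placement(w)}")
```

Putting the SLA check first reflects the priority in the text: a cheap migration that breaks an SLA the business depends on isn’t a saving.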

I was reminded of just how messy these transitions can get when I tried to switch our log aggregation from a managed cloud service to an agent-run stack. It should’ve been a breeze, but for two weeks our alert emails kept vanishing into spam folders, and we only realized when a production outage slipped through without a page. Scanning mail logs at 2am, I caught myself muttering about “robots managing robots” and wondering why I ever thought automation would mean less firefighting. In the end, the fix was stupidly simple—a single DNS record. But that small mistake shaped how I model agent-driven costs now. The hidden stuff bites you hardest.

Bring it all back to the spend optimization framework. Include agents in your team cost. Set clear thresholds for spend, and build your hybrid mix from the new numbers, not the assumptions you inherited back when cloud convenience was the only game in town. The inputs are different now. That’s where your next defensible decision starts.

Addressing Objections: Making Agent-First Models Work in Practice

Let’s start with reliability, because I get the hesitation. Agents aren’t magic. They still stumble, especially on odd edge cases or flaky dependencies. I’ve seen automations miss alerts or get stuck in error loops you’d never expect. The answer isn’t blind trust. It’s layering supervision on top, writing clear runbooks for common failures, and having real rollback policies ready to go. The good news is that agents are getting better fast, learning from incidents that, not long ago, would have taken hours to diagnose by hand. I’m not saying “set and forget.” I’m saying “set, verify, and stand ready to intervene: less often, but still prepared.”
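“Set, verify, and stand ready to intervene” can be sketched as a wrapper around any agent remediation: apply the fix, check it worked, roll back if it didn’t, and page a human after a capped number of attempts. The four callables are hypothetical hooks you’d wire to your own tooling; the attempt cap is what keeps an agent out of the error loops described above.

```python
from typing import Callable


def supervised_fix(
    apply_fix: Callable[[], None],
    verify: Callable[[], bool],
    rollback: Callable[[], None],
    page_human: Callable[[str], None],
    max_attempts: int = 2,
) -> bool:
    """Let an agent try a remediation, verify it, and roll back and escalate on failure."""
    for _ in range(max_attempts):
        apply_fix()
        if verify():
            return True
        rollback()  # undo before retrying so failed attempts don't stack up
    page_human(f"remediation failed after {max_attempts} attempts; rolled back")
    return False


# Usage with stand-in hooks that record what happened.
log: list[str] = []
ok = supervised_fix(
    apply_fix=lambda: log.append("restart service"),
    verify=lambda: False,  # simulate a fix that doesn't take
    rollback=lambda: log.append("rollback"),
    page_human=lambda msg: log.append(f"page: {msg}"),
)
print(ok, log)
```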

Security comes next, and it’s a fair concern. You don’t want agents with the keys to the kingdom running wild. The fix starts with least privilege: give agents only the permissions they absolutely need. Use policy-as-code to define and enforce those boundaries, keep the environment auditable with infrastructure-as-code, and back it all with detailed audit trails. If things go sideways, you can trace, contain, and revalidate exactly what the agent touched and when. It’s about setting hard borders, not letting automation sprawl into guesswork.
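The shape of least privilege plus an audit trail fits in a few lines: deny by default, allow a short list of actions per agent, and log every decision. This is a toy; in practice you’d express the same rules in your cloud provider’s IAM policies or a policy-as-code engine kept in version control. The agent names and action strings are hypothetical.

```python
import json
import time

# Hypothetical allowlist: each agent gets only the actions its job needs.
POLICY: dict[str, set[str]] = {
    "deploy-agent": {"deploy:rollout", "deploy:rollback"},
    "monitor-agent": {"metrics:read", "alerts:ack"},
}

AUDIT_LOG: list[str] = []  # in practice, ship this to append-only storage


def authorize(agent: str, action: str, resource: str) -> bool:
    """Deny by default, and record every decision so you can trace what an agent touched."""
    allowed = action in POLICY.get(agent, set())
    AUDIT_LOG.append(json.dumps({
        "ts": time.time(),
        "agent": agent,
        "action": action,
        "resource": resource,
        "allowed": allowed,
    }))
    return allowed


print(authorize("monitor-agent", "alerts:ack", "api-latency-alert"))
print(authorize("monitor-agent", "deploy:rollout", "checkout-service"))
```

Logging denials as well as grants matters: a denied request is often the first sign an agent is drifting outside its job.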

On hidden costs at scale: don’t let “near-zero ops” blind you to sneaky expenses. Egress fees, vendor lock-in penalties, integration drift over time, even the compute to run and monitor the agents themselves can all creep up. This is where you need a real cost-benefit analysis of the agent-first approach: model worst-case and best-case scenarios, not just the optimistic path. It’s not fun, but you’ll spot surprises before they hit your bank account.
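A best/expected/worst-case pass over those hidden costs can be as small as the sketch below. Every figure is a placeholder, the lock-in exit cost is assumed to be amortized monthly, and the projected savings number is hypothetical.

```python
# Hypothetical monthly hidden costs (USD) in three scenarios; replace with your own estimates.
HIDDEN_COSTS: dict[str, dict[str, float]] = {
    "egress":            {"best": 1_000, "expected": 3_000, "worst": 9_000},
    "integration_drift": {"best":   500, "expected": 1_500, "worst": 5_000},
    "agent_compute":     {"best":   800, "expected": 1_200, "worst": 2_500},
    "lock_in_exit":      {"best":     0, "expected": 1_000, "worst": 6_000},  # amortized
}


def scenario_totals(costs: dict[str, dict[str, float]]) -> dict[str, float]:
    """Sum each scenario across all hidden-cost line items."""
    totals: dict[str, float] = {}
    for by_scenario in costs.values():
        for scenario, amount in by_scenario.items():
            totals[scenario] = totals.get(scenario, 0) + amount
    return totals


PROJECTED_SAVINGS = 15_000  # hypothetical monthly savings from the move

for scenario, total in scenario_totals(HIDDEN_COSTS).items():
    verdict = "still ahead" if PROJECTED_SAVINGS > total else "underwater"
    print(f"{scenario}: hidden costs ${total:,.0f}/month -> {verdict}")
```

If the worst case flips the verdict, that isn’t automatically a reason to stay put, but it tells you which line item to pin down before you commit.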

So, what about actually pulling the trigger? You need clear criteria: a spend threshold, a specific SLA, or an expected switching cost low enough to justify the move. What makes the real difference is cloud governance plus a regular accountability rhythm for auditing management overhead, two core FinOps building blocks for making sustainable decisions at scale. Put these reviews on your calendar. The system’s defensibility isn’t just technical; it comes from continually asking hard questions, updating your inputs, and owning your mix with intention.

I’ll admit, though, there’s still a tension I haven’t fully worked out. Even now, with agents in place and hybrid models mapped out, I sometimes default to cloud services for small projects just because the muscle memory is strong. I know all the numbers, I see the case for change, and still—habit wins from time to time.

The Equation Hasn’t Changed—Just the Numbers

I’m not making a prediction. I’m asking a question: if AI dramatically reduces the cost of managing infrastructure, does the “pay more to manage less” value prop still hold? We’ve all built strategies on old equations, but this one demands fresh thinking.

Here’s your path forward. Include agents in your team cost, recalculate spend with those inputs, produce reliable TCO models across scales, and lock in a hybrid mix where agents stand in for classic management overhead. The decisions get sharper when you actually walk through the steps.

Ground your choices in today’s numbers, not yesterday’s shortcuts. That’s how you keep speed and sustainability working for you—not against you.

Enjoyed this post? For more insights on engineering leadership, mindful productivity, and navigating the modern workday, follow me on LinkedIn to stay inspired and join the conversation.