Use AI for Real Growth: Automate Routine, Elevate Outcomes

What Counts as ‘Real Work’ Now? The Homework Debate Hits Home

Last week, I sat across from Megan Frate—a high school teacher and PhD student—while the end-of-term energy hummed in the halls outside. She’d just caught several students running their homework through an AI chatbot. I could see where she was coming from. On one hand, I was genuinely impressed by the students’ creativity and hustle. On the other, I felt uneasy. What are they actually skipping? There was this tension: are we helping them learn how to learn, or just teaching them new ways to check a box?

Of course, whenever a new tool arrives, the same debate flares up. It’s not just AI. When calculators first hit classrooms, there were panics over kids never learning to multiply. The internet brought the same drama in a different decade. What counts as real work shifts with every breakthrough, and we resist the shift mostly because the old way is what we already know and trust.

Fast forward to my work in software and product teams: I see the same split every week between those who use AI for real growth and those who treat it like a shortcut. Some folks clutch tightly to “real work” as if it’s sacred. If you aren’t typing every line, did you even build it? Others race to offload whole projects to AI like they’re playing hot potato. Neither group quite lands it. Holding too tight means missing out on new leverage. Moving too fast means skills dry up, or meaning gets lost.

So here’s where I’ve landed, after plenty of second-guessing. As our tools evolve, our definition of value has to shift with them. ‘Real work’ isn’t just the work you do by hand. It’s the outcomes you own and the strengths only you can bring: judgment, creativity, empathy, and reframing the problem itself. Treat AI as leverage, not as a crutch or a shortcut to mindless completion. It should free us up to do the kind of work that actually matters, and that feels unique because we did it.

Clarifying ‘Real Work’: What Deserves Your Attention Now

I spend most of my days helping teams and individuals rethink what work is truly worth doing themselves and what to let go of. If there’s one thing I’m clear about, it’s this: no system, AI or otherwise, is going to make that call for you. You have to get comfortable drawing the line.

Six months ago, I thought delegation was mostly about efficiency: hand off what slows you down, keep what sparks joy. I was too optimistic. In reality, outcomes matter more than artifacts. The deliverables only matter because of the change they create or the problems they solve.

The hardest parts of any job have always been judgment, creativity, empathy, and the ability to reframe problems. That’s the edge we have, and it’s where AI helpers keep hitting invisible ceilings. I started asking, “What do we actually want students to learn? What outcomes are we really aiming for?” Once you strip away the busywork, those sticky human capabilities become obvious—the very gaps where AI has trouble replacing things like empathy, judgment, ethics, and hope. If you feel lost in the tech hype, remember your real value starts where your tools hit their limits.

Bringing this back to software and product work, the measure of success with AI for real work isn’t keystrokes or perfectly formatted pull requests. It’s about shipping meaningful change. The teams who keep their eyes on delivery outcomes—specifically those measured by DORA metrics—are twice as likely to reach or surpass their business goals. You’re not rewarded for how many times you rewrite a feature or polish every line. You’re rewarded for getting dependable value out the door.

Here’s the pain I see week after week: people burn hours on checkbox outputs, copy-paste prompts, and letting AI run wild on tasks that actually need human nuance. If you start treating completion as the only metric, your distinctive skill fades into noise. And honestly, when you rely on an AI assistant for routine tasks over time, your hands-on skill erodes faster than with traditional automation. You may not notice it for months, but suddenly you’re stuck if the tools glitch—or if you need to do something original.

If you’ve felt that unease, there’s a way through. I’ve been developing and sharing a four-part decision approach to turn task completion into real growth. I’ll break that down next. Here’s what to expect: you’ll be able to look at your backlog and sort out what should be automated, what deserves a push for growth, and how to reflect on what moves you forward. We’re moving from “done” to “better,” and it starts with owning how you choose.

The Sorting Method: Automate, Elevate, Reflect

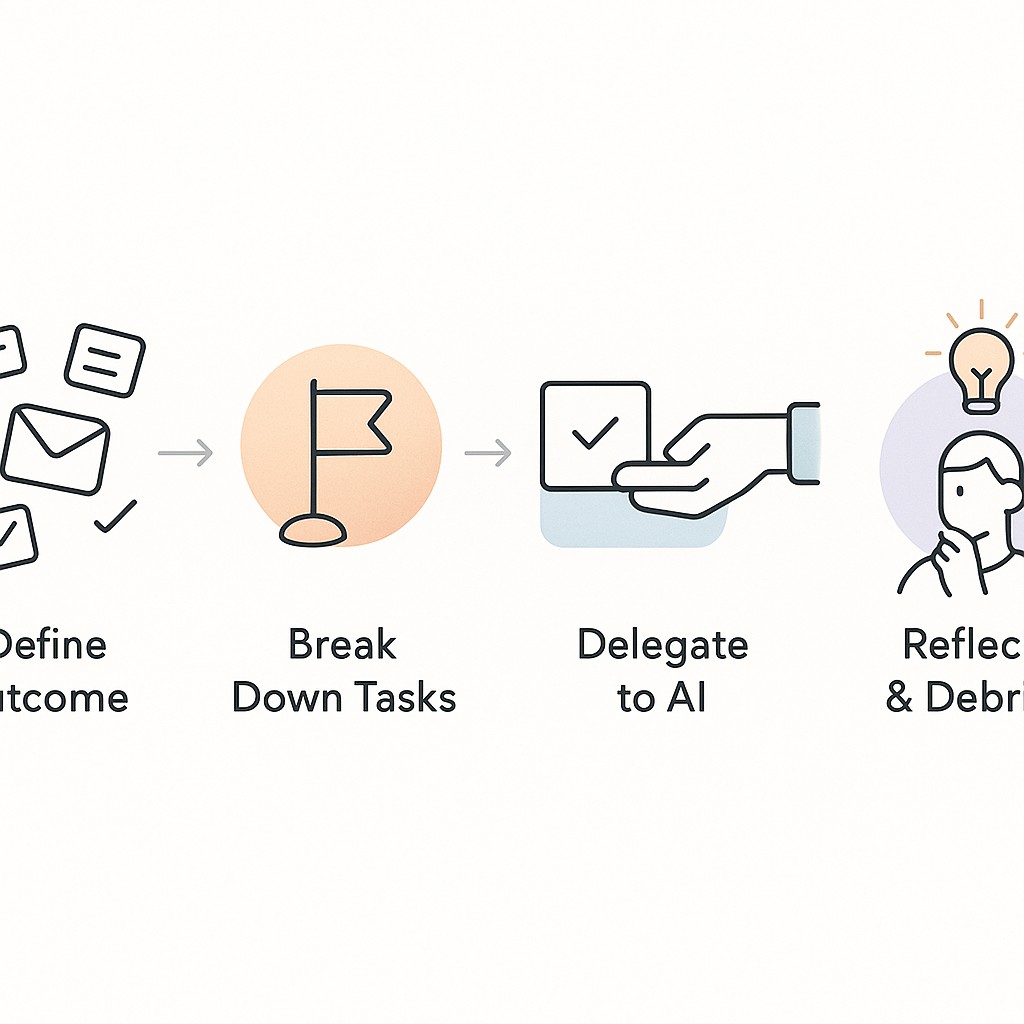

When I coach teams, or when I pick apart my own workflow, I use four steps to pull work apart: Define the outcome, Decompose the job, Delegate (to yourself or AI), and Debrief to lock in what you learn. This maps directly to my day, because everything from code reviews to product launches boils down to that sequence. The first move is getting painfully clear on the value you actually want.

Then you break the work into digestible pieces. Decide which to automate and which to push yourself on. Finally, you spot the next edge by pausing for a short reflection. It sounds simple, but these steps stabilize the whole process. Most frustration is just unframed expectations or blurry task boundaries. If you start with outcome clarity and stay honest about what only humans can do, the rest falls into place. If you get stuck in endless back-and-forth or produce checkbox work, odds are you missed one of those steps.

Step one is Define. I always ask: what am I actually trying to accomplish, and where does my human judgment advantage—creativity, empathy, and discernment—matter most? If you don’t call that out upfront, everything starts to flatten into “just finish.” The real gains come from naming the skills at play, out loud if you can. For technical teams, problem framing always cuts down wasted cycles, but for anyone, the clarity pays off fast. If you’re after growth—in students, colleagues, yourself—it’s always anchored in the stuff only humans can bring.

Messy moment. Sometimes I get this part completely wrong. Last winter, I tried to “optimize” my home office by putting everything on automatic timers—lights, coffee, workspace music. After a week, my mornings felt somehow hollow. I’d saved minutes, but lost the small rituals that get me actually ready to coach or write. It forced me to step back, and now I let some things stay manual just because they’re grounding, not efficient.

Step two is Decompose. Break your project or task into units. Which ones are repeatable and have clear specs? Which ones look murky or ask for invention? Signals for routine chunks are things like “I do this same formatting every week” or “the result is always a list of links.” The ambiguous stuff shouts for human effort. If the instructions feel squishy, or there’s a surprise every time, you’re in the elevate zone. Give yourself permission to be literal here. Don’t pretend something is standard if it always winds up unpredictable.

Step three: Delegate. Automate the Routine, Elevate the Essential. Anything you can write specs for—where the output is deterministically checkable—should go to AI with solid, judgment-centered guardrails. But the real work (problem reframing, designing exceptions, anything that actually moves the needle) stays in your hands. That’s what “automate” vs “elevate” actually means—not a value judgment, but a tactical split. So next time you’re tempted to hand off your whole backlog, pause and ask what gets automated, and what deserves a push for new understanding.
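If it helps to see the split written down, here is a minimal sketch of the Decompose and Delegate steps as a sorting rule. The `Task` fields and the `sort_task` function are hypothetical names I made up for illustration, and the rule itself (automate only when a chunk is repeatable, has a clear spec, and is mechanically checkable) is my reading of the signals above, not a formal method.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    repeatable: bool   # e.g. "I do this same formatting every week"
    clear_spec: bool   # you could write the instructions down
    checkable: bool    # the output can be verified mechanically


def sort_task(task: Task) -> str:
    """Return "automate" only when all three signals hold, otherwise "elevate"."""
    if task.repeatable and task.clear_spec and task.checkable:
        return "automate"
    return "elevate"


# Illustrative backlog; the entries are invented examples.
backlog = [
    Task("weekly release notes formatting", True, True, True),
    Task("redesign onboarding flow", False, False, False),
    Task("summarize support tickets", True, True, False),
]

for task in backlog:
    print(f"{task.name}: {sort_task(task)}")
```

The rule is deliberately strict: anything that fails even one signal stays with you, which matches the advice not to pretend something is standard when it keeps turning out unpredictable.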

Step four is the one most often skipped: Debrief. After each execution, big or small, add a brief post-task reflection, literally one or two sentences. What did I learn? What’s still clunky? Where did I miss the chance to push myself, or to leverage tools more effectively? Reflect and level up, every time. This locks in gains, keeps your skills sharp, and quietly defines the next experiment you’ll run. The effect compounds: even basic projects start feeling like stepping stones, not checklists. If you skip the review, you lose the momentum.

Try it with your next piece of work—a ticket, a lesson plan, or just your morning routine. Not every outcome needs your fingerprints, and not every shortcut is a trap. Decide, then act. Automate where you can, elevate where it counts. And after each round, take a minute to synthesize. That’s how you stop work from feeling like an endless chore, and start treating it like a craft to shape over time.

Reliable AI Outputs Without Losing Your Edge

The goal here is simple. You want AI to do the repeatable stuff—triage, formatting, summaries—without chasing your tail for hours fixing errors. When I set up a list of tasks for AI, I start with templates, clear examples, and short verification loops. You’re aiming for predictable outputs from predictable inputs. That means giving the AI specifics—like “return this in a table, only include these columns”—and making sure you can quickly check the result. You want a steady rhythm. Input, output, verify, move on.

Let’s get granular. The minimal prompt toolkit you need: define what you expect (structure), toss in two or three sharp examples (few-shot), lock the tone or style if it matters (constraints), and add a simple pass/fail check for each output. Don’t just accept whatever comes back. Push past the bare minimum. This is where you get confidence, not just speed. Before I shifted my own workflow, I was often disappointed with AI drafts. Once I started framing prompts clearly, the back-and-forth cycle got cut down, and iteration stabilized fast.
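Here is one way that toolkit could look in practice. It is a sketch under my own assumptions: `build_prompt` and `passes_check` are hypothetical helper names, the prompt layout is just one reasonable arrangement, and the pass/fail check assumes you asked for a pipe-delimited table with a fixed header. No particular AI service is called here; you would send the resulting string to whichever tool you use.

```python
def build_prompt(structure: str, examples: list[tuple[str, str]],
                 constraints: list[str], task_input: str) -> str:
    """Assemble structure, constraints, few-shot examples, and the input into one prompt."""
    parts = [f"Output format: {structure}"]
    for rule in constraints:
        parts.append(f"Constraint: {rule}")
    for sample_in, sample_out in examples:
        parts.append(f"Example input: {sample_in}\nExample output: {sample_out}")
    parts.append(f"Input: {task_input}")
    return "\n\n".join(parts)


def passes_check(output: str, required_columns: list[str]) -> bool:
    """Pass/fail: the first line must be a pipe-delimited header with exactly these columns."""
    lines = output.strip().splitlines()
    if not lines:
        return False
    header = [cell.strip() for cell in lines[0].strip("|").split("|")]
    return header == required_columns


prompt = build_prompt(
    structure="a table with only the columns Title and Owner",
    examples=[("Fix login bug, assigned to Sam", "| Fix login bug | Sam |")],
    constraints=["neutral tone", "no extra commentary"],
    task_input="Update docs, assigned to Priya",
)
print(prompt)
```

The point of keeping the check separate from the prompt is that you can rerun it on every output without rereading the whole thing, which is what makes the verify step short enough to actually do.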

If you’re worried about the time it takes to set these up, or about losing hands-on skill, you’re not alone. It feels slow upfront, and sometimes that nags at you when you’re hustling from one thing to the next. Here’s what changes the math. Once you save a prompt (or a short checklist for reviewing outputs), you’ll reuse it ten or twenty times. That upfront investment pays off quickly. I also keep a habit where, after every few automated cycles, I do one rep manually, just to keep my own chops alive. You get quality through audits, not blind trust, and you grow by alternating tool use with intentional practice. It’s not all-or-nothing; it’s an ongoing calibration.
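The manual-rep habit and the audit habit can both be reduced to a couple of tiny helpers. This is a sketch only: `needs_manual_rep` and `pick_audit_sample` are hypothetical names, and the defaults (every fifth run, three items per audit) are placeholder numbers I picked, not a recommendation from any study.

```python
import random
from typing import Optional


def needs_manual_rep(automated_runs: int, every: int = 5) -> bool:
    """True on every `every`-th automated run, as a cue to do the next one by hand."""
    return automated_runs > 0 and automated_runs % every == 0


def pick_audit_sample(outputs: list[str], k: int = 3,
                      seed: Optional[int] = None) -> list[str]:
    """Pick up to k outputs at random for a manual review."""
    rng = random.Random(seed)
    return rng.sample(outputs, min(k, len(outputs)))


for run in range(1, 11):
    if needs_manual_rep(run):
        print(f"After run {run}: do the next one manually")
```

Random sampling matters more than it looks. If you only review the outputs that already seem off, you never find the ones that look fine and are wrong.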

Concrete example: say you’re triaging bug reports. You feed the AI a template, include a couple of example reports, and specify a clear rubric: severity, reproducibility, description clarity. Drafting unit tests? Same approach: structure, examples, checks. Summarizing user interviews? Constrain style, set points to hit, then skim the result yourself. It’s the same principle Megan Frate and I talked through earlier. Automation holds up best when you pair it with a real review, not just a blind checkbox. That’s how you keep automation trustworthy, while your own skill keeps compounding in the background.
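For the bug triage case, the "real review" can start with a mechanical check before you ever read the output. The sketch below assumes you asked the AI to reply with a JSON object containing the three rubric fields. The field names, the allowed values in `ALLOWED`, and the `check_triage` function are all my own illustrative choices, so swap in whatever scale your team actually uses.

```python
import json

# Assumed rubric values; adjust these to your own team's scale.
ALLOWED = {
    "severity": {"low", "medium", "high", "critical"},
    "reproducibility": {"always", "sometimes", "never"},
    "description_clarity": {"clear", "partial", "unclear"},
}


def check_triage(raw: str) -> list[str]:
    """Return a list of problems; an empty list means the output passes."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return ["output is not valid JSON"]
    if not isinstance(data, dict):
        return ["output is not a JSON object"]
    problems = []
    for field, allowed in ALLOWED.items():
        value = data.get(field)
        if not isinstance(value, str) or value not in allowed:
            problems.append(f"{field}: unexpected value {value!r}")
    return problems


reply = '{"severity": "high", "reproducibility": "always", "description_clarity": "clear"}'
print(check_triage(reply))
```

A check like this only tells you the output is well-formed, not that the severity call is right. That second judgment is the part you keep for yourself.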

Use AI for Real Growth: Turn Task Completion Into Growth, Not Just Done

Let’s flip the old script: use AI for real growth so you move from “just finishing” to actually getting better every time. To start, pick one thing off your backlog today and handle it with this mindset: not just done, but improved.

Once you use an AI tool (or tackle something manually), stop for a minute and run a quick debrief. What worked? What surprised me? What did I learn? What will I change next time? Seriously, it takes sixty, maybe 120 seconds tops. That simple reflection keeps you moving forward, not just ticking boxes.

This is something I haven’t fully solved. Even when I know I should take time for reflection, I still skip it some weeks, especially when deadlines pile up. The process isn’t perfect, but the intention sticks.

Now, here’s a point I wish I’d caught earlier. Protecting hands-on skill takes intention, not just hope. You have to schedule manual reps for the hard or creative parts, while letting automation handle the grind. If I automate bug triage, I’ll still review a few reports by hand each week. The key is tying your practice to real outcomes, not vanity metrics. That combination—routine handled by tools, edge sharpened by you—keeps your skills from atrophying.

What’s wild is how small tweaks compound. If you debrief and adjust every week, one tiny experiment at a time, you start seeing faster turnaround, cleaner quality, and better instinct for what’s worth automating. It’s the same tension Megan Frate was worried about in education: do we risk losing what matters in the rush to offload? Here, you meet it head-on, developing a personal intuition for when your work stands out, when it scales, and when it just needs to be finished.

Let your tools evolve, but let your value lead. This is your call, not a system’s. Choose deliberately, and keep your real strengths at the center.